Documentation

Apache HugeGraph Documentation

Apache HugeGraph is a complete graph database ecosystem, supporting OLTP real-time queries, OLAP offline analysis, and AI intelligent applications.

Quick Navigation by Scenario

Ecosystem Overview

┌─────────────────────────────────────────────────────────────────┐
│                   Apache HugeGraph Ecosystem                    │
├─────────────────────────────────────────────────────────────────┤
│  ┌─────────────┐  ┌─────────────┐  ┌─────────────────────────┐  │
│  │  HugeGraph  │  │  HugeGraph  │  │      HugeGraph-AI       │  │
│  │   Server    │  │  Computer   │  │  (GraphRAG/ML/Python)   │  │
│  │   (OLTP)    │  │   (OLAP)    │  │                         │  │
│  └─────────────┘  └─────────────┘  └─────────────────────────┘  │
│         │                │                      │               │
│  ┌──────┴────────────────┴──────────────────────┴────────────┐  │
│  │                    HugeGraph Toolchain                    │  │
│  │  Hubble (UI) | Loader | Client (Java/Go/Python) | Tools   │  │
│  └───────────────────────────────────────────────────────────┘  │
└─────────────────────────────────────────────────────────────────┘

Core Components

- HugeGraph Server - Core graph database with REST API + Gremlin + Cypher support

- HugeGraph Toolchain - Client SDKs, data import, visualization, and operational tools

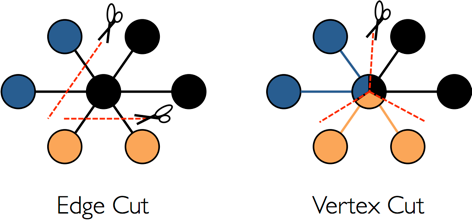

- HugeGraph Computer - Distributed graph computing (Vermeer high-performance in-memory / Computer massive external storage)

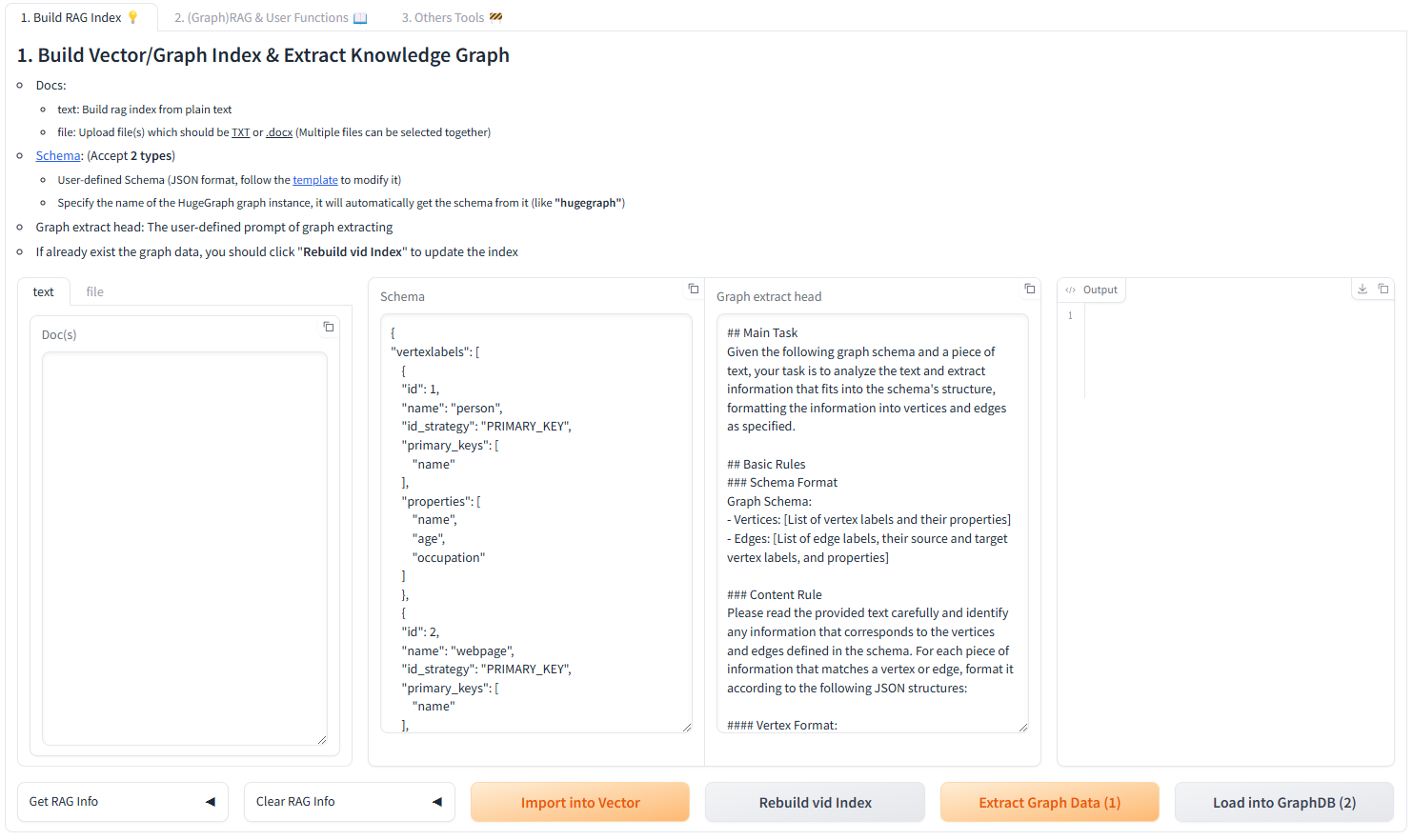

- HugeGraph-AI - GraphRAG, knowledge graph construction, 20+ graph ML algorithms

Deployment Modes

| Mode | Use Case | Data Scale |
|---|---|---|
| Standalone | High-speed, stable, compute-storage integrated | < 4TB |
| Distributed | Massive storage, compute-storage separated | < 1000TB |
| Docker | Quick start | Any |

📖 Detailed Introduction

1 - Introduction with HugeGraph

Summary

Apache HugeGraph is an easy-to-use, efficient, general-purpose open-source graph database system (Graph Database, GitHub project address). It implements the Apache TinkerPop3 framework, is fully compatible with the Gremlin query language, and also supports the Cypher query language (OpenCypher standard).

With complete toolchain components, it helps users easily build applications and products based on graph databases. HugeGraph supports fast import of more than 10 billion vertices and edges, and provides millisecond-level relational query capability (OLTP).

It also supports large-scale distributed graph computing (OLAP).

Typical application scenarios of HugeGraph include deep relationship exploration, association analysis, path search, feature extraction, data clustering, community detection, and knowledge graphs. It is applicable to business fields such as network security, telecommunication fraud, financial risk control, advertising recommendation, social networks, and intelligent robots.

Features

HugeGraph supports graph operations in online and offline environments, batch data import, and efficient analysis of complex relationships. It integrates seamlessly with big data platforms.

HugeGraph supports multi-user parallel operations. Users can enter Gremlin/Cypher query statements and get graph query results promptly. They can also call the HugeGraph API in user programs for graph analysis or queries.

This system has the following features:

- Ease of use: HugeGraph supports the Gremlin/Cypher graph query languages and a RESTful API, provides common interfaces for graph retrieval, and ships full-featured peripheral tools that make graph-based query and analysis operations easy to implement.

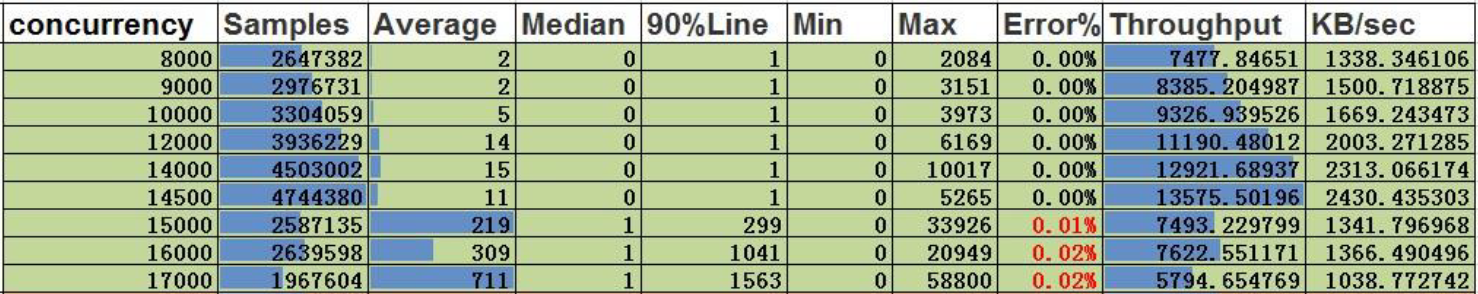

- Efficiency: HugeGraph is deeply optimized for graph storage and graph computing and provides a variety of batch import tools that can rapidly import tens of billions of records. Optimized queries give millisecond-level responses for graph retrieval, and the system supports simultaneous online real-time operations by thousands of users.

- Universal: HugeGraph supports the Apache TinkerPop Gremlin standard graph query language and the Property Graph standard graph modeling method, supports graph-based OLTP and OLAP schemes, and integrates with the Apache Hadoop and Apache Spark big data platforms.

- Scalable: HugeGraph supports distributed storage, multiple data replicas, and horizontal scaling. It has multiple built-in backend storage engines, and new backend storage engines can be added through plug-ins.

- Open: HugeGraph code is open source (Apache 2 License). Users can modify and customize it independently and selectively contribute back to the open-source community.

Deployment Modes

HugeGraph supports multiple deployment modes to meet different scales and scenarios:

Standalone Mode

- Server + RocksDB backend storage

- Suitable for development, testing, and small-to-medium scale data (< 4TB)

- Docker quick start: docker run hugegraph/hugegraph (see Server Quickstart)

Distributed Mode

- HugeGraph-PD: Metadata management and cluster scheduling

- HugeGraph-Store (HStore): Distributed storage engine

- Supports horizontal scaling and high availability (< 1000TB data scale)

- Suitable for production environments and large-scale graph data applications

Quick Start Guide

| Use Case | Recommended Path |
|---|---|
| Quick experience | Docker deployment |
| Build OLTP applications | Server → REST API / Gremlin / Cypher |
| Graph analysis (OLAP) | Vermeer (recommended) or Computer |
| Build AI applications | HugeGraph-AI (GraphRAG/Knowledge Graph) |
| Batch data import | Loader + Hubble |

System Functions

- Supports batch import of data from multiple data sources (including local files, HDFS files, MySQL databases, and other data sources), and supports import of multiple file formats (including TXT, CSV, JSON, and other formats)

- Provides a visual interface for operating on, analyzing, and displaying graphs, lowering the barrier to entry for users

- Optimized graph interface: shortest path (Shortest Path), K-step connected subgraph (K-neighbor), K-step to reach the adjacent point (K-out), personalized recommendation algorithm PersonalRank, etc.

- Implemented on the Apache TinkerPop3 framework and supports the Gremlin graph query language

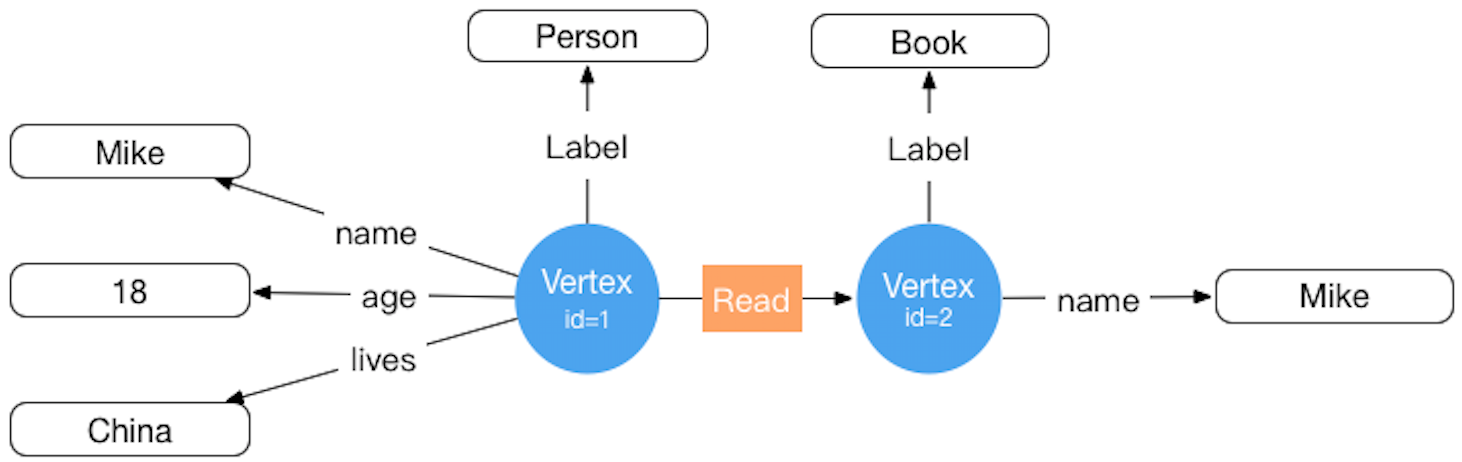

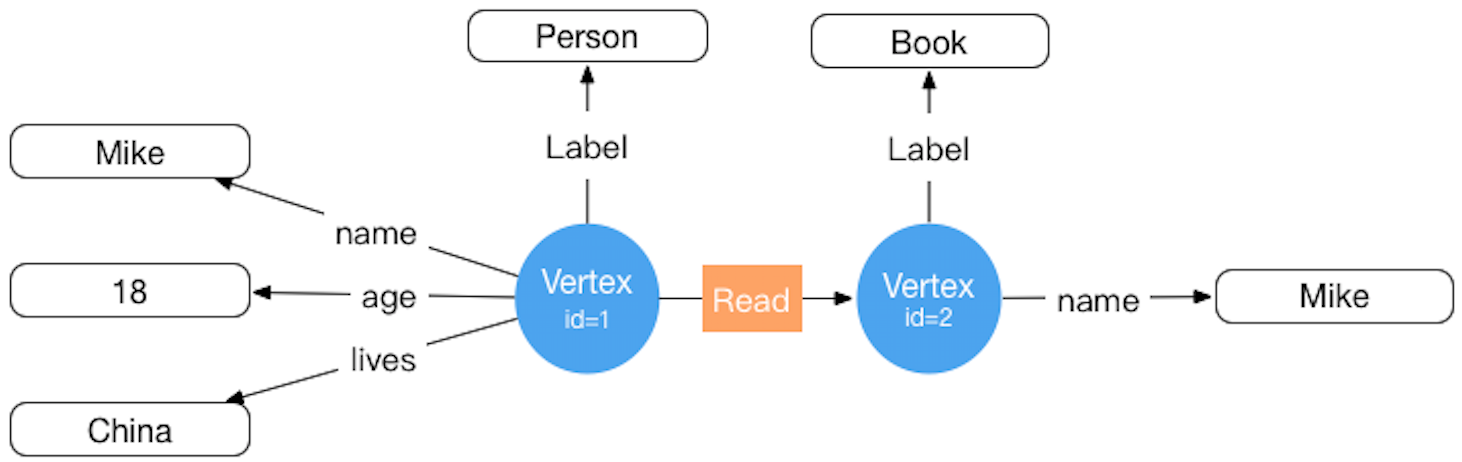

- Supports property graphs: properties can be added to vertices and edges, with a rich set of property types

- Has independent schema metadata, providing powerful graph modeling capabilities and facilitating third-party system integration

- Supports multiple vertex ID strategies: primary key ID, automatic ID generation, user-defined string ID, and user-defined numeric ID

- The properties of edges and vertices can be indexed to support exact query, range query, and full-text search

- The storage system uses a plug-in design, supporting RocksDB (standalone/cluster), Cassandra, ScyllaDB, HBase, MySQL, PostgreSQL, Palo, Memory, etc.

- Integrates with big data systems such as HDFS, Spark/Flink, and GraphX, and supports BulkLoad operations to import massive data

- Supports HA (high availability), multiple data replicas, backup and recovery, monitoring, distributed tracing, etc.
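
The K-neighbor and K-out traversals named in the list above can be illustrated on a toy in-memory graph. The sketch below is not HugeGraph's implementation or API; it assumes that K-neighbor means "every vertex reachable within k hops" and K-out means "every vertex whose shortest distance is exactly k hops". The function names and the sample graph are hypothetical.

```python
from collections import deque


def bfs_depths(adjacency, source, max_depth):
    """Return {vertex: shortest hop count} for vertices within max_depth of source."""
    depths = {source: 0}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        if depths[current] == max_depth:
            continue  # do not expand beyond the requested depth
        for neighbor in adjacency.get(current, ()):
            if neighbor not in depths:
                depths[neighbor] = depths[current] + 1
                queue.append(neighbor)
    return depths


def k_neighbor(adjacency, source, k):
    """Vertices reachable from source within k hops (source itself excluded)."""
    return {v for v, d in bfs_depths(adjacency, source, k).items() if 0 < d <= k}


def k_out(adjacency, source, k):
    """Vertices whose shortest distance from source is exactly k hops."""
    return {v for v, d in bfs_depths(adjacency, source, k).items() if d == k}


# Hypothetical directed toy graph: vertex -> outgoing neighbors
TOY_GRAPH = {
    "marko": ["vadas", "josh", "lop"],
    "josh": ["lop", "ripple"],
    "peter": ["lop"],
}
```

In a real deployment these traversals run inside the server against the stored graph; the breadth-first search here only shows how the two result sets differ.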

Modules

- HugeGraph-Store: a distributed storage engine that manages large-scale graph data by integrating storage and computation within a unified system.

- HugeGraph-PD: the Placement Driver, which manages metadata and coordinates storage nodes.

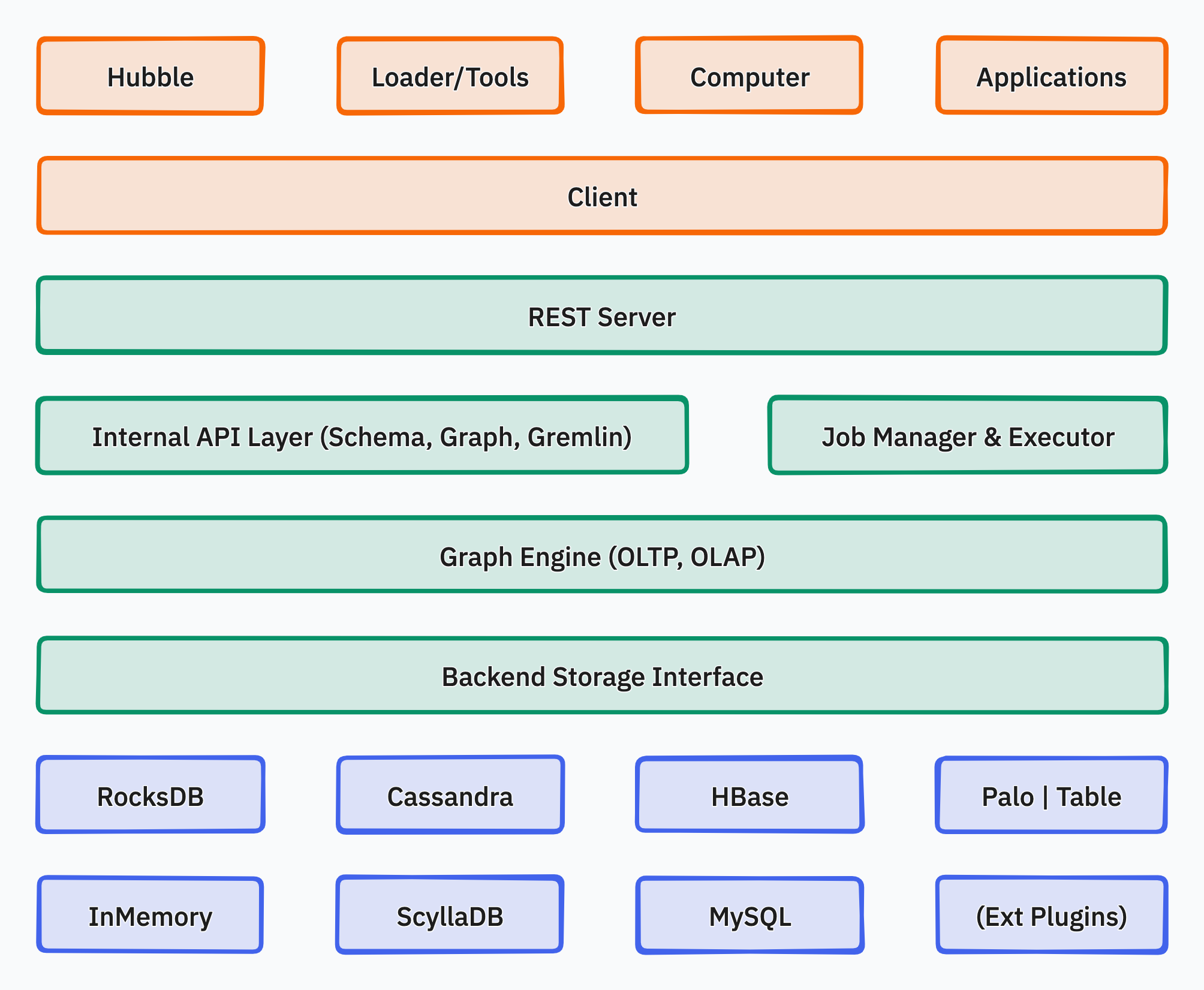

- HugeGraph-Server: the core part of the HugeGraph project, containing the Core, Backend, API, and other submodules:

  - Core: implements the graph engine, connecting to the Backend module below and supporting the API module above.

  - Backend: stores graph data in the backend. Supported backends include Memory, Cassandra, ScyllaDB, RocksDB, HBase, MySQL, and PostgreSQL; users can choose one according to their situation.

  - API: the built-in REST Server provides a RESTful API to users and is fully compatible with Gremlin queries. (Supports distributed storage and computation pushdown.)

- HugeGraph-Toolchain: (Toolchain)

  - HugeGraph-Client: provides a RESTful API client for connecting to HugeGraph-Server, available in Java, Python, and Go.

  - HugeGraph-Loader: a data import tool based on HugeGraph-Client that transforms ordinary text data into vertices and edges and inserts them into the graph database.

  - HugeGraph-Hubble: HugeGraph’s web visualization management platform, a one-stop visual analysis platform covering the whole process from data modeling, to fast data import, to online and offline data analysis, and unified graph management.

  - HugeGraph-Tools: HugeGraph’s deployment and management tool, including graph management, backup/recovery, Gremlin execution, and other functions.

- HugeGraph-Computer: a distributed graph processing system (OLAP). It is an implementation of Pregel, runs on clusters such as Kubernetes/Yarn, and supports large-scale graph computing. It also provides Vermeer, a lightweight graph computing engine suitable for a quick start and small-to-medium scale graph analysis.

- HugeGraph-AI: HugeGraph’s independent AI component, providing LLM/GraphRAG intelligent Q&A, automated knowledge graph construction, graph neural network training/inference, Python-Client, and other features, with 20+ built-in graph machine learning algorithms that are continuously updated.

2 - Download Apache HugeGraph

Instructions:

- It is recommended to use the latest version of the HugeGraph software package. Please select Java11 for the runtime environment.

- To verify downloads, use the corresponding hash (SHA512), signature, and Project Signature Verification KEYS.

- Instructions for checking hash (SHA512) and signatures are on the Validate Release page, and you can also refer to ASF official instructions.

- Note: The version numbers of all HugeGraph components are kept consistent, and the Maven artifacts such as client/loader/hubble/common share the same version number. For dependency declarations, refer to the maven example.

- Compatibility note: after HugeGraph graduated in January 2026, download paths moved from /incubator/hugegraph to /hugegraph. Historical release file names may still include -incubating-.
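
As a rough illustration of the path and file-name rules above, the following sketch builds the download locations for a server binary package. The URL pattern is the one used by the wget example in the Server quick start of this documentation; the function name is hypothetical, and the .asc signature suffix follows the general ASF release convention rather than anything stated here.

```python
BASE_URL = "https://downloads.apache.org/hugegraph"


def release_urls(version):
    """Build the tarball, checksum, and signature URLs for one server release."""
    # File names keep the historical "-incubating-" marker, as noted above.
    file_name = f"apache-hugegraph-incubating-{version}.tar.gz"
    tarball = f"{BASE_URL}/{version}/{file_name}"
    return {
        "tarball": tarball,
        "sha512": tarball + ".sha512",
        "signature": tarball + ".asc",  # assumed ASF convention
    }


if __name__ == "__main__":
    for kind, url in release_urls("1.7.0").items():
        print(f"{kind}: {url}")
```

Always confirm the actual links on the download page before scripting against them, since archived releases may live under a different path.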

Latest Version 1.7.0

Binary Packages

Source Packages

Please refer to build from source.

Archived Versions

Note: 1.3.0 is the last major version compatible with Java 8. Please migrate to Java 11 as soon as possible, since older Java versions carry more security risks and performance penalties. Starting from version 1.5.0, a Java 11 runtime environment is required.

1.5.0

Binary Packages

Source Packages

1.3.0

Binary Packages

Source Packages

1.2.0

Binary Packages

Source Packages

1.0.0

Binary Packages

Source Packages

3 - Quick Start

3.1 - HugeGraph (OLTP)

DeepWiki provides real-time updated project documentation with more comprehensive and accurate content, suitable for quickly understanding the latest project information.

📖 https://deepwiki.com/apache/hugegraph

GitHub Access: https://github.com/apache/hugegraph

3.1.1 - HugeGraph-Server Quick Start

1 HugeGraph-Server Overview

HugeGraph-Server is the core part of the HugeGraph project and contains submodules such as graph-core, backend, and API.

The Core module is an implementation of the TinkerPop interface. The Backend module saves the graph data to the data store; for version 1.7.0+, supported backends include RocksDB (standalone default), HStore (distributed), HBase, and Memory. The API module provides an HTTP server that converts the client’s HTTP requests into calls to the Core module.

⚠️ Important Change: Starting from version 1.7.0, legacy backends such as MySQL, PostgreSQL, Cassandra, and ScyllaDB have been removed. If you need to use these backends, please use version 1.5.x or earlier.

Two spellings, HugeGraph-Server and HugeGraphServer, appear in this document (other modules are similar). Their meanings differ only slightly: HugeGraph-Server refers to the code of the server-related components, while HugeGraphServer refers to the service process.

2 Dependency for Building/Running

2.1 Install Java 11 (JDK 11)

You need Java 11 to run HugeGraph-Server (versions before 1.5.0 are compatible with Java 8, but this is not recommended). Install and configure it yourself.

Be sure to run the java -version command to check the JDK version before proceeding.

Note: Using Java 8 loses some security guarantees; we recommend Java 11 in production.
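
The version check above can be automated. The sketch below parses the text printed by java -version and compares the major version against the Java 11 requirement; the function names are hypothetical, and the parser only assumes the usual version "…" banner format.

```python
import re


def parse_java_major(version_output):
    """Extract the Java major version from `java -version` output."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no Java version string found")
    major = int(match.group(1))
    # Java 8 and earlier report themselves as 1.x, e.g. "1.8.0_381".
    if major == 1 and match.group(2) is not None:
        return int(match.group(2))
    return major


def meets_requirement(version_output, minimum=11):
    """True if the reported Java version is at least `minimum`."""
    return parse_java_major(version_output) >= minimum
```

Note that java -version writes its banner to standard error, so a caller using subprocess should read the stderr stream of the finished process rather than stdout.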

3 Deploy

There are four ways to deploy HugeGraph-Server components:

- Method 1: Use Docker container (Convenient for Test/Dev)

- Method 2: Download the binary tarball

- Method 3: Source code compilation

- Method 4: One-click deployment

Note: If the server is exposed to the public network, you must enable Auth authentication to keep it safe (this also applies to legacy versions).

3.1 Use Docker container (Convenient for Test/Dev)

You can refer to the Docker deployment guide.

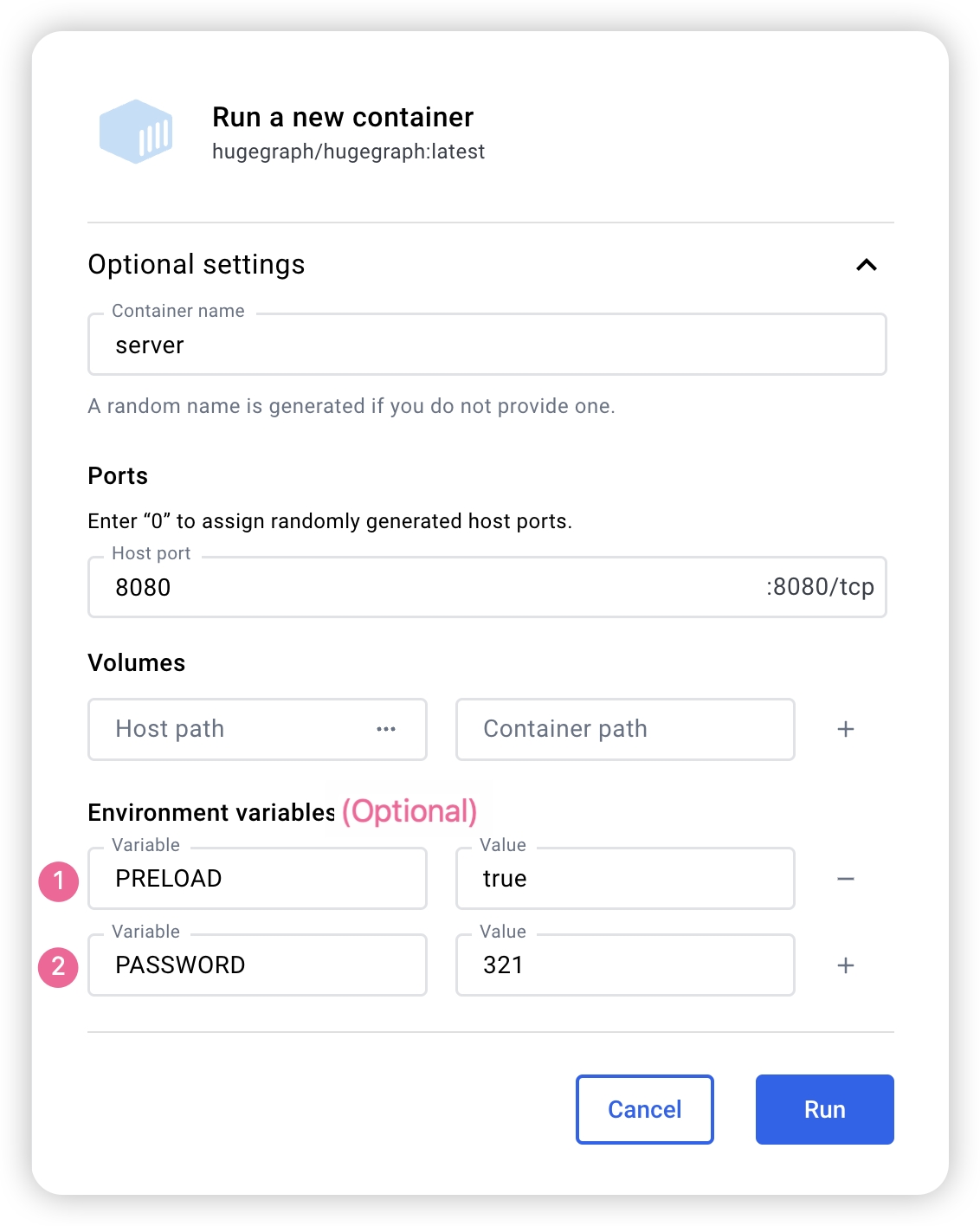

We can use docker run -itd --name=server -p 8080:8080 -e PASSWORD=xxx hugegraph/hugegraph:1.7.0 to quickly start a HugeGraph Server with a built-in RocksDB backend.

Optional:

- use docker exec -it server bash to enter the container and perform operations (replace server with the name you passed to --name).

- use docker run -itd --name=graph -p 8080:8080 -e PRELOAD="true" hugegraph/hugegraph:1.7.0 to start with a built-in example graph. We can use the RESTful API to verify the result; see 5.1.8 for the detailed steps.

- use -e PASSWORD=xxx to enable auth mode and set the password for admin. You can find more details in Config Authentication.
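
The docker run variants above differ only in the container name and the optional PASSWORD and PRELOAD environment variables. A small sketch that assembles the argument list from those options is shown below; the helper name and its defaults are hypothetical, while the flags themselves are the ones quoted in this section.

```python
def docker_run_args(name="server", tag="1.7.0", host_port=8080,
                    password=None, preload=False):
    """Assemble the `docker run` argument list for a HugeGraph Server container."""
    args = ["docker", "run", "-itd", f"--name={name}", "-p", f"{host_port}:8080"]
    if password is not None:
        # Enables auth mode and sets the admin password.
        args += ["-e", f"PASSWORD={password}"]
    if preload:
        # Loads the built-in example graph on initialization.
        args += ["-e", "PRELOAD=true"]
    args.append(f"hugegraph/hugegraph:{tag}")
    return args


if __name__ == "__main__":
    print(" ".join(docker_run_args(password="xxx")))
```

The list form can be passed directly to subprocess.run without shell quoting concerns.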

If you use docker desktop, you can set the option like:

Also, if we want to manage other HugeGraph-related instances in one file, we can deploy with docker-compose using the command docker-compose up -d (you can configure only the server). Here is an example docker-compose.yml:

version: '3'
services:
  server:
    image: hugegraph/hugegraph:1.7.0
    container_name: server
    environment:
      - PASSWORD=xxx
      # PASSWORD is an option to enable auth mode with the password you set.
      # - PRELOAD=true
      # PRELOAD is an option to preload a built-in sample graph when initializing.
    ports:
      - 8080:8080

Note:

The Docker image of HugeGraph is a convenience release for starting quickly, not an official distribution artifact. You can find more details in the ASF Release Distribution Policy.

We recommend using a release tag (like 1.7.0/1.x.0) for the stable version. Use the latest tag to try the newest features in development.

3.2 Download the binary tarball

You could download the binary tarball from the download page of the ASF site like this:

# use the latest version, here is 1.7.0 for example

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}.tar.gz

tar zxf *hugegraph*.tar.gz

# (Optional) verify the integrity with SHA512 (recommended)

shasum -a 512 apache-hugegraph-incubating-{version}.tar.gz

curl https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}.tar.gz.sha512
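
The two commands above print the local and the published digest but leave the comparison to the reader. A minimal sketch of that comparison follows; it assumes the published .sha512 text contains the digest as one unbroken 128-character hex string (the sha512sum style), and the function names are hypothetical.

```python
import hashlib
import re


def sha512_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-512 hex digest of a file without loading it all at once."""
    digest = hashlib.sha512()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def expected_digest(sha512_text):
    """Pull the first 128-hex-digit token out of the published checksum text."""
    match = re.search(r"\b[0-9a-fA-F]{128}\b", sha512_text)
    if match is None:
        raise ValueError("no SHA-512 digest found")
    return match.group(0).lower()


def verify(path, sha512_text):
    """True if the file's digest matches the published one."""
    return sha512_of_file(path) == expected_digest(sha512_text)
```

A checksum only detects corruption; verifying the signature against the project KEYS file, as described on the download page, is still needed to establish authenticity.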

3.3 Source code compilation

Please ensure that the wget/curl commands are installed before compiling the source code

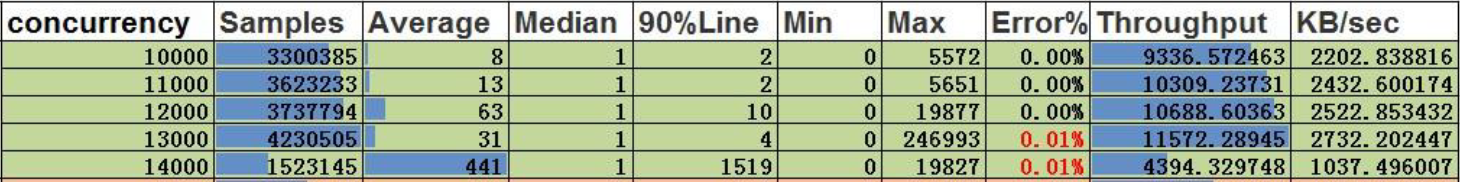

Download the HugeGraph source code in either of the following two ways (the same applies to the other HugeGraph repos/modules):

- download the stable/release version from the ASF site

- clone the unstable/latest version by GitBox(ASF) or GitHub

# Way 1. download release package from the ASF site

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}-src.tar.gz

tar zxf *hugegraph*.tar.gz

# (Optional) verify the integrity with SHA512 (recommended)

shasum -a 512 apache-hugegraph-incubating-{version}-src.tar.gz

curl https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}-src.tar.gz.sha512

# Way 2. clone the latest code with git (e.g. from GitHub)

git clone https://github.com/apache/hugegraph.git

Compile and generate tarball

cd *hugegraph

# (Optional) use the "-P stage" param if the build fails with the latest code (during a pre-release period)

mvn package -DskipTests -ntp

The execution log is as follows:

......

[INFO] Reactor Summary for hugegraph 1.5.0:

[INFO]

[INFO] hugegraph .......................................... SUCCESS [ 2.405 s]

[INFO] hugegraph-core ..................................... SUCCESS [ 13.405 s]

[INFO] hugegraph-api ...................................... SUCCESS [ 25.943 s]

[INFO] hugegraph-cassandra ................................ SUCCESS [ 54.270 s]

[INFO] hugegraph-scylladb ................................. SUCCESS [ 1.032 s]

[INFO] hugegraph-rocksdb .................................. SUCCESS [ 34.752 s]

[INFO] hugegraph-mysql .................................... SUCCESS [ 1.778 s]

[INFO] hugegraph-palo ..................................... SUCCESS [ 1.070 s]

[INFO] hugegraph-hbase .................................... SUCCESS [ 32.124 s]

[INFO] hugegraph-postgresql ............................... SUCCESS [ 1.823 s]

[INFO] hugegraph-dist ..................................... SUCCESS [ 17.426 s]

[INFO] hugegraph-example .................................. SUCCESS [ 1.941 s]

[INFO] hugegraph-test ..................................... SUCCESS [01:01 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

......

After successful execution, *hugegraph-*.tar.gz files will be generated in the hugegraph directory, which is the tarball generated by compilation.

Outdated tools

3.4 One-click deployment (Outdated)

HugeGraph-Tools provides a command-line tool for one-click deployment. With it, users can quickly download, decompress, configure, and start HugeGraphServer and HugeGraph-Hubble.

Of course, you should download the tarball of HugeGraph-Toolchain first.

# download toolchain binary package, it includes loader + tool + hubble

# please check the latest version (e.g. here is 1.7.0)

wget https://downloads.apache.org/hugegraph/1.7.0/apache-hugegraph-toolchain-incubating-1.7.0.tar.gz

tar zxf *hugegraph-*.tar.gz

# enter the tool's package

cd *hugegraph*/*tool*

Note: ${version} is the version number. For the latest version, refer to the Download page, where you can also download the package directly.

The general entry script for HugeGraph-Tools is bin/hugegraph. Users can run the help command to view its usage; only the one-click deployment command is introduced here.

bin/hugegraph deploy -v {hugegraph-version} -p {install-path} [-u {download-path-prefix}]

- {hugegraph-version}: the version of HugeGraphServer and HugeGraphStudio to deploy; see the conf/version-mapping.yaml file for version information.

- {install-path}: the installation directory of HugeGraphServer and HugeGraphStudio.

- {download-path-prefix}: optional; the download address of the HugeGraphServer and HugeGraphStudio tarballs. The default download URL is used if it is not provided.

For example, to start HugeGraph-Server and HugeGraphStudio version 0.6, write the above command as bin/hugegraph deploy -v 0.6 -p services.

4 Config

If you need to quickly start HugeGraph just for testing, then you only need to modify a few configuration items (see next section).

For details, refer to the configuration document and the introduction to configuration items.

5 Startup

5.1 Use a startup script to startup

Startup is divided into “first startup” and “non-first startup”. The distinction exists because the backend database must be initialized before the first startup, after which the service is started.

After the service has been stopped manually, or when it needs to be restarted for other reasons, you can start it directly because the backend database is persistent.

When HugeGraphServer starts, it will connect to the backend storage and try to check the version number of the backend storage. If the backend is not initialized or the backend has been initialized but the version does not match (old version data), HugeGraphServer will fail to start and give an error message.

If you need to access HugeGraphServer externally, modify the restserver.url configuration item in rest-server.properties (default is http://127.0.0.1:8080), changing it to the machine name or IP address.

Since the configuration (hugegraph.properties) and startup steps required by various backends are slightly different, the following will introduce the configuration and startup of each backend one by one.

Note: Configure Server Authentication before starting HugeGraphServer if you need Auth mode (especially for production or public network environments).

5.1.1 Distributed Storage (HStore)


Distributed storage is a new feature introduced after HugeGraph 1.5.0, which implements distributed data storage and computation based on HugeGraph-PD and HugeGraph-Store components.

To use the distributed storage engine, you need to deploy HugeGraph-PD and HugeGraph-Store first. See HugeGraph-PD Quick Start and HugeGraph-Store Quick Start.

After ensuring that both PD and Store services are started, modify the hugegraph.properties configuration of HugeGraph-Server:

backend=hstore

serializer=binary

task.scheduler_type=distributed

# PD service address, multiple PD addresses are separated by commas, configure PD's RPC port

pd.peers=127.0.0.1:8686,127.0.0.1:8687,127.0.0.1:8688

# Simple example (with authentication)

gremlin.graph=org.apache.hugegraph.auth.HugeFactoryAuthProxy

# Specify storage backend hstore

backend=hstore

serializer=binary

store=hugegraph

# Specify the task scheduler (for versions 1.7.0 and earlier, hstore storage is required)

task.scheduler_type=distributed

# pd config

pd.peers=127.0.0.1:8686

Then enable PD discovery in rest-server.properties (required for every HugeGraph-Server node):

usePD=true

# notice: must have this conf in 1.7.0

pd.peers=127.0.0.1:8686,127.0.0.1:8687,127.0.0.1:8688

# If auth is needed

# auth.authenticator=org.apache.hugegraph.auth.StandardAuthenticator
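
Before running init-store, it can help to confirm that the edited file really contains the HStore settings listed above. The sketch below is a simplified checker: it reads only plain key=value lines (not the full Java .properties syntax), and all function names are hypothetical.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and '#' comments."""
    props = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def parse_pd_peers(value):
    """Split a comma-separated pd.peers value into (host, port) pairs."""
    peers = []
    for item in value.split(","):
        host, _, port = item.strip().rpartition(":")
        peers.append((host, int(port)))
    return peers


def check_hstore(props):
    """Return a list of problems; an empty list means the settings match."""
    expected = {
        "backend": "hstore",
        "serializer": "binary",
        "task.scheduler_type": "distributed",
    }
    problems = [
        f"{key} should be {value!r}, found {props.get(key)!r}"
        for key, value in expected.items()
        if props.get(key) != value
    ]
    if not props.get("pd.peers"):
        problems.append("pd.peers is missing")
    return problems
```

The same parser can be pointed at rest-server.properties to confirm that usePD and pd.peers are present on every server node.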

If configuring multiple HugeGraph-Server nodes, you need to modify the rest-server.properties configuration file for each node, for example:

Node 1 (Master node):

usePD=true

restserver.url=http://127.0.0.1:8081

gremlinserver.url=http://127.0.0.1:8181

pd.peers=127.0.0.1:8686

rpc.server_host=127.0.0.1

rpc.server_port=8091

server.id=server-1

server.role=master

Node 2 (Worker node):

usePD=true

restserver.url=http://127.0.0.1:8082

gremlinserver.url=http://127.0.0.1:8182

pd.peers=127.0.0.1:8686

rpc.server_host=127.0.0.1

rpc.server_port=8092

server.id=server-2

server.role=worker

Also, you need to modify the port configuration in gremlin-server.yaml for each node:

Node 1:

host: 127.0.0.1

port: 8181

Node 2:

host: 127.0.0.1

port: 8182
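
The two node examples above follow a regular pattern: each port is a base value plus the node number. The sketch below generates the rest-server.properties content for a node under that assumption; the base ports (8080, 8180, 8090) are inferred from the two examples only, and the function names are hypothetical.

```python
def node_settings(index, role, host="127.0.0.1", pd_peers="127.0.0.1:8686"):
    """Settings for the index-th HugeGraph-Server node (1-based)."""
    return {
        "usePD": "true",
        "restserver.url": f"http://{host}:{8080 + index}",
        "gremlinserver.url": f"http://{host}:{8180 + index}",
        "pd.peers": pd_peers,
        "rpc.server_host": host,
        "rpc.server_port": str(8090 + index),
        "server.id": f"server-{index}",
        "server.role": role,
    }


def render_properties(settings):
    """Render a settings dict as key=value lines."""
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"


if __name__ == "__main__":
    print(render_properties(node_settings(1, "master")))
    print(render_properties(node_settings(2, "worker")))
```

The port in gremlinserver.url must match the port set in that node's gremlin-server.yaml, which is why both are derived from the same index here.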

Initialize the database:

cd *hugegraph-${version}

bin/init-store.sh

Start the Server:

The startup sequence for using the distributed storage engine is:

- Start HugeGraph-PD

- Start HugeGraph-Store

- Initialize the database (only for the first time)

- Start HugeGraph-Server

Verify that the service is started properly:

curl http://localhost:8081/graphs

# Should return: {"graphs":["hugegraph"]}

The sequence to stop the services should be the reverse of the startup sequence:

- Stop HugeGraph-Server

- Stop HugeGraph-Store

- Stop HugeGraph-PD
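
The ordering rules above are easy to encode so that a wrapper script cannot get them wrong. The following sketch keeps the startup order in one list, derives the shutdown order from it, and checks a /graphs response body for the expected graph name; the names are hypothetical and no process is actually started.

```python
import json

# Startup order for the distributed storage engine, as listed above.
STARTUP_ORDER = ["HugeGraph-PD", "HugeGraph-Store", "HugeGraph-Server"]


def shutdown_order(startup_order=STARTUP_ORDER):
    """Services are stopped in the reverse of the startup order."""
    return list(reversed(startup_order))


def graph_is_listed(response_body, graph="hugegraph"):
    """True if the /graphs response body lists the given graph name."""
    return graph in json.loads(response_body).get("graphs", [])
```

Database initialization is not a service, so it is left out of the list; it runs once, after the Store is up and before the first Server start.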

5.1.2 Memory


Update hugegraph.properties

backend=memory

serializer=text

The data of the Memory backend is stored in memory and cannot be persisted. It does not need to initialize the backend. This is the only backend that does not require initialization.

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

The prompted url is the same as the restserver.url configured in rest-server.properties

5.1.3 RocksDB / ToplingDB


RocksDB is an embedded database that does not require manual installation and deployment. GCC version >= 4.3.0 (GLIBCXX_3.4.10) is required; if yours is older, upgrade GCC in advance.

Update hugegraph.properties

backend=rocksdb

serializer=binary

rocksdb.data_path=.

rocksdb.wal_path=.
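
Switching backends means changing a few keys in an existing hugegraph.properties while leaving everything else alone. The sketch below applies the RocksDB values shown above to the text of such a file, replacing keys that exist and appending the ones that do not. It handles plain key=value lines only, and the function name is hypothetical.

```python
ROCKSDB_SETTINGS = {
    "backend": "rocksdb",
    "serializer": "binary",
    "rocksdb.data_path": ".",
    "rocksdb.wal_path": ".",
}


def set_properties(text, updates):
    """Return `text` with the given keys updated; comments and other keys are kept."""
    remaining = dict(updates)
    output = []
    for line in text.splitlines():
        stripped = line.strip()
        key = stripped.partition("=")[0].strip()
        if stripped and not stripped.startswith("#") and key in remaining:
            # Replace the first occurrence of each key.
            output.append(f"{key}={remaining.pop(key)}")
        else:
            output.append(line)
    # Append any keys that were not present in the file.
    output.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(output) + "\n"
```

If a key appears more than once in the file, only its first occurrence is rewritten, so duplicated keys should be cleaned up by hand.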

Initialize the database (required on the first startup, or a new configuration was manually added under ‘conf/graphs/’)

cd *hugegraph-${version}

bin/init-store.sh

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

ToplingDB (Beta): As a high-performance alternative to RocksDB, please refer to the configuration guide: ToplingDB Quick Start

5.1.4 Cassandra

⚠️ Deprecated: This backend has been removed starting from HugeGraph 1.7.0. If you need to use it, please refer to version 1.5.x documentation.


Users need to install Cassandra themselves; version 3.0 or above is required (download link).

Update hugegraph.properties

backend=cassandra

serializer=cassandra

# cassandra backend config

cassandra.host=localhost

cassandra.port=9042

cassandra.username=

cassandra.password=

#cassandra.connect_timeout=5

#cassandra.read_timeout=20

#cassandra.keyspace.strategy=SimpleStrategy

#cassandra.keyspace.replication=3

Initialize the database (required on the first startup, or a new configuration was manually added under ‘conf/graphs/’)

cd *hugegraph-${version}

bin/init-store.sh

Initing HugeGraph Store...

2017-12-01 11:26:51 1424 [main] [INFO ] org.apache.hugegraph.HugeGraph [] - Opening backend store: 'cassandra'

2017-12-01 11:26:52 2389 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph, try init keyspace later

2017-12-01 11:26:52 2472 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph, try init keyspace later

2017-12-01 11:26:52 2557 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph, try init keyspace later

2017-12-01 11:26:53 2797 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_graph

2017-12-01 11:26:53 2945 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_schema

2017-12-01 11:26:53 3044 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_index

2017-12-01 11:26:53 3046 [pool-3-thread-1] [INFO ] org.apache.hugegraph.backend.Transaction [] - Clear cache on event 'store.init'

2017-12-01 11:26:59 9720 [main] [INFO ] org.apache.hugegraph.HugeGraph [] - Opening backend store: 'cassandra'

2017-12-01 11:27:00 9805 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph1, try init keyspace later

2017-12-01 11:27:00 9886 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph1, try init keyspace later

2017-12-01 11:27:00 9955 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph1, try init keyspace later

2017-12-01 11:27:00 10175 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_graph

2017-12-01 11:27:00 10321 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_schema

2017-12-01 11:27:00 10413 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_index

2017-12-01 11:27:00 10413 [pool-3-thread-1] [INFO ] org.apache.hugegraph.backend.Transaction [] - Clear cache on event 'store.init'

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

5.1.5 ScyllaDB

⚠️ Deprecated: This backend has been removed starting from HugeGraph 1.7.0. If you need to use it, please refer to version 1.5.x documentation.


Users need to install ScyllaDB themselves; version 2.1 or above is recommended (download link).

Update hugegraph.properties

backend=scylladb

serializer=scylladb

# cassandra backend config

cassandra.host=localhost

cassandra.port=9042

cassandra.username=

cassandra.password=

#cassandra.connect_timeout=5

#cassandra.read_timeout=20

#cassandra.keyspace.strategy=SimpleStrategy

#cassandra.keyspace.replication=3

Since ScyllaDB is itself an “optimized version” of Cassandra, users who do not have ScyllaDB installed can also use Cassandra directly as the backend storage: change backend and serializer to scylladb, and point the host and port at the seeds and port of the Cassandra cluster. This works but is not recommended, because it does not take advantage of ScyllaDB itself.

Initialize the database (required on the first startup, or a new configuration was manually added under ‘conf/graphs/’)

cd *hugegraph-${version}

bin/init-store.sh

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

5.1.6 HBase


Users need to install HBase themselves; version 2.0 or above is required (download link).

Update hugegraph.properties

backend=hbase

serializer=hbase

# hbase backend config

hbase.hosts=localhost

hbase.port=2181

# Note: recommend to modify the HBase partition number by the actual/env data amount & RS amount before init store

# it may influence the loading speed a lot

#hbase.enable_partition=true

#hbase.vertex_partitions=10

#hbase.edge_partitions=30

Initialize the database (required on the first startup, or a new configuration was manually added under ‘conf/graphs/’)

cd *hugegraph-${version}

bin/init-store.sh

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

For more backend configurations, please refer to the introduction to configuration options.

5.1.7 MySQL

⚠️ Deprecated: This backend has been removed starting from HugeGraph 1.7.0. If you need to use it, please refer to version 1.5.x documentation.


Because MySQL is licensed under the GPL and incompatible with the Apache License, users must install MySQL themselves, download link

Download the MySQL driver package, such as mysql-connector-java-8.0.30.jar, and place it in the lib directory of HugeGraph-Server.

Update hugegraph.properties to configure the database URL, username, and password.

store is the database name; it will be created automatically if it doesn’t exist.

backend=mysql

serializer=mysql

store=hugegraph

# mysql backend config

jdbc.driver=com.mysql.cj.jdbc.Driver

jdbc.url=jdbc:mysql://127.0.0.1:3306

jdbc.username=

jdbc.password=

jdbc.reconnect_max_times=3

jdbc.reconnect_interval=3

jdbc.ssl_mode=false

Initialize the database (required for first startup or when manually adding new configurations to conf/graphs/)

cd *hugegraph-${version}

bin/init-store.sh

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

5.1.8 Create an example graph when startup

Pass the -p true argument to the start script to enable preload, which creates a sample graph at startup.

bin/start-hugegraph.sh -p true

Starting HugeGraphServer in daemon mode...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)......OK

Then request HugeGraphServer through the RESTful API; the response should look like this:

> curl "http://localhost:8080/graphs/hugegraph/graph/vertices" | gunzip

{"vertices":[{"id":"2:lop","label":"software","type":"vertex","properties":{"name":"lop","lang":"java","price":328}},{"id":"1:josh","label":"person","type":"vertex","properties":{"name":"josh","age":32,"city":"Beijing"}},{"id":"1:marko","label":"person","type":"vertex","properties":{"name":"marko","age":29,"city":"Beijing"}},{"id":"1:peter","label":"person","type":"vertex","properties":{"name":"peter","age":35,"city":"Shanghai"}},{"id":"1:vadas","label":"person","type":"vertex","properties":{"name":"vadas","age":27,"city":"Hongkong"}},{"id":"2:ripple","label":"software","type":"vertex","properties":{"name":"ripple","lang":"java","price":199}}]}

This indicates the successful creation of the sample graph.
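As a rough way to check the preloaded data programmatically, the sketch below groups vertex names by label from a response like the one above. The function name `names_by_label` and the trimmed `SAMPLE` payload are illustrative assumptions, not part of HugeGraph; only the field names (`vertices`, `label`, `properties.name`) come from the response shown above.

```python
import json
from collections import defaultdict


def names_by_label(response_text: str) -> dict:
    """Group vertex names by label from a /graph/vertices JSON response."""
    grouped = defaultdict(list)
    for vertex in json.loads(response_text)["vertices"]:
        grouped[vertex["label"]].append(vertex["properties"]["name"])
    # Sort names so the result does not depend on server ordering.
    return {label: sorted(names) for label, names in grouped.items()}


# Trimmed copy of the sample response (only the fields used here).
SAMPLE = json.dumps({"vertices": [
    {"id": "2:lop", "label": "software", "properties": {"name": "lop"}},
    {"id": "1:josh", "label": "person", "properties": {"name": "josh"}},
    {"id": "1:marko", "label": "person", "properties": {"name": "marko"}},
    {"id": "1:peter", "label": "person", "properties": {"name": "peter"}},
    {"id": "1:vadas", "label": "person", "properties": {"name": "vadas"}},
    {"id": "2:ripple", "label": "software", "properties": {"name": "ripple"}},
]})
```

With the sample graph loaded, the result should contain four `person` names and two `software` names.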

5.2 Use Docker to startup

In 3.1 Use Docker container, we introduced how to deploy hugegraph-server with Docker. The server can also preload an example graph when the corresponding parameter is set.

5.2.1 Use Cassandra as storage

⚠️ Deprecated: Cassandra backend has been removed starting from HugeGraph 1.7.0. If you need to use it, please refer to version 1.5.x documentation.


When using Docker, we can use Cassandra as the backend storage. We highly recommend using docker-compose directly to manage both the server and Cassandra.

The sample docker-compose.yml can be obtained on GitHub, and you can start it with docker-compose up -d. (If using Cassandra 4.0 as the backend storage, it takes approximately two minutes to initialize. Please be patient.)

version: "3"
services:
  graph:
    image: hugegraph/hugegraph
    container_name: cas-server
    ports:
      - 8080:8080
    environment:
      hugegraph.backend: cassandra
      hugegraph.serializer: cassandra
      hugegraph.cassandra.host: cas-cassandra
      hugegraph.cassandra.port: 9042
    networks:
      - ca-network
    depends_on:
      - cassandra
    healthcheck:
      test: ["CMD", "bin/gremlin-console.sh", "--", "-e", "scripts/remote-connect.groovy"]
      interval: 10s
      timeout: 30s
      retries: 3

  cassandra:
    image: cassandra:4
    container_name: cas-cassandra
    ports:
      - 7000:7000
      - 9042:9042
    security_opt:
      - seccomp:unconfined
    networks:
      - ca-network
    healthcheck:
      test: ["CMD", "cqlsh", "--execute", "describe keyspaces;"]
      interval: 10s
      timeout: 30s
      retries: 5

networks:
  ca-network:

volumes:
  hugegraph-data:

In this YAML file, Cassandra-related configuration parameters are passed as environment variables in the format hugegraph.<parameter_name>.

Specifically, the configuration file hugegraph.properties contains settings such as backend=xxx and cassandra.host=xxx. To pass these settings as environment variables, prepend hugegraph. to each key, giving hugegraph.backend and hugegraph.cassandra.host.

For the remaining configuration options, see section 4 Config.
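The prefixing rule described above can be sketched as a small helper that turns the lines of a hugegraph.properties file into environment entries for docker-compose. The helper name `properties_to_env` and the `EXAMPLE` text are hypothetical; the only rule taken from this document is that each key gets the `hugegraph.` prefix.

```python
def properties_to_env(properties_text: str) -> dict:
    """Map key=value lines to docker environment entries prefixed with 'hugegraph.'."""
    env = {}
    for raw_line in properties_text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments, as in a .properties file.
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        env["hugegraph." + key.strip()] = value.strip()
    return env


# Illustrative input mirroring the Cassandra settings used in the compose file.
EXAMPLE = """
backend=cassandra
serializer=cassandra
# cassandra backend config
cassandra.host=cas-cassandra
cassandra.port=9042
"""
```

The resulting keys match the `environment` entries of the `graph` service in the compose file above.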

5.2.2 Create an example graph when starting a server

Set the environment variable PRELOAD=true when starting the container so that the startup script loads the sample data.

Use docker run

Run docker run -itd --name=server -p 8080:8080 -e PRELOAD=true hugegraph/hugegraph:1.7.0

Use docker-compose

Create docker-compose.yml as follows, setting the environment variable PRELOAD=true. example.groovy is a predefined script that preloads the sample data. If needed, you can mount your own example.groovy to change the preloaded data.

version: '3'
services:
  server:
    image: hugegraph/hugegraph:1.7.0
    container_name: server
    environment:
      - PRELOAD=true
      - PASSWORD=xxx
    ports:
      - 8080:8080

Use docker-compose up -d to start the container.

Then request HugeGraphServer through the RESTful API; the response should look like this:

> curl "http://localhost:8080/graphs/hugegraph/graph/vertices" | gunzip

{"vertices":[{"id":"2:lop","label":"software","type":"vertex","properties":{"name":"lop","lang":"java","price":328}},{"id":"1:josh","label":"person","type":"vertex","properties":{"name":"josh","age":32,"city":"Beijing"}},{"id":"1:marko","label":"person","type":"vertex","properties":{"name":"marko","age":29,"city":"Beijing"}},{"id":"1:peter","label":"person","type":"vertex","properties":{"name":"peter","age":35,"city":"Shanghai"}},{"id":"1:vadas","label":"person","type":"vertex","properties":{"name":"vadas","age":27,"city":"Hongkong"}},{"id":"2:ripple","label":"software","type":"vertex","properties":{"name":"ripple","lang":"java","price":199}}]}

This indicates the successful creation of the sample graph.

6. Access server

6.1 Service startup status check

Use jps to check that the service process is running.

Alternatively, send a curl request to the RESTful API:

echo `curl -o /dev/null -s -w %{http_code} "http://localhost:8080/graphs/hugegraph/graph/vertices"`

A return code of 200 means the server has started normally.
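The same status check can be scripted without curl. The following is a minimal sketch using only the Python standard library; the helper names `http_status` and `server_is_up` are assumptions made for illustration, and the URL is the one used in the curl command above.

```python
import urllib.error
import urllib.request

VERTICES_URL = "http://localhost:8080/graphs/hugegraph/graph/vertices"


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request, or 0 if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 401 or 404.
        return err.code
    except (urllib.error.URLError, OSError):
        return 0


def server_is_up(status: int) -> bool:
    """Interpret the status code the same way as the curl check: 200 means started."""
    return status == 200
```

Calling `server_is_up(http_status(VERTICES_URL))` mirrors the curl check; a non-200 status with authentication enabled may only mean that credentials are missing.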

6.2 Request Server

The RESTful API of HugeGraphServer includes various types of resources, typically including graph, schema, gremlin, traverser and task.

- graph contains vertices and edges

- schema contains vertexlabels, propertykeys, edgelabels, and indexlabels

- gremlin contains various Gremlin statements, such as g.V(), which can be executed synchronously or asynchronously

- traverser contains various advanced queries including shortest paths, intersections, N-step reachable neighbors, etc.

- task contains query and delete operations for asynchronous tasks

curl http://localhost:8080/graphs/hugegraph/graph/vertices

Explanation

Because a graph can contain many vertices and edges, the server compresses the response for list-type requests, such as getting all vertices or all edges. With plain curl you therefore get garbled characters; pipe the output to gunzip to decompress it. We recommend using the Chrome browser with the Restlet plugin to send HTTP requests for testing.

curl "http://localhost:8080/graphs/hugegraph/graph/vertices" | gunzip
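For clients that read the raw response bytes, the decompression step performed by gunzip can be sketched as follows. The function name `decode_body` is hypothetical; the sketch assumes the body is either gzip-compressed JSON or plain JSON and detects the former by the gzip magic bytes.

```python
import gzip
import json

GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of every gzip stream


def decode_body(body: bytes) -> dict:
    """Decompress the body if it is gzip data, then parse it as UTF-8 JSON."""
    if body[:2] == GZIP_MAGIC:
        body = gzip.decompress(body)
    return json.loads(body.decode("utf-8"))
```

Checking the magic bytes rather than always decompressing keeps the helper usable for endpoints that return uncompressed JSON.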

By default, HugeGraphServer only accepts local connections. To make it accessible from other machines, modify the configuration:

vim conf/rest-server.properties

restserver.url=http://0.0.0.0:8080

Response body of the vertex list request:

{

"vertices": [

{

"id": "2lop",

"label": "software",

"type": "vertex",

"properties": {

"price": [

{

"id": "price",

"value": 328

}

],

"name": [

{

"id": "name",

"value": "lop"

}

],

"lang": [

{

"id": "lang",

"value": "java"

}

]

}

},

{

"id": "1josh",

"label": "person",

"type": "vertex",

"properties": {

"name": [

{

"id": "name",

"value": "josh"

}

],

"age": [

{

"id": "age",

"value": 32

}

]

}

},

...

]

}

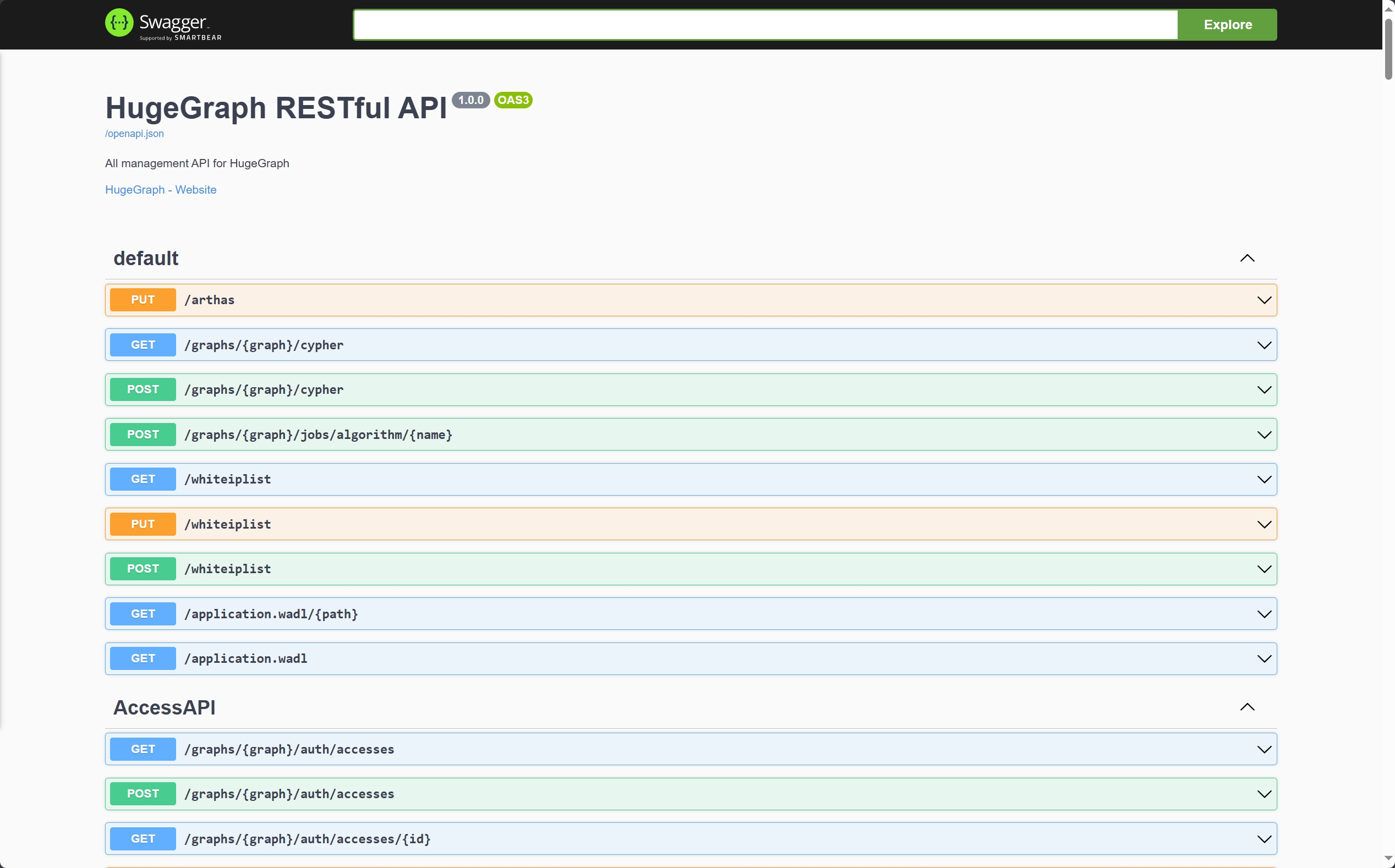

For the detailed API, please refer to RESTful-API

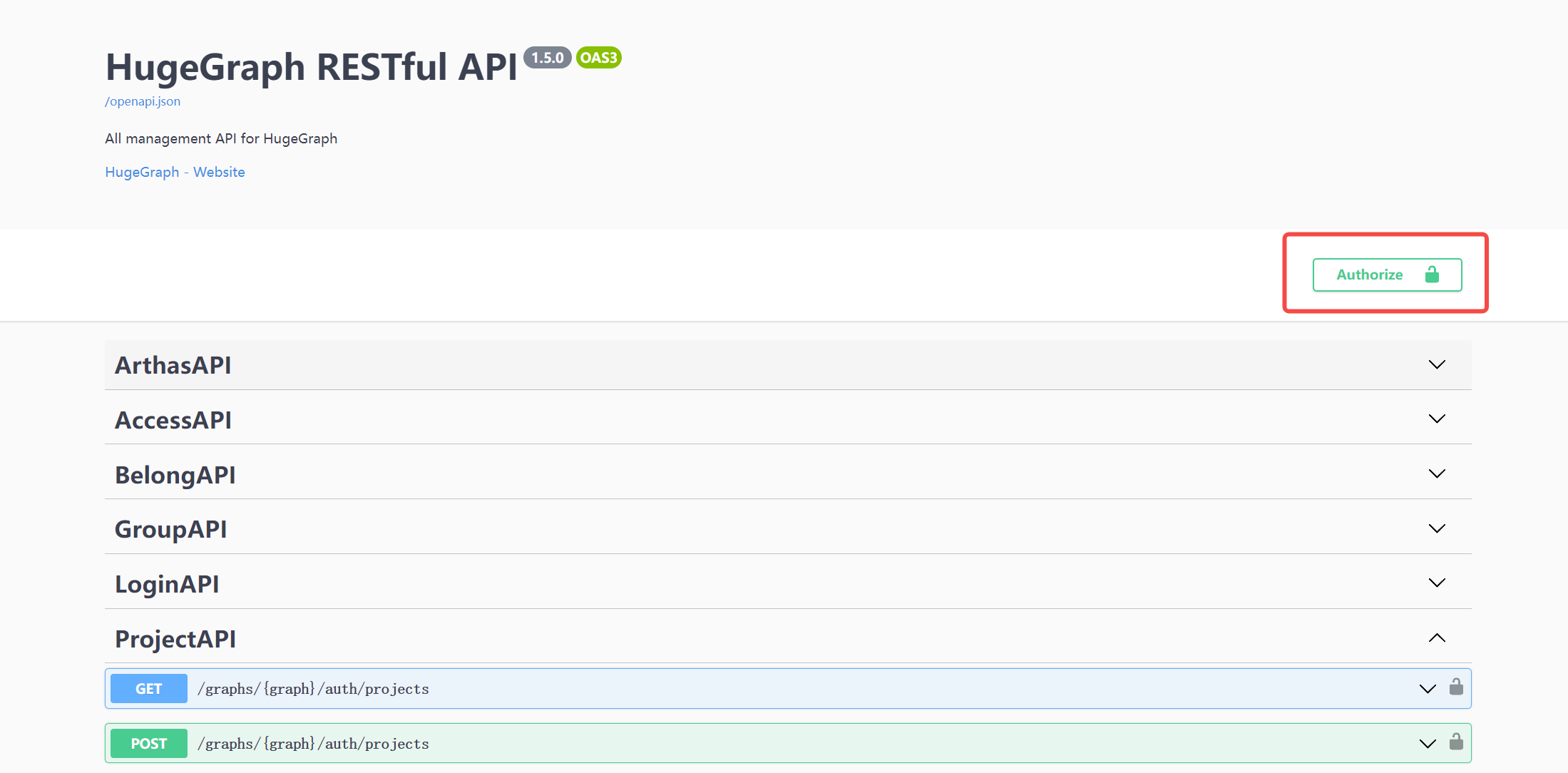

You can also visit localhost:8080/swagger-ui/index.html to check the API.

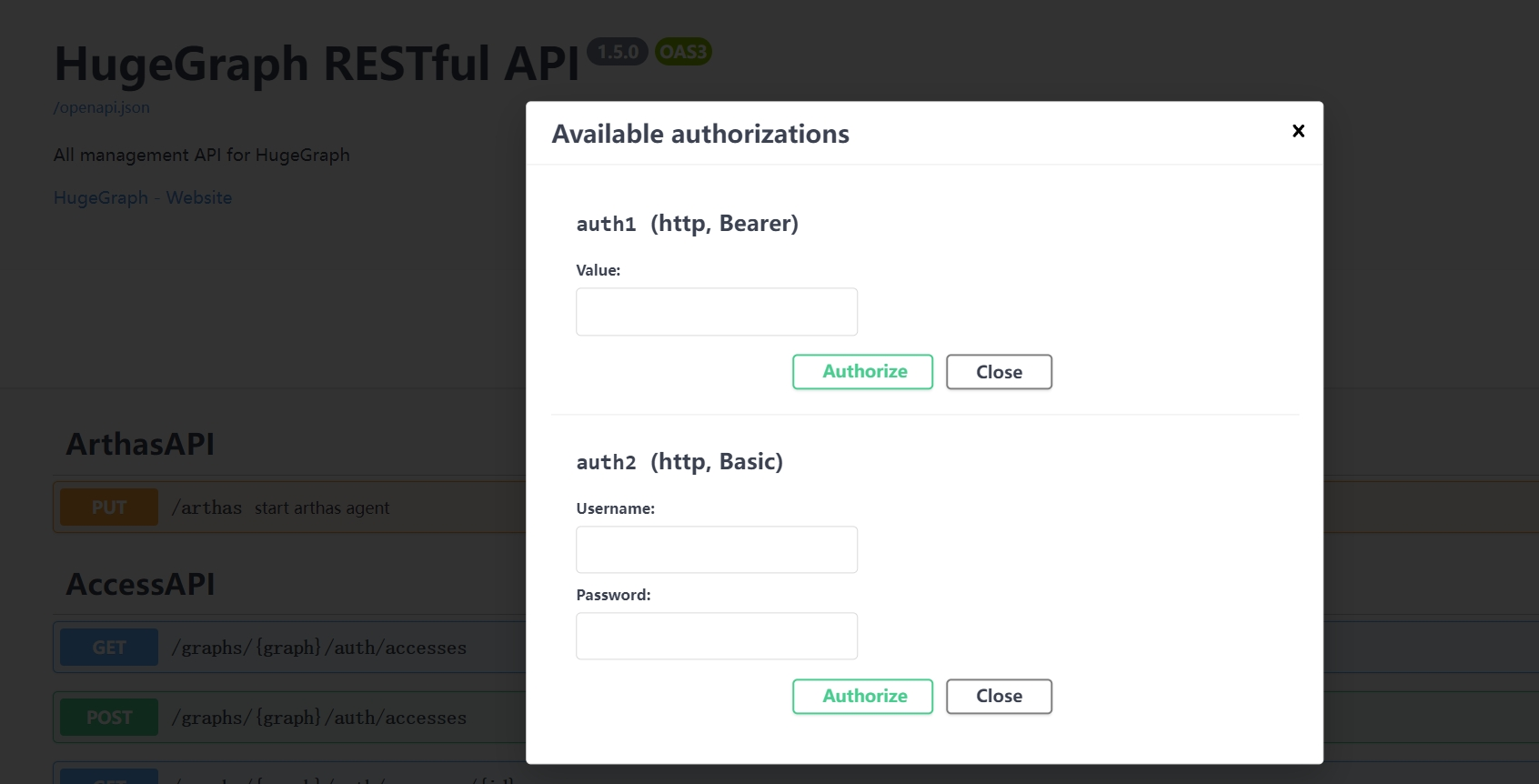

When using Swagger UI to debug the API provided by HugeGraph, if authentication is enabled on HugeGraph Server, you can enter authentication information on the Swagger page.

Currently, HugeGraph supports setting authentication information in two forms: Basic and Bearer.
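For reference, the two forms correspond to the standard HTTP `Authorization` header schemes. The sketch below builds both header values; the helper names are assumptions for illustration, and how a Bearer token is obtained from HugeGraph is not covered here.

```python
import base64


def basic_auth_header(username: str, password: str) -> dict:
    """Build an HTTP Basic header: base64 of 'username:password'."""
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def bearer_auth_header(token: str) -> dict:
    """Build an HTTP Bearer header from an already issued token."""
    return {"Authorization": f"Bearer {token}"}
```

Either dictionary can be passed as request headers by an HTTP client when calling the RESTful API.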

7 Stop Server

cd *hugegraph-${version}

bin/stop-hugegraph.sh

8 Debug Server with IntelliJ IDEA

Please refer to Setup Server in IDEA

3.1.2 - HugeGraph-PD Quick Start

1 HugeGraph-PD Overview

HugeGraph-PD (Placement Driver) is the metadata management component of HugeGraph’s distributed version, responsible for managing the distribution of graph data and coordinating storage nodes. It plays a central role in distributed HugeGraph, maintaining cluster status and coordinating HugeGraph-Store storage nodes.

2 Prerequisites

2.1 Requirements

- Operating System: Linux or macOS (Windows has not been fully tested)

- Java version: ≥ 11

- Maven version: ≥ 3.5.0

3 Deployment

There are two ways to deploy the HugeGraph-PD component:

- Method 1: Download the tar package

- Method 2: Compile from source

3.1 Download the tar package

Download the latest version of HugeGraph-PD from the Apache HugeGraph official download page:

# Replace {version} with the latest version number, e.g., 1.5.0

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}.tar.gz

tar zxf apache-hugegraph-incubating-{version}.tar.gz

cd apache-hugegraph-incubating-{version}/apache-hugegraph-pd-incubating-{version}

3.2 Compile from source

# 1. Clone the source code

git clone https://github.com/apache/hugegraph.git

# 2. Build the project

cd hugegraph

mvn clean install -DskipTests=true

# 3. After successful compilation, the PD module build artifacts will be located at

# apache-hugegraph-incubating-{version}/apache-hugegraph-pd-incubating-{version}

# target/apache-hugegraph-incubating-{version}.tar.gz

4 Configuration

The main configuration file for PD is conf/application.yml. Here are the key configuration items:

spring:
  application:
    name: hugegraph-pd

grpc:
  # gRPC port for cluster mode
  port: 8686
  host: 127.0.0.1

server:
  # REST service port
  port: 8620

pd:
  # Storage path
  data-path: ./pd_data
  # Auto-expansion check cycle (seconds)
  patrol-interval: 1800
  # Initial store list, stores in the list are automatically activated
  initial-store-count: 1
  # Store configuration information, format is IP:gRPC port
  initial-store-list: 127.0.0.1:8500

raft:
  # Cluster mode
  address: 127.0.0.1:8610
  # Raft addresses of all PD nodes in the cluster
  peers-list: 127.0.0.1:8610

store:
  # Store offline time (seconds). After this time, the store is considered permanently unavailable
  max-down-time: 172800
  # Whether to enable store monitoring data storage
  monitor_data_enabled: true
  # Monitoring data interval
  monitor_data_interval: 1 minute
  # Monitoring data retention time
  monitor_data_retention: 1 day
  initial-store-count: 1

partition:
  # Default number of replicas per partition
  default-shard-count: 1
  # Default maximum number of replicas per machine
  store-max-shard-count: 12

For multi-node deployment, you need to modify the port and address configurations for each node to ensure proper communication between nodes.
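To illustrate which values differ per node, the sketch below derives the settings for several PD nodes running on one host. It is only an assumption-based example: the function name `pd_node_settings` is hypothetical, the base ports are the defaults from the configuration above, and incrementing each port by one per node is a convention chosen for local testing. In a real cluster the nodes usually run on different hosts and can keep the same ports.

```python
def pd_node_settings(node_count: int, host: str = "127.0.0.1") -> list:
    """Return per-node overrides for application.yml, one dict per PD node."""
    # Every node lists the Raft addresses of all PD nodes in the cluster.
    peers = ",".join(f"{host}:{8610 + i}" for i in range(node_count))
    return [
        {
            "grpc.port": 8686 + i,
            "server.port": 8620 + i,
            "raft.address": f"{host}:{8610 + i}",
            "raft.peers-list": peers,
        }
        for i in range(node_count)
    ]
```

Note that `raft.peers-list` is identical on all nodes, while the gRPC port, REST port, and Raft address must be unique per node when they share a host.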

5 Start and Stop

5.1 Start PD

In the PD installation directory, execute:

./bin/start-hugegraph-pd.sh

After successful startup, you can see logs similar to the following in logs/hugegraph-pd-stdout.log:

2024-xx-xx xx:xx:xx [main] [INFO] o.a.h.p.b.HugePDServer - Started HugePDServer in x.xxx seconds (JVM running for x.xxx)

5.2 Stop PD

In the PD installation directory, execute:

./bin/stop-hugegraph-pd.sh

6 Verification

Confirm that the PD service is running properly:

curl http://localhost:8620/actuator/health

If it returns {"status":"UP"}, it indicates that the PD service has been successfully started.
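In a script, the health response can be interpreted with a few lines of Python. This is a minimal sketch; the function name `is_service_up` is an assumption, and it only looks at the `status` field shown above.

```python
import json


def is_service_up(health_body: str) -> bool:
    """Return True only if the health response is a JSON object with status 'UP'."""
    try:
        return json.loads(health_body).get("status") == "UP"
    except (json.JSONDecodeError, AttributeError):
        # Not JSON at all, or JSON that is not an object.
        return False
```

Treating malformed responses as "not up" keeps the check safe to use in startup scripts.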

Additionally, you can verify the status of the Store node by querying the PD API:

curl http://localhost:8620/v1/stores

If the Store is configured successfully, the response should contain the status information of the current node, where the state “Up” indicates that the node is running normally. Only the response for a single-node configuration is shown here. If three nodes are configured and running, the stores list in the response should contain three storeId values, and the Up count in stateCountMap, numOfService, and numOfNormalService should all be 3.

{

"message": "OK",

"data": {

"stores": [

{

"storeId": 8319292642220586694,

"address": "127.0.0.1:8500",

"raftAddress": "127.0.0.1:8510",

"version": "",

"state": "Up",

"deployPath": "/Users/{your_user_name}/hugegraph/apache-hugegraph-incubating-1.5.0/apache-hugegraph-store-incubating-1.5.0/lib/hg-store-node-1.5.0.jar",

"dataPath": "./storage",

"startTimeStamp": 1754027127969,

"registedTimeStamp": 1754027127969,

"lastHeartBeat": 1754027909444,

"capacity": 494384795648,

"available": 346535829504,

"partitionCount": 0,

"graphSize": 0,

"keyCount": 0,

"leaderCount": 0,

"serviceName": "127.0.0.1:8500-store",

"serviceVersion": "",

"serviceCreatedTimeStamp": 1754027127000,

"partitions": []

}

],

"stateCountMap": {

"Up": 1

},

"numOfService": 1,

"numOfNormalService": 1

},

"status": 0

}
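The checks described above can be sketched as a small function over the response. The function name `stores_all_up` and the trimmed `SAMPLE` payload are illustrative assumptions; the field names (`stores`, `stateCountMap`, `numOfService`, `numOfNormalService`) are taken from the response shown above.

```python
import json


def stores_all_up(response_text: str, expected_nodes: int) -> bool:
    """Check that the expected number of Store nodes is registered and Up."""
    data = json.loads(response_text)["data"]
    up_count = data.get("stateCountMap", {}).get("Up", 0)
    return (
        len(data.get("stores", [])) == expected_nodes
        and up_count == expected_nodes
        and data.get("numOfService") == expected_nodes
        and data.get("numOfNormalService") == expected_nodes
    )


# Trimmed copy of the single-node response (only the fields used here).
SAMPLE = json.dumps({
    "message": "OK",
    "data": {
        "stores": [{"storeId": 8319292642220586694, "state": "Up"}],
        "stateCountMap": {"Up": 1},
        "numOfService": 1,
        "numOfNormalService": 1,
    },
    "status": 0,
})
```

For the single-node response, `stores_all_up(SAMPLE, 1)` holds, while the same response fails the check for a three-node cluster.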

3.1.3 - HugeGraph-Store Quick Start

1 HugeGraph-Store Overview

HugeGraph-Store is the storage node component of HugeGraph’s distributed version, responsible for actually storing and managing graph data. It works in conjunction with HugeGraph-PD to form HugeGraph’s distributed storage engine, providing high availability and horizontal scalability.

2 Prerequisites

2.1 Requirements

- Operating System: Linux or macOS (Windows has not been fully tested)

- Java version: ≥ 11

- Maven version: ≥ 3.5.0

- Deployed HugeGraph-PD (for multi-node deployment)

3 Deployment

There are two ways to deploy the HugeGraph-Store component:

- Method 1: Download the tar package

- Method 2: Compile from source

3.1 Download the tar package

Download the latest version of HugeGraph-Store from the Apache HugeGraph official download page:

# Replace {version} with the latest version number, e.g., 1.5.0

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}.tar.gz

tar zxf apache-hugegraph-incubating-{version}.tar.gz

cd apache-hugegraph-incubating-{version}/apache-hugegraph-hstore-incubating-{version}

3.2 Compile from source

# 1. Clone the source code

git clone https://github.com/apache/hugegraph.git

# 2. Build the project

cd hugegraph

mvn clean install -DskipTests=true

# 3. After successful compilation, the Store module build artifacts will be located at

# apache-hugegraph-incubating-{version}/apache-hugegraph-hstore-incubating-{version}

# target/apache-hugegraph-incubating-{version}.tar.gz

4 Configuration

The main configuration file for Store is conf/application.yml. Here are the key configuration items:

pdserver:
  # PD service address, multiple PD addresses are separated by commas (configure PD's gRPC port)
  address: 127.0.0.1:8686

grpc:
  # gRPC service address
  host: 127.0.0.1
  port: 8500
  netty-server:
    max-inbound-message-size: 1000MB

raft:
  # raft cache queue size
  disruptorBufferSize: 1024
  address: 127.0.0.1:8510
  max-log-file-size: 600000000000
  # Snapshot generation time interval, in seconds
  snapshotInterval: 1800

server:
  # REST service address
  port: 8520

app:
  # Storage path, supports multiple paths separated by commas
  data-path: ./storage
  #raft-path: ./storage

spring:
  application:
    name: store-node-grpc-server
  profiles:
    active: default
    include: pd

logging:
  config: 'file:./conf/log4j2.xml'
  level:
    root: info

For multi-node deployment, you need to modify the following configurations for each Store node:

- grpc.port (RPC port) for each node

- raft.address (Raft protocol port) for each node

- server.port (REST port) for each node

- app.data-path (data storage path) for each node

5 Start and Stop

5.1 Start Store

Ensure that the PD service is already started, then in the Store installation directory, execute:

./bin/start-hugegraph-store.sh

After successful startup, you can see logs similar to the following in logs/hugegraph-store-server.log:

2024-xx-xx xx:xx:xx [main] [INFO] o.a.h.s.n.StoreNodeApplication - Started StoreNodeApplication in x.xxx seconds (JVM running for x.xxx)
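Because the Store depends on a running PD, a deployment script may want to wait for PD before launching the Store. The following is a generic polling sketch, not part of HugeGraph: `wait_until_up` is a hypothetical helper, and the `check` callable is assumed to wrap a request to the PD health endpoint (for example on port 8620).

```python
import time
from typing import Callable


def wait_until_up(
    check: Callable[[], bool],
    attempts: int = 30,
    delay_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call check() up to `attempts` times, pausing between tries; True once it succeeds."""
    for attempt in range(attempts):
        if check():
            return True
        # Do not sleep after the final failed attempt.
        if attempt < attempts - 1:
            sleep(delay_seconds)
    return False
```

The `sleep` parameter is injectable so the loop can be exercised in tests without real delays.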

5.2 Stop Store

In the Store installation directory, execute:

./bin/stop-hugegraph-store.sh

6 Multi-Node Deployment Example

Below is a configuration example for a three-node deployment:

6.1 Three-Node Configuration Reference

- 3 PD nodes
  - raft ports: 8610, 8611, 8612
  - rpc ports: 8686, 8687, 8688
  - rest ports: 8620, 8621, 8622

- 3 Store nodes
  - raft ports: 8510, 8511, 8512
  - rpc ports: 8500, 8501, 8502
  - rest ports: 8520, 8521, 8522

6.2 Store Node Configuration

For the three Store nodes, the main configuration differences are as follows:

Node A:

grpc:
  port: 8500
raft:
  address: 127.0.0.1:8510
server:
  port: 8520
app:
  data-path: ./storage-a

Node B:

grpc:
  port: 8501
raft:
  address: 127.0.0.1:8511
server:
  port: 8521
app:
  data-path: ./storage-b

Node C:

grpc:
  port: 8502
raft:
  address: 127.0.0.1:8512
server:
  port: 8522
app:
  data-path: ./storage-c

All nodes should point to the same PD cluster:

pdserver:
  address: 127.0.0.1:8686,127.0.0.1:8687,127.0.0.1:8688
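The per-node differences above follow a simple pattern, which the sketch below reproduces for a single-host test setup. The function names `store_node_settings` and `pd_addresses` are hypothetical, and the pattern (base port plus node index, data path suffixed with a letter) is inferred from the Node A/B/C examples rather than required by HugeGraph.

```python
def store_node_settings(index: int, host: str = "127.0.0.1") -> dict:
    """Return the settings that differ for Store node number `index` (0 = Node A)."""
    letter = chr(ord("a") + index)
    return {
        "grpc.port": 8500 + index,
        "raft.address": f"{host}:{8510 + index}",
        "server.port": 8520 + index,
        "app.data-path": f"./storage-{letter}",
    }


def pd_addresses(pd_count: int, host: str = "127.0.0.1") -> str:
    """Build the comma-separated pdserver.address value from the PD gRPC ports."""
    return ",".join(f"{host}:{8686 + i}" for i in range(pd_count))
```

Every Store node uses the same `pdserver.address` value, so only the four keys returned by `store_node_settings` vary between nodes.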

7 Verify Store Service

Confirm that the Store service is running properly:

curl http://localhost:8520/actuator/health

If it returns {"status":"UP"}, it indicates that the Store service has been successfully started.

Additionally, you can check the status of Store nodes in the cluster through the PD API:

curl http://localhost:8620/pd/api/v1/stores

3.2 - HugeGraph ToolChain

Testing Guide: For running toolchain tests locally, please refer to HugeGraph Toolchain Local Testing Guide

DeepWiki provides real-time updated project documentation with more comprehensive and accurate content, suitable for quickly understanding the latest project information.

📖 https://deepwiki.com/apache/hugegraph-toolchain

GitHub Access: https://github.com/apache/hugegraph-toolchain

3.2.1 - HugeGraph-Hubble Quick Start

1 HugeGraph-Hubble Overview

Note: The current version of Hubble does not yet provide Auth/Login interfaces or standalone protection; these will be added in a later release (> 1.5). Do not expose Hubble on a public or untrusted network, to avoid security issues (you can also use an IP & port whitelist plus HTTPS).

Testing Guide: For running HugeGraph-Hubble tests locally, please refer to HugeGraph Toolchain Local Testing Guide

HugeGraph-Hubble is HugeGraph’s one-stop visual analysis platform. It covers the whole process from data modeling, through efficient data import, to real-time and offline data analysis and unified graph management, and guides users through each step of building a graph application with wizards. It is designed to make the workflow smoother, lower the barrier to entry, and provide a more efficient and easy-to-use experience.

The platform mainly includes the following modules:

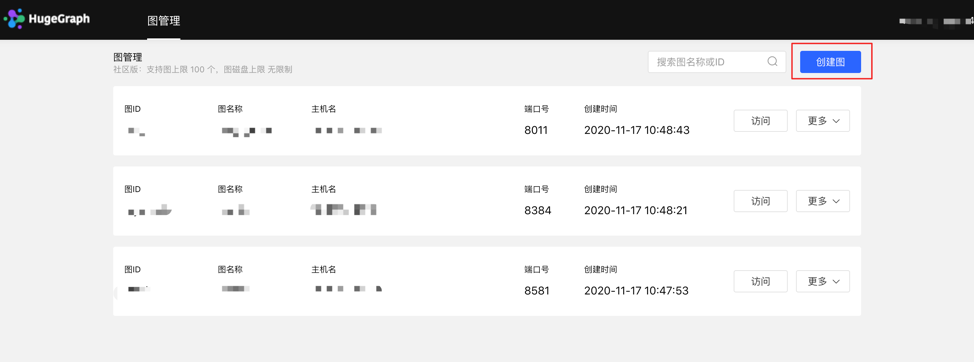

Graph Management

The graph management module creates graphs and connects the platform to graph data, providing unified management of multiple graphs, including graph access, editing, deletion, and query.

Metadata Modeling

The metadata modeling module builds and manages graph models by creating attribute libraries, vertex types, edge types, and index types. The platform provides two modes, list mode and graph mode, which display the metadata model in real time and make it more intuitive. It also provides metadata reuse across graphs, which avoids repeatedly creating the same metadata and greatly improves modeling efficiency and ease of use.

Graph Analysis

By entering statements in the graph traversal language Gremlin, you can perform high-performance general analysis of graph data, including customized multidimensional path queries on vertices. Results can be displayed in three forms: graph, table, and JSON, so that the presentation fits different usage scenarios. The module also keeps a history of executed statements and lets you save frequently used ones, which makes graph operations traceable and queries easy to reuse and share. Graph data can be exported in JSON format.

Task Management

For Gremlin tasks that need to traverse the whole graph, index creation and reconstruction,

and other time-consuming asynchronous tasks, the platform provides corresponding task management

functions to achieve unified management and result viewing of asynchronous tasks.

Data Import

“Note: The data import function is currently suitable for preliminary use. For formal data import,

please use hugegraph-loader, which has much better performance, stability, and functionality.”

Data import converts the user’s business data into the vertices and edges of a graph and inserts them into the graph database. The platform provides a wizard-style visual import module. Import tasks can be created and managed, and multiple import tasks can run in parallel to improve import performance. After entering an import task, you only need to follow the step-by-step prompts, upload files as needed, and fill in the content to import graph data. Resuming from breakpoints and retrying on errors are also supported, which reduces import costs and improves efficiency.

2 Deploy

There are three ways to deploy hugegraph-hubble

- Use Docker (Convenient for Test/Dev)

- Download the Toolchain binary package

- Source code compilation

2.1 Use docker (Convenient for Test/Dev)

Special Note: If you start hubble with Docker and hubble and the server run on the same host, do not set the graph hostname on the Hubble web page to localhost/127.0.0.1. Inside the container, that address refers to the hubble container itself rather than the host machine, so the connection to the server will fail.

If hubble and the server are in the same Docker network, we recommend using the container_name (server in our example) as the hostname and 8080 as the port. Alternatively, use the host IP as the hostname and the port that the host maps to the server.

To start hubble quickly, run docker run -itd --name=hubble -p 8088:8088 hugegraph/hubble:1.5.0.

Alternatively, you can use Docker Compose to start hubble. If hubble and the graph server are in the same Docker network, hubble can reach the server by its container name, without needing the host machine’s IP address.

Run docker-compose up -d with the following docker-compose.yml:

version: '3'
services:
  server:
    image: hugegraph/hugegraph:1.5.0
    container_name: server
    environment:
      - PASSWORD=xxx
    ports:
      - 8080:8080

  hubble:
    image: hugegraph/hubble:1.5.0
    container_name: hubble
    ports:
      - 8088:8088

Note:

The docker image of hugegraph-hubble is a convenience release for starting hugegraph-hubble quickly; it is not an official distribution artifact. You can find more details in the ASF Release Distribution Policy.

We recommend using a release tag (such as 1.5.0) for the stable version. Use the latest tag to try the newest features in development.

2.2 Download the Toolchain binary package

hubble is part of the toolchain project. First, download the binary tarball:

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-toolchain-incubating-{version}.tar.gz

tar -xvf apache-hugegraph-toolchain-incubating-{version}.tar.gz

cd apache-hugegraph-toolchain-incubating-{version}/apache-hugegraph-hubble-incubating-{version}

Run hubble

bin/start-hubble.sh

The startup output looks like this:

starting HugeGraphHubble ..............timed out with http status 502

2023-08-30 20:38:34 [main] [INFO ] o.a.h.HugeGraphHubble [] - Starting HugeGraphHubble v1.0.0 on cpu05 with PID xxx (~/apache-hugegraph-toolchain-incubating-1.0.0/apache-hugegraph-hubble-incubating-1.0.0/lib/hubble-be-1.0.0.jar started by $USER in ~/apache-hugegraph-toolchain-incubating-1.0.0/apache-hugegraph-hubble-incubating-1.0.0)

...

2023-08-30 20:38:38 [main] [INFO ] c.z.h.HikariDataSource [] - hugegraph-hubble-HikariCP - Start completed.

2023-08-30 20:38:41 [main] [INFO ] o.a.c.h.Http11NioProtocol [] - Starting ProtocolHandler ["http-nio-0.0.0.0-8088"]

2023-08-30 20:38:41 [main] [INFO ] o.a.h.HugeGraphHubble [] - Started HugeGraphHubble in 7.379 seconds (JVM running for 8.499)

Then use a web browser to access ip:8088 and you can see the Hubble page. You can stop the service using bin/stop-hubble.sh.

2.3 Source code compilation

Note: The frontend-maven-plugin has been added to hugegraph-hubble/hubble-be/pom.xml, so you do not need to install Node.js v16.x and yarn locally in advance to compile hubble. You can directly execute the following steps.

Download the toolchain source code.

git clone https://github.com/apache/hugegraph-toolchain.git

Compile hubble. It depends on the loader and client, so these dependencies must be built first (you can skip this step in later builds).

cd hugegraph-toolchain

sudo pip install -r hugegraph-hubble/hubble-dist/assembly/travis/requirements.txt

mvn install -pl hugegraph-client,hugegraph-loader -am -Dmaven.javadoc.skip=true -DskipTests -ntp

cd hugegraph-hubble

mvn -e compile package -Dmaven.javadoc.skip=true -Dmaven.test.skip=true -ntp

cd apache-hugegraph-hubble-incubating*

Run hubble with bin/start-hubble.sh

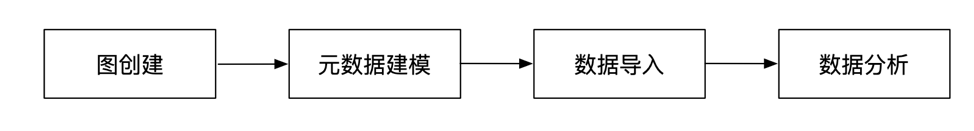

The module usage process of the platform is as follows:

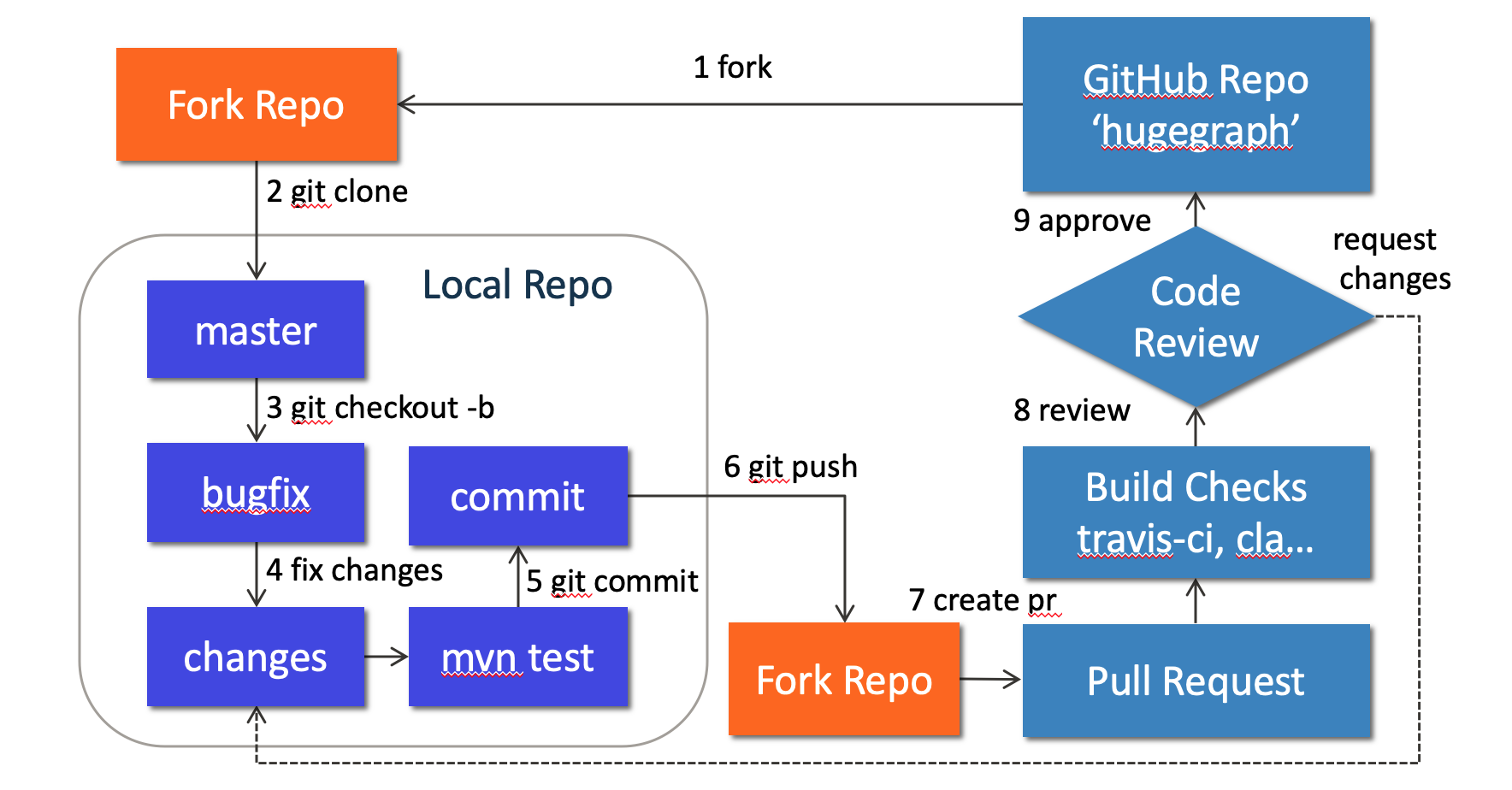

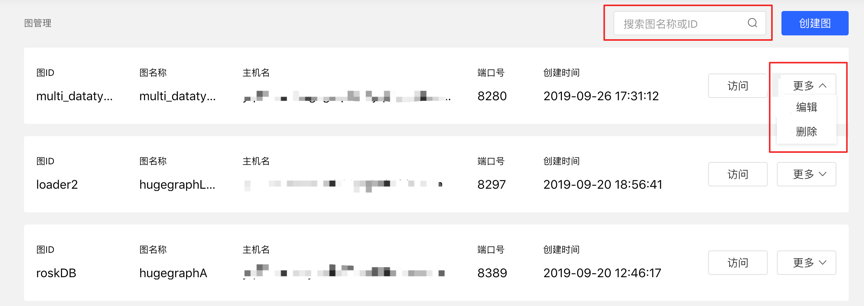

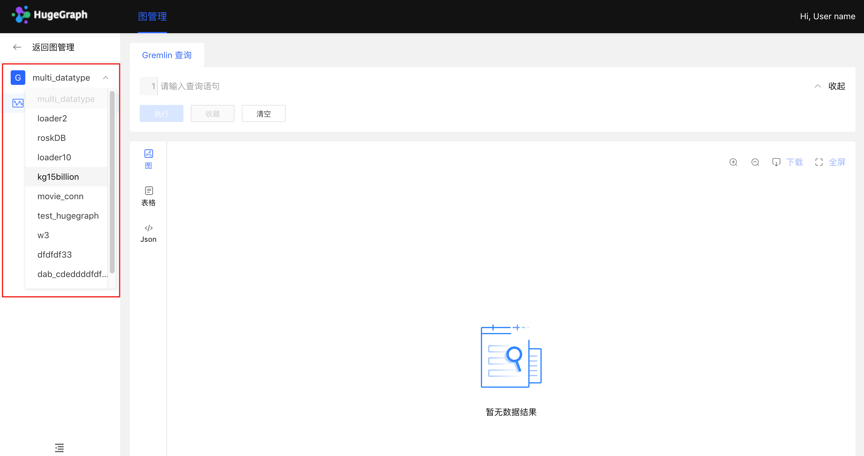

4.1 Graph Management

4.1.1 Graph creation

Under the graph management module, click [Create graph] and fill in the graph ID, graph name, host name, port number, username, and password to connect a graph. Multiple graphs can be connected this way.

Create graph by filling in the content as follows:

Special Note: If you start hubble with Docker and hubble and the server run on the same host, do not set the graph hostname on the Hubble web page to localhost/127.0.0.1. If hubble and the server are in the same Docker network, we recommend using the container_name (server in our example) as the hostname and 8080 as the port. Alternatively, use the host IP as the hostname and the port that the host maps to the server.

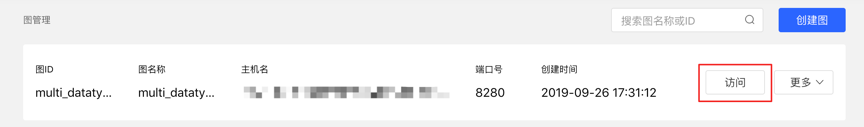

4.1.2 Graph Access

Graph access opens the graph space. After entering, you can perform operations such as multidimensional query analysis, metadata management, data import, and algorithm analysis on the graph.

4.1.3 Graph management

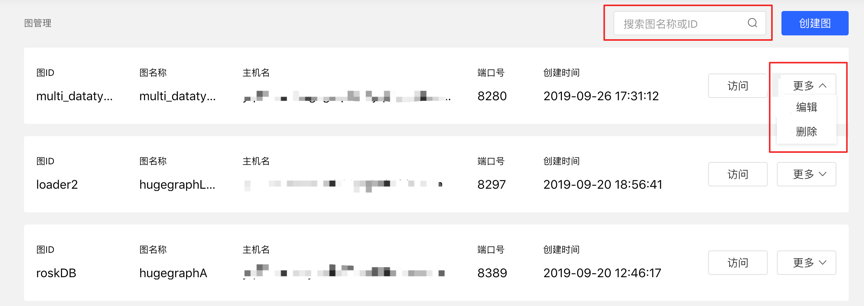

- Users can achieve unified management of graphs through overview, search, and information editing and deletion of single graphs.

- Search range: You can search for the graph name and ID.

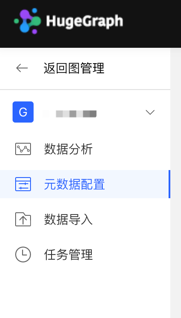

4.2 Metadata Modeling

4.2.1 Module entry

Left navigation:

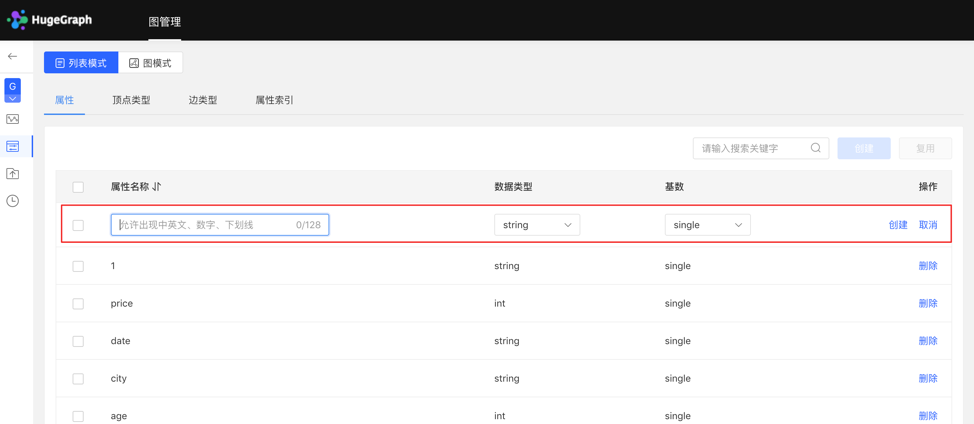

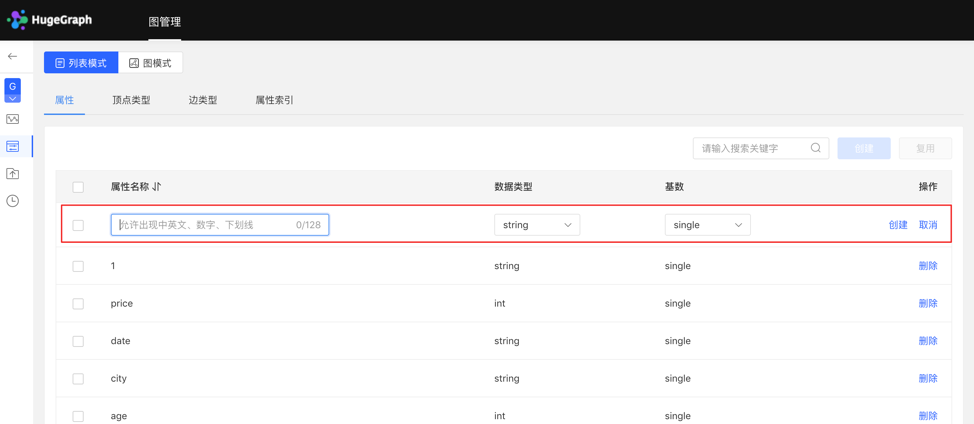

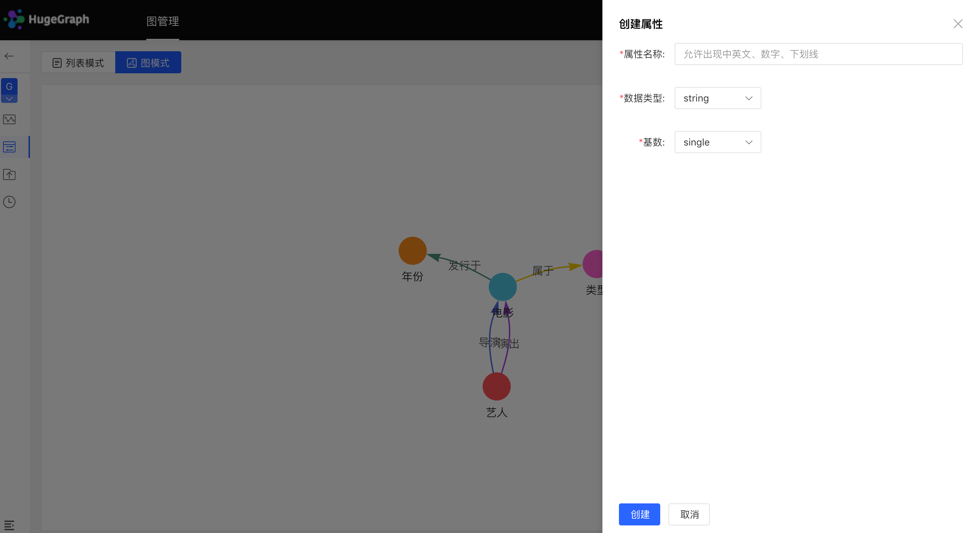

4.2.2 Property type

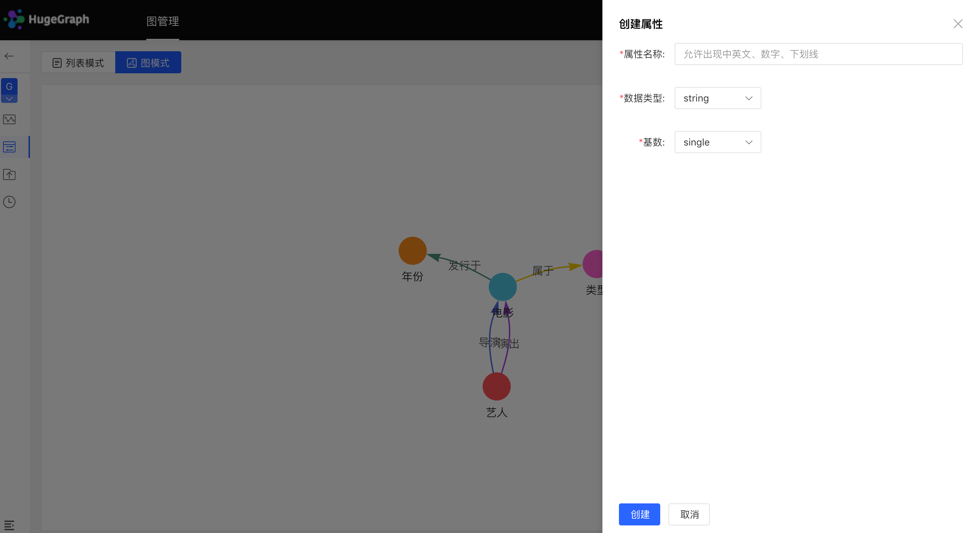

4.2.2.1 Create type

- Fill in or select the attribute name, data type, and cardinality to complete the creation of the attribute.

- Created attributes can be used as attributes of vertex type and edge type.

List mode:

Graph mode:

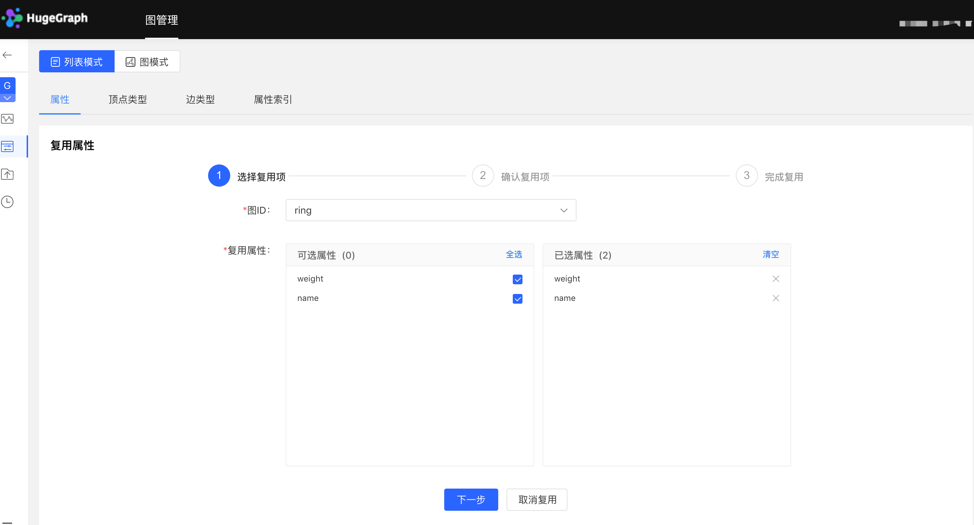

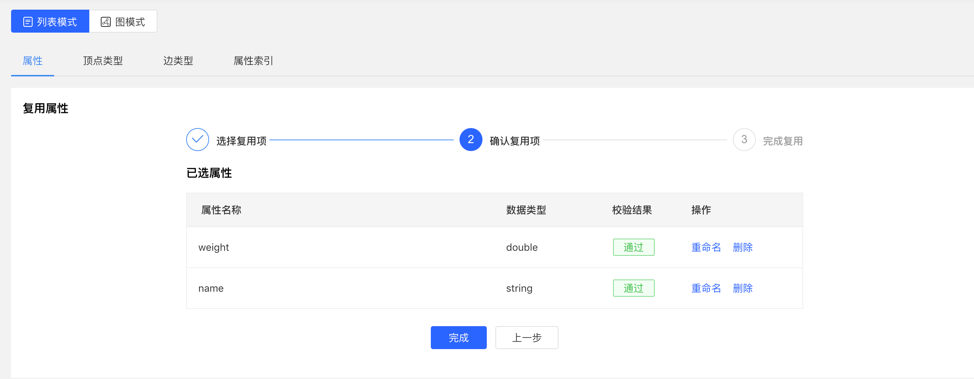

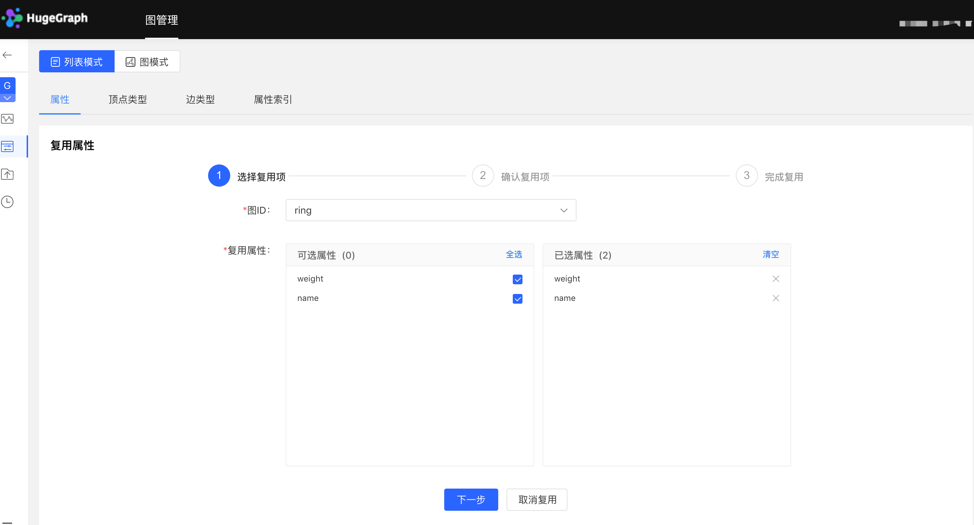

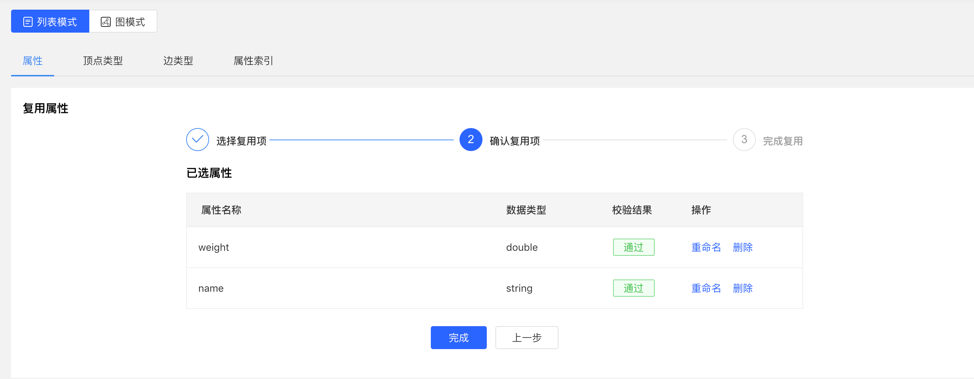

4.2.2.2 Reuse

- The platform provides the [Reuse] function, which can directly reuse the metadata of other graphs.

- Select the graph ID that needs to be reused, and continue to select the attributes that need to be reused. After that, the platform will check whether there is a conflict. After passing, the metadata can be reused.

Select reuse items:

Check reuse items:

4.2.2.3 Management

- You can delete a single item or delete it in batches in the attribute list.

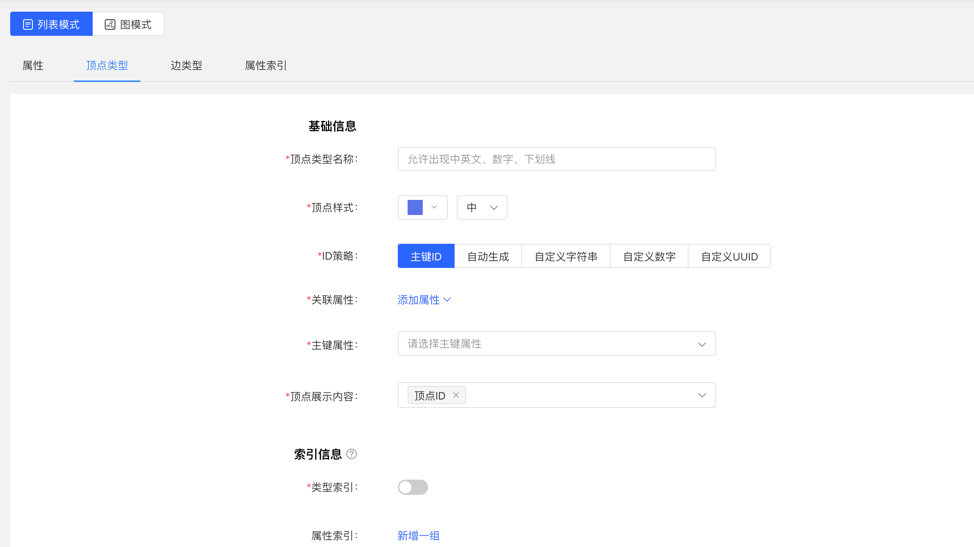

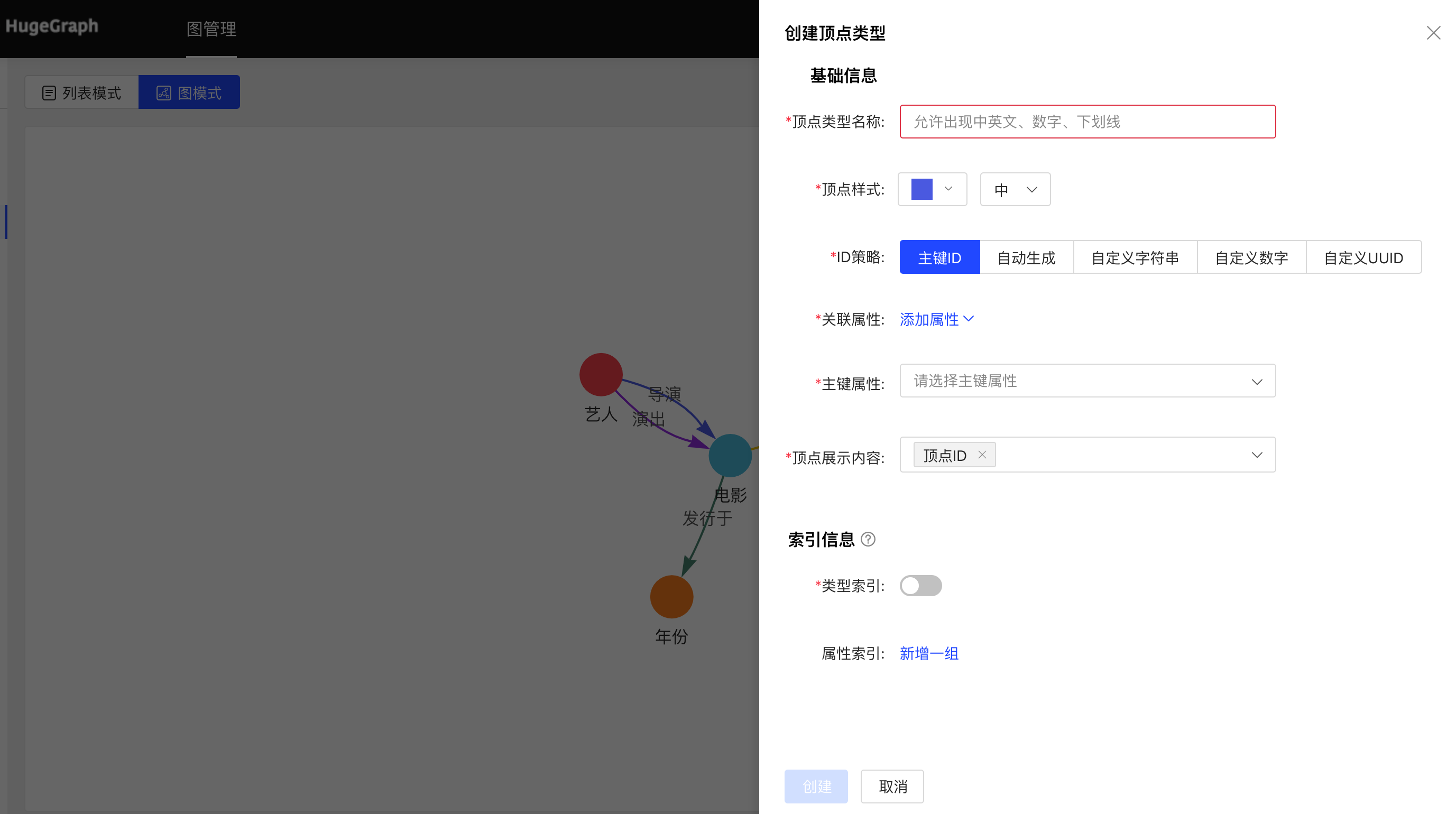

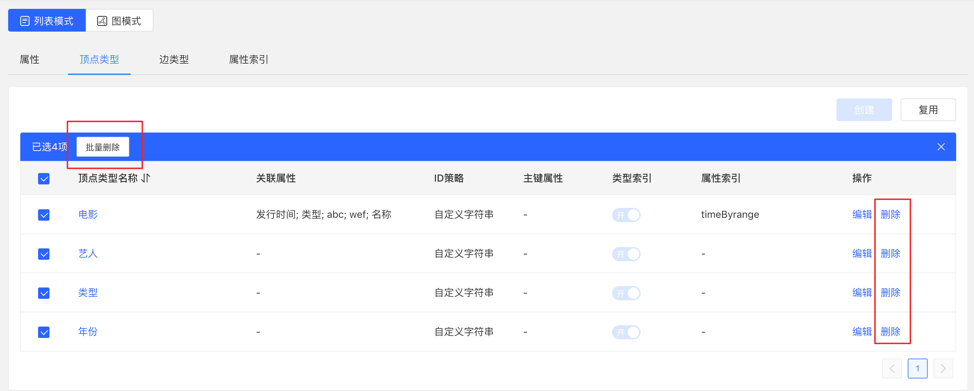

4.2.3 Vertex type

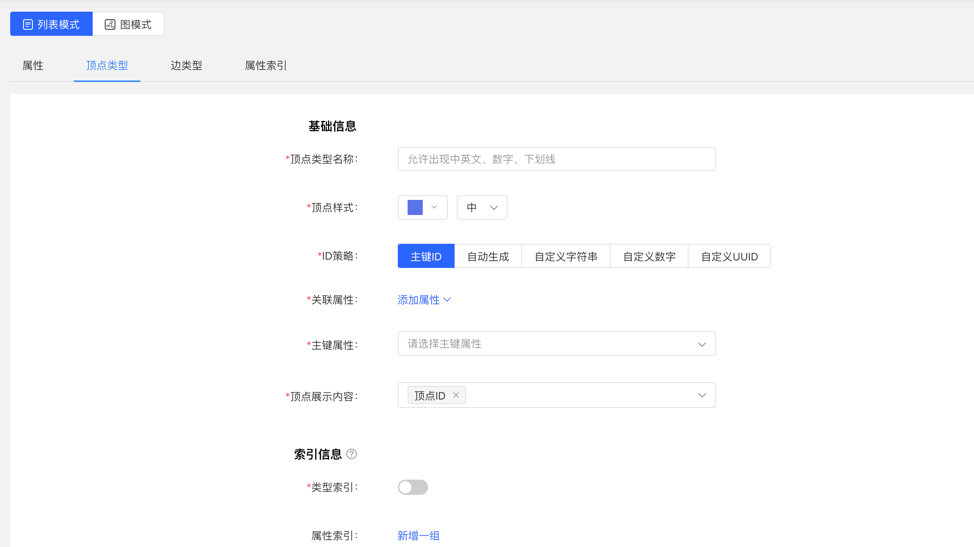

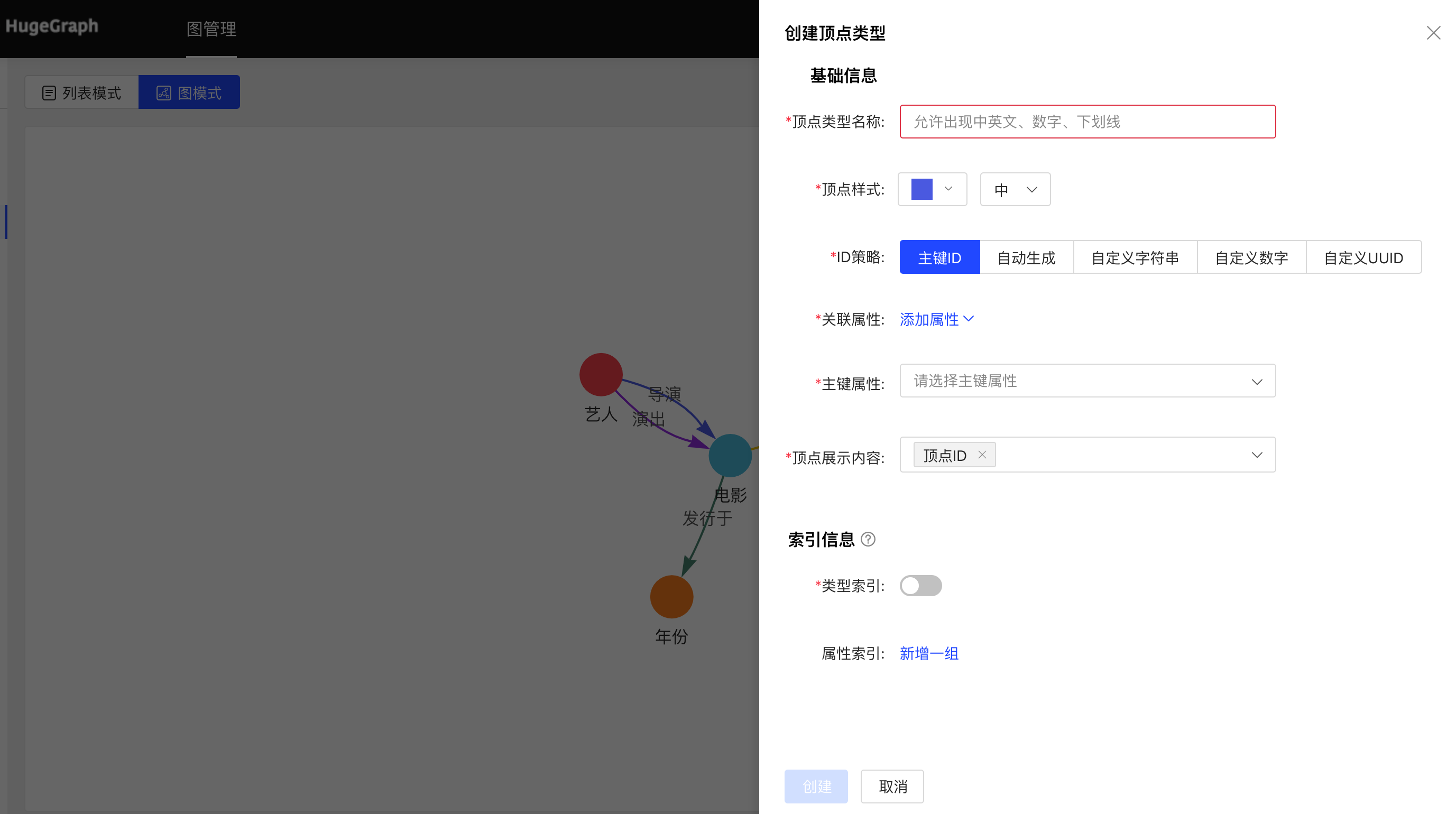

4.2.3.1 Create type

- Fill in or select the vertex type name, ID strategy, associated attributes, primary key attributes, vertex style, and the content displayed below the vertex in query results, as well as the index information (whether to create a type index, and the details of any attribute indexes), to complete the creation of the vertex type.

List mode:

Graph mode:

4.2.3.2 Reuse

- Reusing a vertex type also reuses the attributes and attribute indexes associated with it.

- The reuse method is similar to property reuse; see 4.2.2.2.

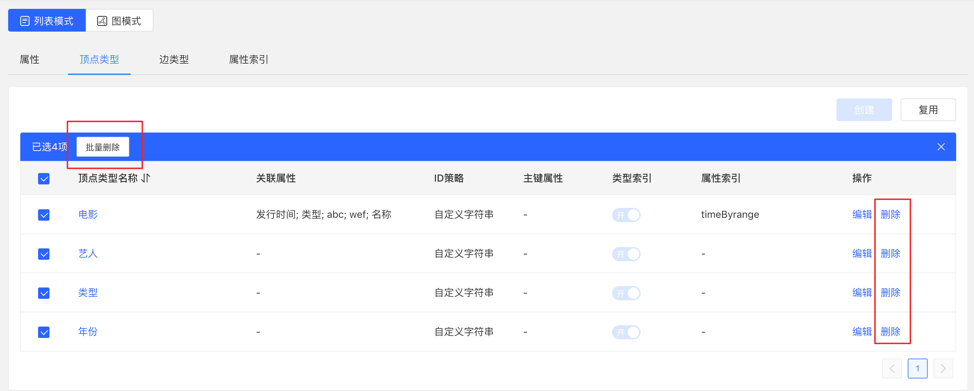

4.2.3.3 Administration

Editing operations are available. The vertex style, association type, vertex display content, and attribute index can be edited, and the rest cannot be edited.

You can delete a single item or delete it in batches.

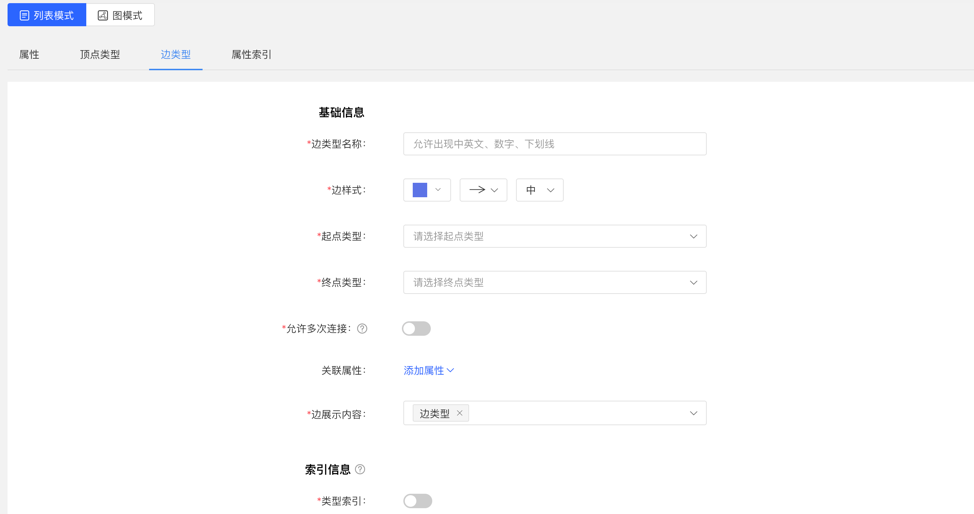

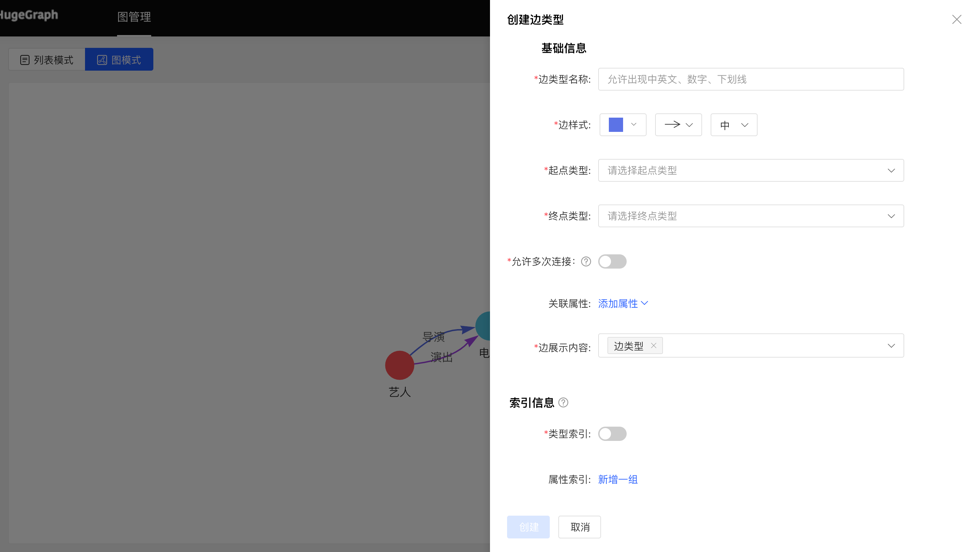

4.2.4 Edge Types

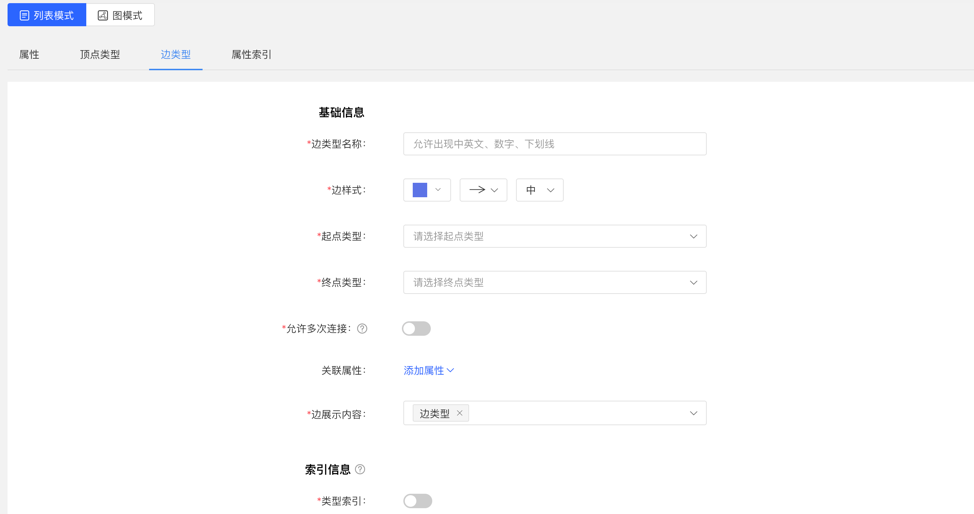

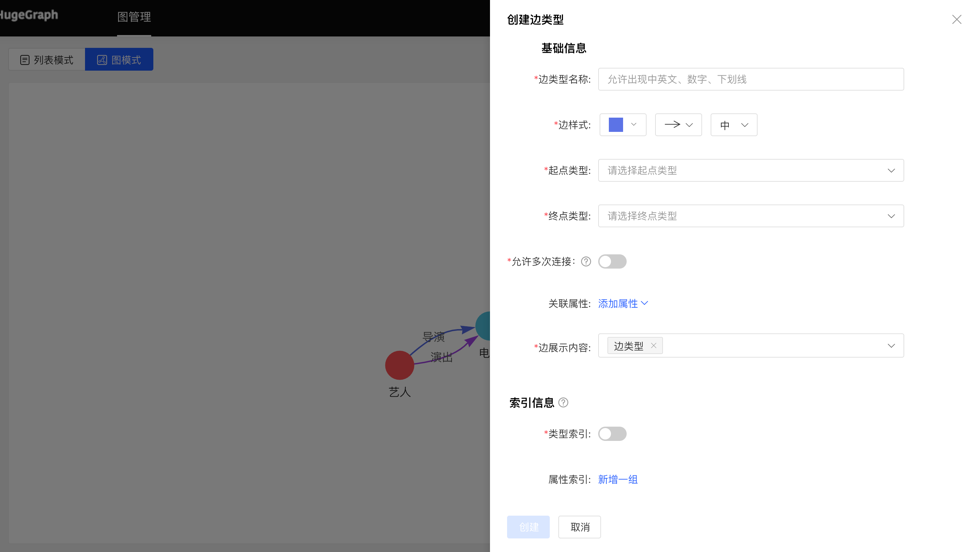

4.2.4.1 Create

- Fill in or select the edge type name, start point type, end point type, associated attributes, whether to allow multiple connections, edge style, and the content displayed below the edge in query results, as well as the index information (whether to create a type index, and the details of any attribute indexes), to complete the creation of the edge type.

List mode:

Graph mode:

4.2.4.2 Reuse

- The reuse of the edge type will reuse the start point type, end point type, associated attribute and attribute index of this type.

- The reuse method is similar to property reuse; see 4.2.2.2.

4.2.4.3 Administration

- Editing operations are available. Edge styles, associated attributes, edge display content, and attribute indexes can be edited, and the rest cannot be edited, the same as the vertex type.

- You can delete a single item or delete it in batches.

4.2.5 Index Types

Displays vertex and edge indices for vertex types and edge types.

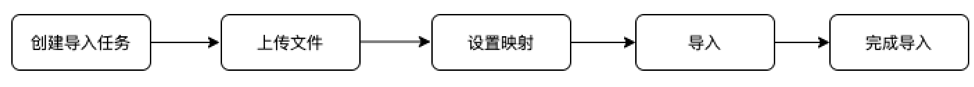

4.3 Data Import

Note: currently, we recommend using hugegraph-loader for formal data import. The built-in import of hubble is intended for testing and getting started.

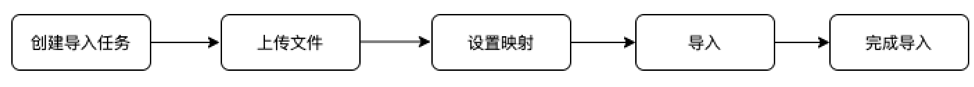

The usage process of data import is as follows:

4.3.1 Module entrance

Left navigation:

4.3.2 Create task

- Fill in the task name and remarks (optional) to create an import task.

- Multiple import tasks can be created and imported in parallel.

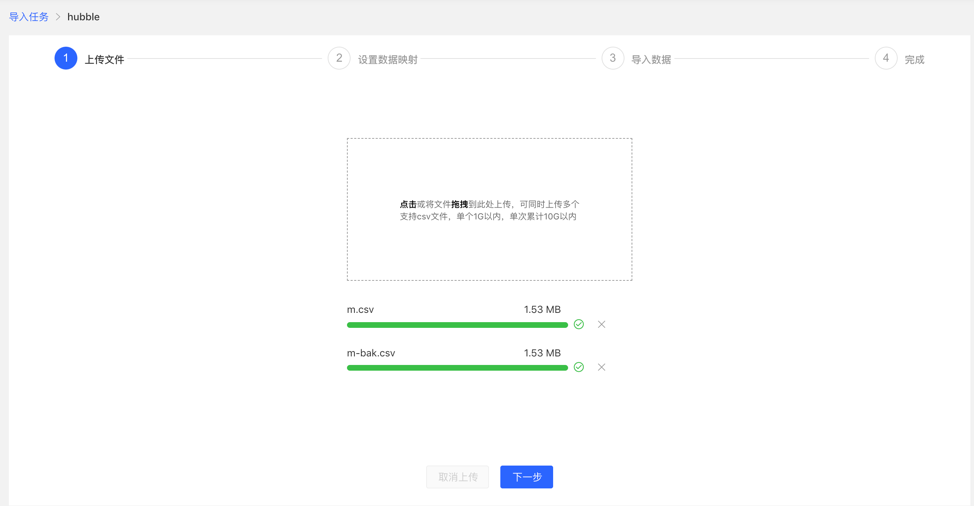

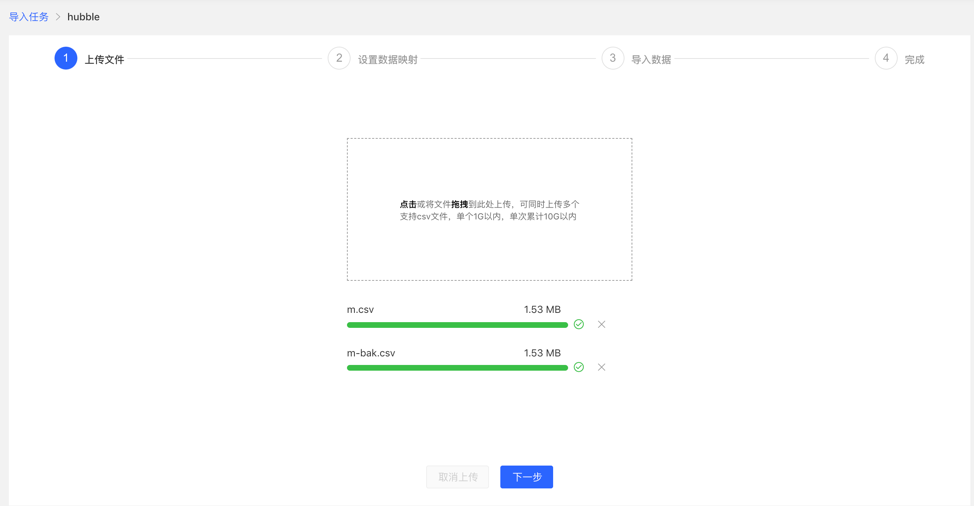

4.3.3 Uploading files

- Upload the files used to build the graph. The only format currently supported is CSV; more formats will be added in the future.

- Multiple files can be uploaded at the same time.

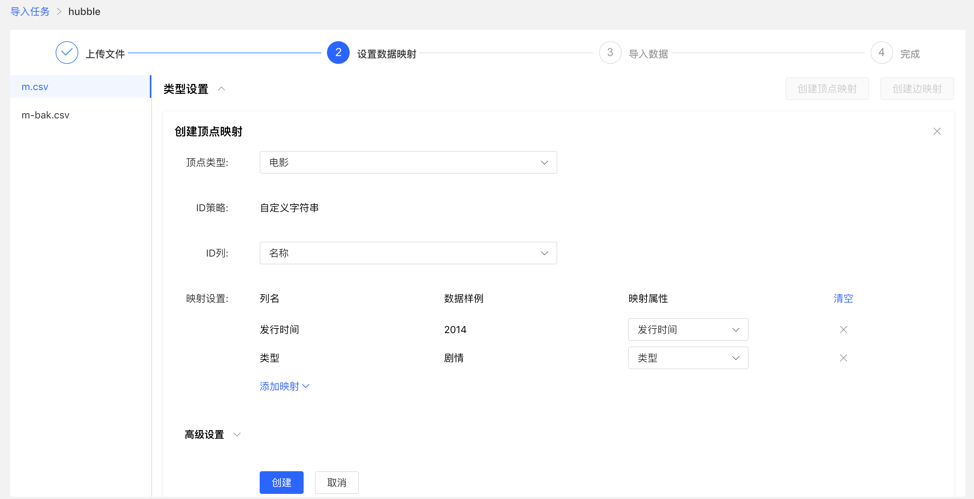

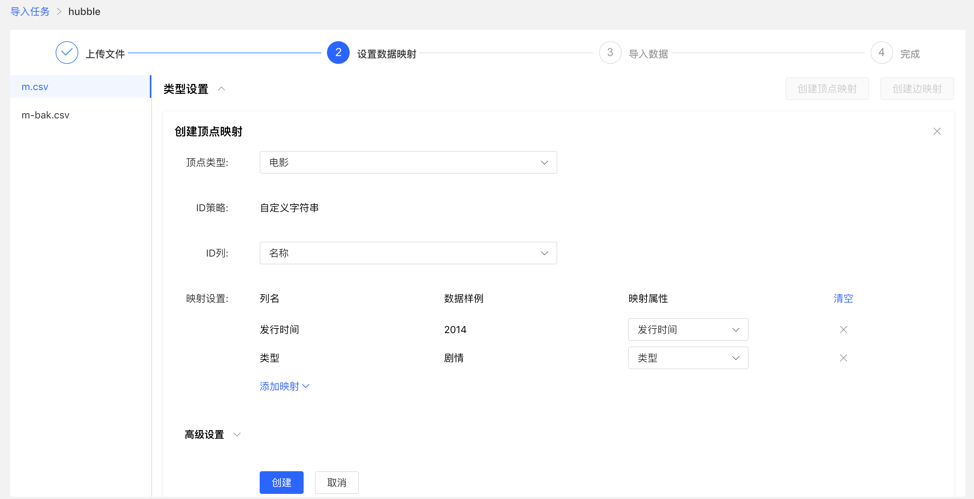

4.3.4 Setting up data mapping

Set up data mapping for the uploaded files, including file settings and type settings.

File settings: Check or fill in the settings of the file itself, such as whether it includes a header, the separator, and the encoding format. All have default values, so nothing needs to be filled in manually.

Type settings:

Vertex mapping and edge mapping:

【Vertex Type】: Select the vertex type and map column data from the uploaded file to its ID;

【Edge Type】: Select the edge type and map column data from the uploaded file to the ID columns of its start point type and end point type;

Mapping settings: Map column data from the uploaded file to the attributes of the selected type. If an attribute name is the same as a header name in the file, the mapping is matched automatically and does not need to be selected manually.

After completing the setting, the setting list will be displayed before proceeding to the next step. It supports the operations of adding, editing and deleting mappings.

Fill in the settings map:

Mapping list:

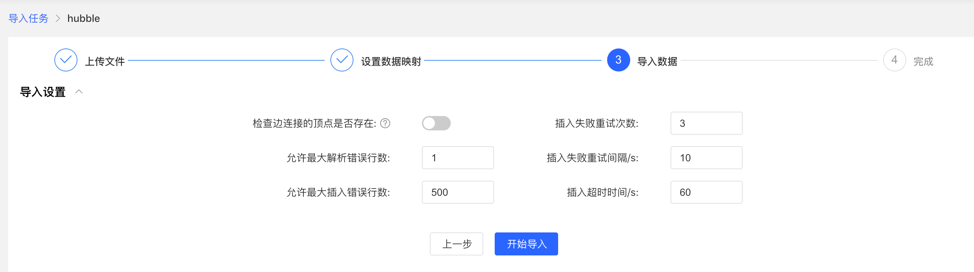

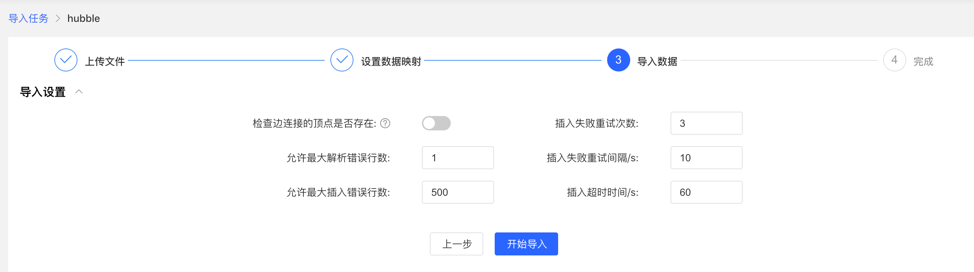
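The automatic matching of attribute names to file headers described in the mapping settings can be pictured with the sketch below. It is not Hubble's actual implementation: the function name `auto_map_columns` is hypothetical, and the matching rule is assumed to be exact, case-sensitive equality between a CSV header and an attribute name.

```python
import csv
import io


def auto_map_columns(csv_text: str, property_names: list, delimiter: str = ",") -> dict:
    """Return {csv_column: property_name} for headers that exactly match a property name."""
    # The first row of the file is treated as the header.
    header = next(csv.reader(io.StringIO(csv_text), delimiter=delimiter))
    known = set(property_names)
    return {column: column for column in header if column in known}
```

Columns whose header has no matching attribute, such as a `hometown` column when the attribute is named `city`, are left out and would need to be mapped manually.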

4.3.5 Import data

Before importing, fill in the import setting parameters. Then you can start importing data into the graph database.

- Import settings
  - The import setting parameters are shown in the figure below. All have default values, so nothing needs to be filled in manually.

- Import details
  - Click Start Import to start the file import task
  - The import details show, for each uploaded file, the configured mapping types, import speed, import progress, elapsed time, and the current task status; each task can be paused, resumed, or stopped
  - If the import fails, you can view the specific reason

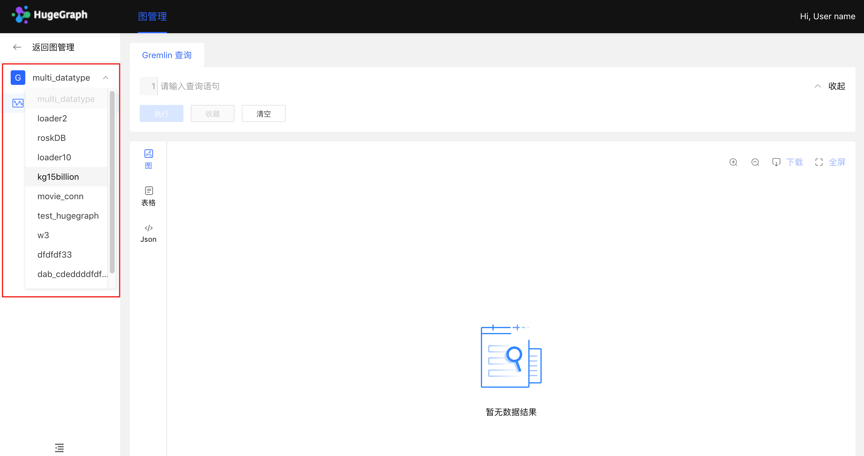

4.4 Data Analysis

4.4.1 Module entry

Left navigation:

4.4.2 Multi-graphs switching

Use the entry on the left to switch between the workspaces of multiple graphs.

4.4.3 Graph Analysis and Processing

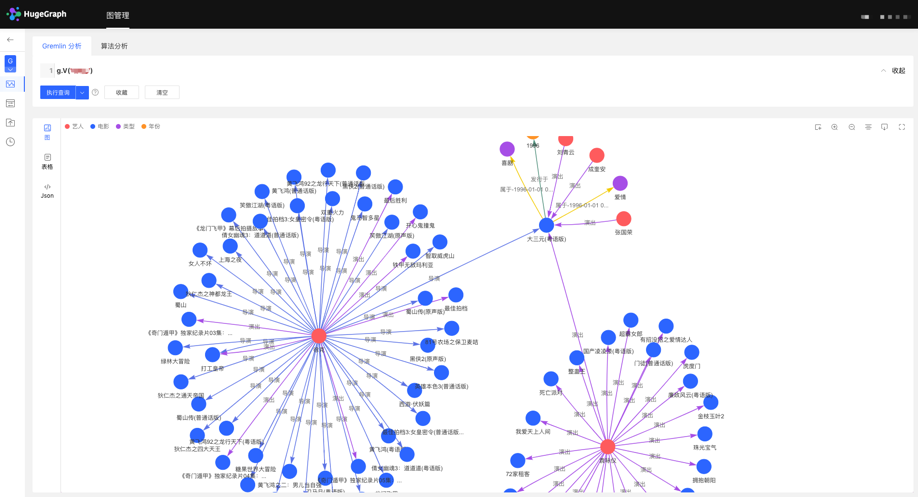

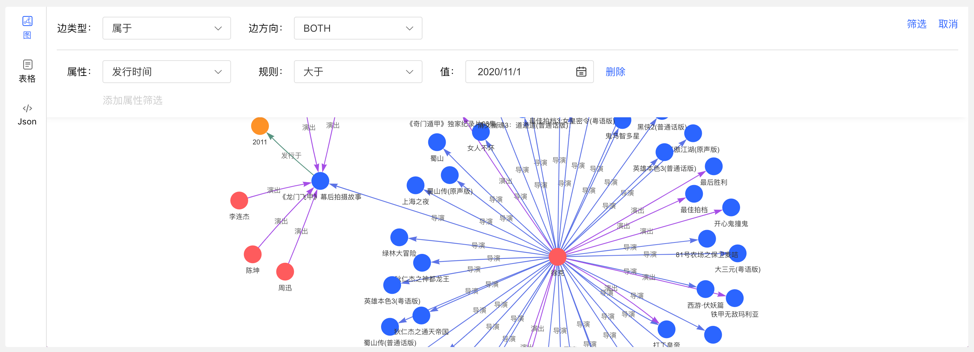

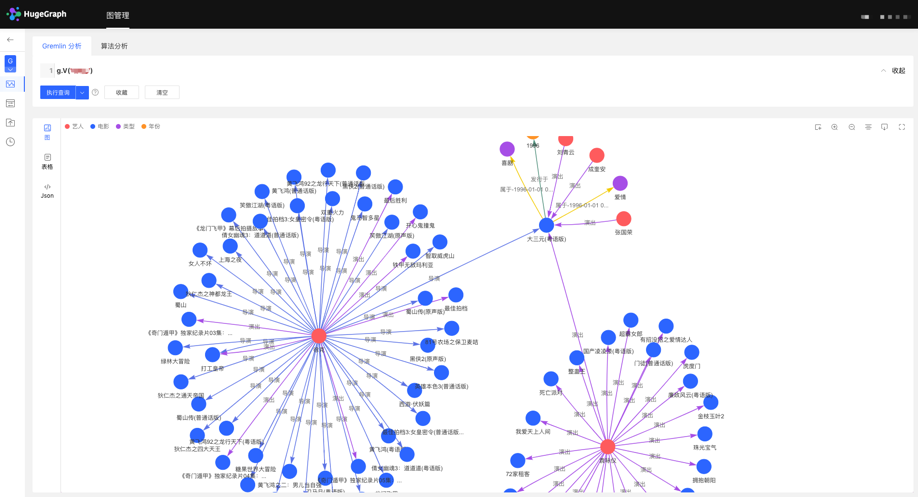

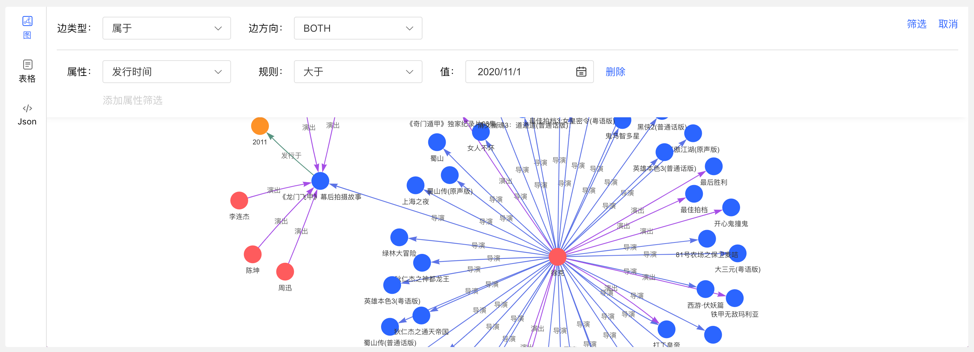

HugeGraph supports Gremlin, the graph traversal query language of Apache TinkerPop3. Gremlin is a general-purpose graph database query language. By entering Gremlin statements and clicking execute, you can query and analyze graph data, create and delete vertices/edges, modify vertex/edge properties, and so on.
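As a rough illustration, the statements below are the kind that could be typed into the query box. They are kept as plain Python strings only for consistency with the other sketches in this guide. The labels person and knows and the property name are assumptions borrowed from the example schema used elsewhere in this documentation, not something your graph necessarily contains.

```python
# Illustrative Gremlin statements; the labels and property names are
# hypothetical and must exist in your own schema for the queries to match.
GREMLIN_EXAMPLES = {
    "sample a few vertices": "g.V().limit(10)",
    "vertices of one type": "g.V().hasLabel('person').limit(10)",
    "follow outgoing edges": "g.V().hasLabel('person').out('knows').values('name')",
    "count all edges": "g.E().count()",
}

for purpose, statement in GREMLIN_EXAMPLES.items():
    print(f"{purpose}: {statement}")
```

Each value is a complete traversal that starts from the graph traversal source g, so it can be pasted and executed on its own.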

After a Gremlin query runs, the result display area below it offers 3 display modes: [Graph Mode], [Table Mode], and [Json Mode].

Zoom, center, full screen, export, and other operations are supported.

【Graph Mode】

【Table mode】

【Json mode】

4.4.4 Data Details

Click a vertex or edge to view its details, including the vertex/edge type, vertex ID, and properties with their values. This adds a further level of detail to the graph display and improves usability.

4.4.5 Multidimensional Path Query of Graph Results

In addition to the global query, in-depth customized queries and hide operations can be performed on the vertices in the query result, enabling customized mining of graph results.

Right-click a vertex to open its menu, which offers expand, query, hide, and other operations.

- Expand: Click to display the vertices associated with the selected vertex.

- Query: Select the edge type and edge direction associated with the selected vertex, then select properties and the corresponding filter rules, to display a customized path.

- Hide: Click to hide the selected vertex and its associated edges.

Double-clicking a vertex also displays the vertices associated with it.

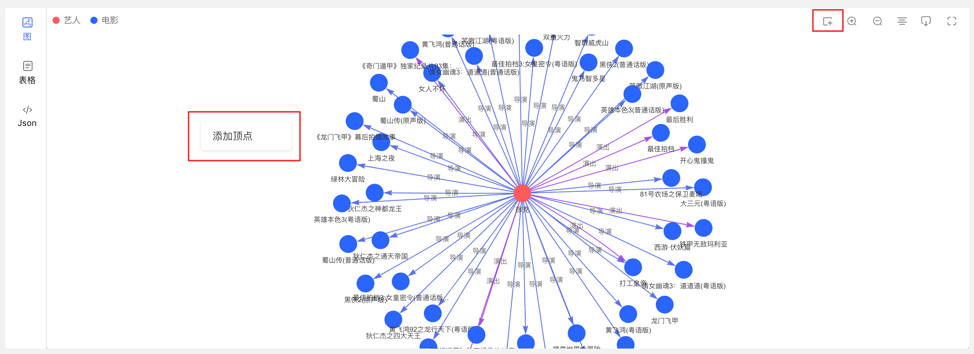

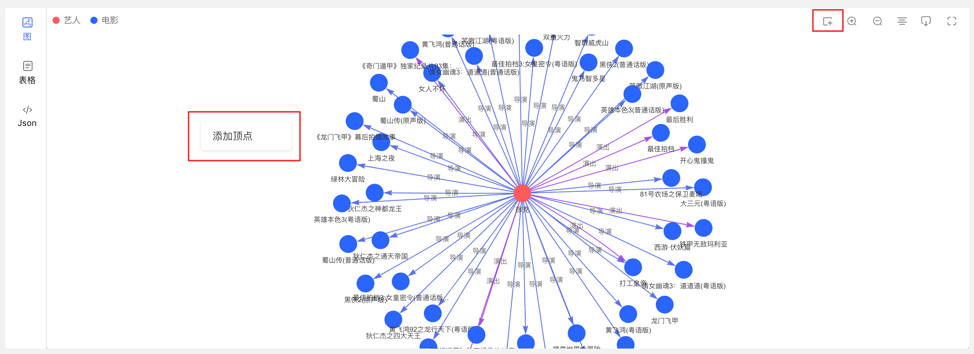

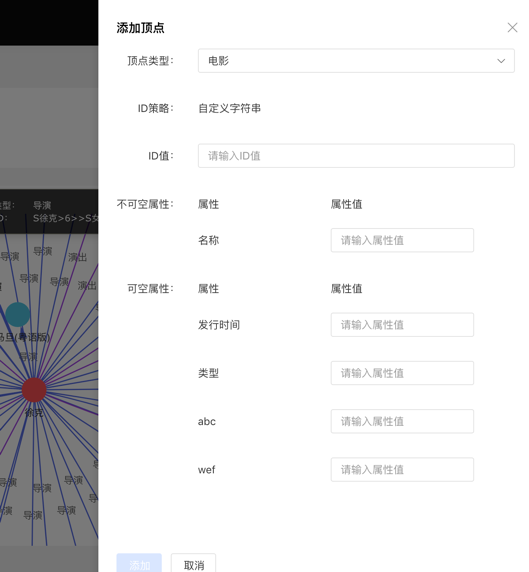

4.4.6 Add vertex/edge

4.4.6.1 Add vertex

In the graph area, vertices can be added dynamically through two entries:

- Click the graph area panel; the Add Vertex entry appears

- Click the first icon in the action bar in the upper right corner

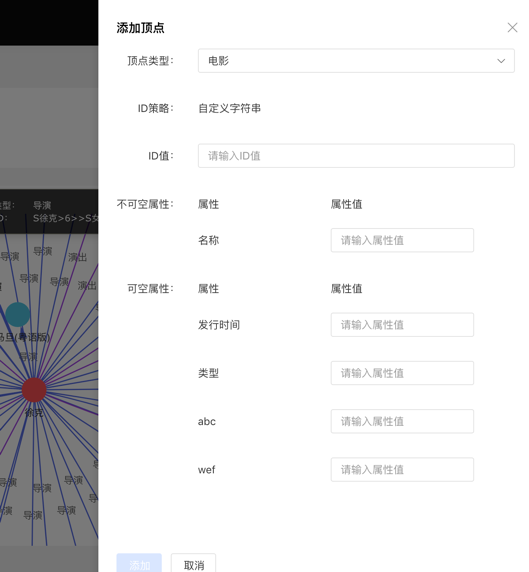

Complete the addition of vertices by selecting or filling in the vertex type, ID value, and attribute information.

The entry is as follows:

Add the vertex content as follows:

4.4.6.2 Add edge

Right-click a vertex in the graph result to add an outgoing or incoming edge for that vertex.

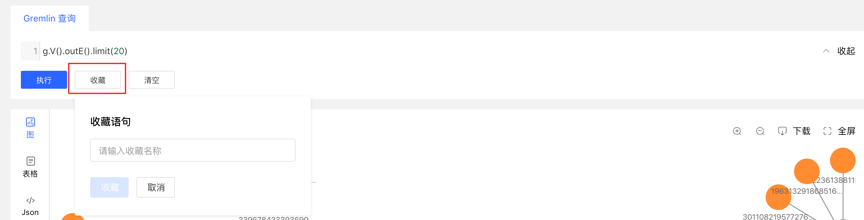

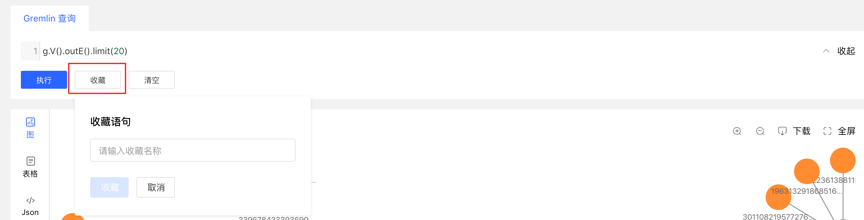

4.4.7 Query execution records and favorites

- Each query is recorded at the bottom of the graph area, including query time, execution type, content, status, and elapsed time, together with [favorite] and [load] operations. This gives a complete, traceable record of graph executions and lets you quickly load and reuse previously executed content.

- Frequently used statements can be saved as favorites so that they can be called up quickly.

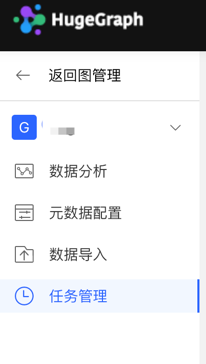

4.5 Task Management

4.5.1 Module entry

Left navigation:

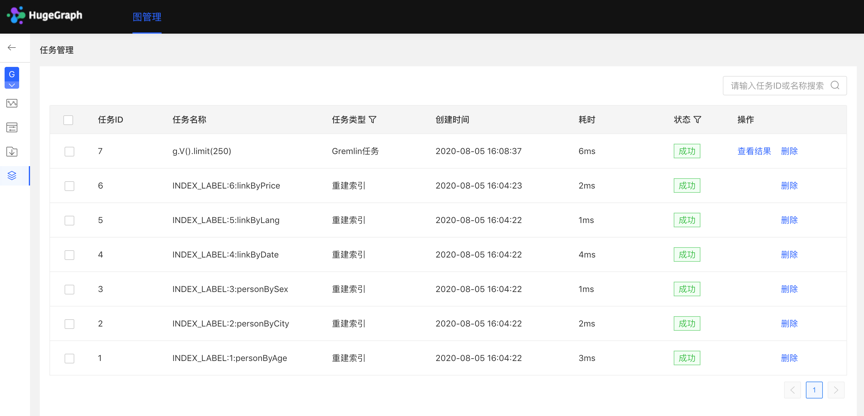

4.5.2 Task Management

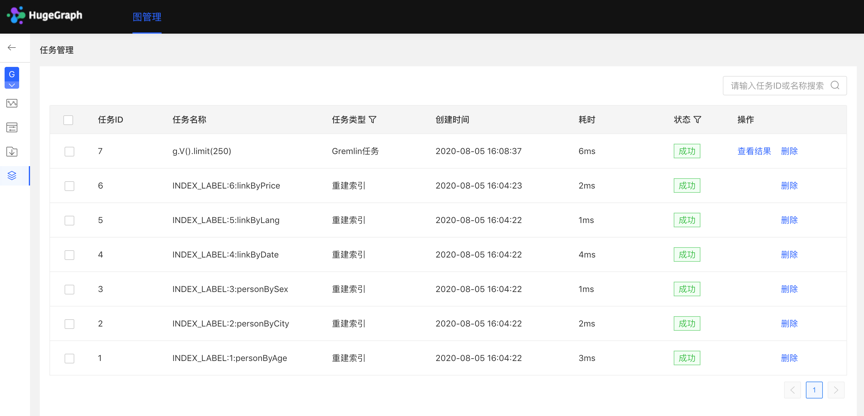

- Provides unified management and result viewing for asynchronous tasks. There are 4 types of asynchronous tasks:

  - gremlin: Gremlin task

  - algorithm: OLAP algorithm task

  - remove_schema: remove metadata

  - rebuild_index: rebuild the index

- The list displays the asynchronous tasks of the current graph, including task ID, task name, task type, creation time, elapsed time, status, and available operations.

- Supports filtering by task type and status

- Supports searching by task ID and task name

- Asynchronous tasks can be deleted individually or in batches

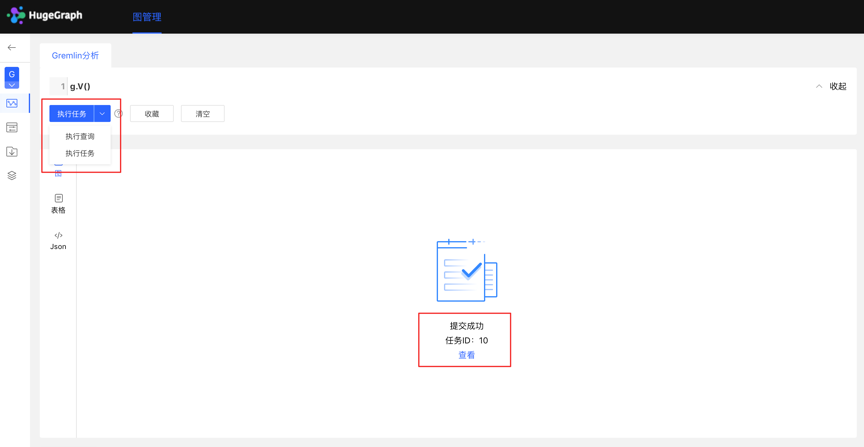

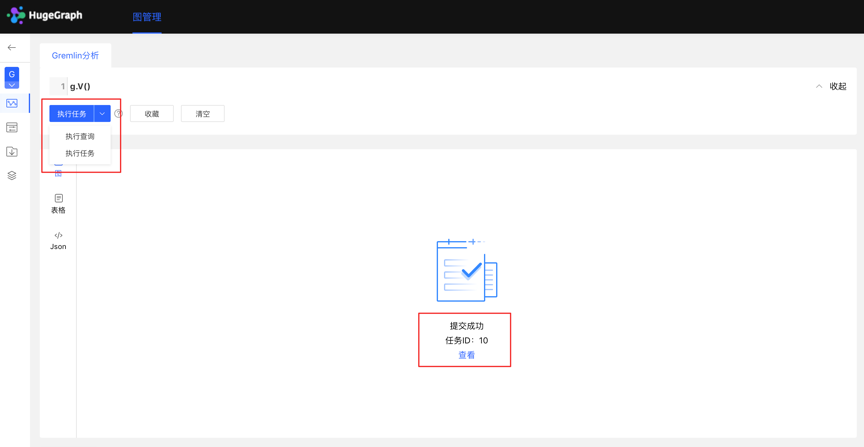

4.5.3 Gremlin asynchronous tasks

- Create a task

  - The data analysis module currently supports two Gremlin operations: Gremlin query and Gremlin task. If the user switches to Gremlin task, clicking execute creates an asynchronous task in the asynchronous task center.

- Task submission

  - After the task is submitted successfully, the graph area returns the submission result and the task ID.

- Task details

  - A [View] entry is provided to jump to the task details and view the execution status of the current task. After jumping to the task center, the row of the currently executing task is displayed directly.

Click the View entry to jump to the task management list, as follows:

- View the results

  - The results are displayed in JSON format.

4.5.4 OLAP algorithm tasks

Hubble does not provide a visual interface for running OLAP algorithms. You can call the RESTful API to run OLAP algorithm tasks, then find the corresponding tasks by ID in task management to view their progress and results.
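The same progress check can also be scripted. The Python sketch below polls a task by ID until it finishes. The URL layout /graphs/{graph}/tasks/{id}, the task_status field, and the set of terminal status values are assumptions based on the HugeGraph-Server task API and may differ between versions (newer releases may add a graph space segment to the path). The helper names are hypothetical.

```python
import json
import time
import urllib.request

# Assumed terminal states; verify them against your server version.
TERMINAL_STATUSES = {"success", "failed", "cancelled"}

def task_url(base_url, graph, task_id):
    """Build the URL of a single asynchronous task (path layout is assumed)."""
    return f"{base_url.rstrip('/')}/graphs/{graph}/tasks/{task_id}"

def is_finished(task):
    """Return True once the task JSON reports a terminal status."""
    return str(task.get("task_status", "")).lower() in TERMINAL_STATUSES

def wait_for_task(base_url, graph, task_id, interval=2.0, fetch=None):
    """Poll the task until it finishes and return its final JSON document."""
    def http_get(url):
        with urllib.request.urlopen(url) as resp:
            return json.load(resp)

    fetch = fetch or http_get  # a custom fetch function can replace real HTTP
    url = task_url(base_url, graph, task_id)
    while True:
        task = fetch(url)
        if is_finished(task):
            return task
        time.sleep(interval)
```

A call such as wait_for_task("http://localhost:8080", "hugegraph", 7) would block until task 7 reaches a terminal state; the host, graph name, and task ID here are placeholders.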

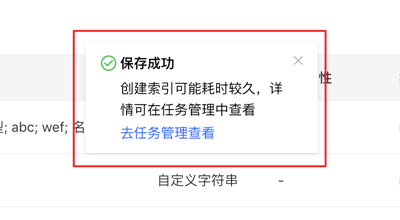

4.5.5 Delete metadata, rebuild index

- Create a task

  - In the metadata modeling module, deleting metadata can create an asynchronous task for deleting the metadata

  - When editing an existing vertex/edge type and adding an index, an asynchronous task for creating the index can be created

- Task details

  - After confirming/saving, you can jump to the task center to view the details of the current task

5 Configuration

HugeGraph-Hubble can be configured through the conf/hugegraph-hubble.properties file.

5.1 Server Configuration

| Configuration Item | Default Value | Description |
|---|---|---|
| hubble.host | 0.0.0.0 | The address that Hubble service binds to |
| hubble.port | 8088 | The port that Hubble service listens on |

5.2 Gremlin Query Limits

These settings control query result limits to prevent memory issues:

| Configuration Item | Default Value | Description |
|---|---|---|
| gremlin.suffix_limit | 250 | Maximum query suffix length |
| gremlin.vertex_degree_limit | 100 | Maximum vertex degree to display |
| gremlin.edges_total_limit | 500 | Maximum number of edges returned |
| gremlin.batch_query_ids | 100 | ID batch query size |
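To show how overrides in conf/hugegraph-hubble.properties relate to the defaults listed above, here is a minimal Python sketch. It assumes the file uses plain key=value lines, as is conventional for .properties files. The parser is a simplified illustration, not Hubble's own loader, and the override values are hypothetical.

```python
# Defaults copied from the tables above.
DEFAULTS = {
    "hubble.host": "0.0.0.0",
    "hubble.port": "8088",
    "gremlin.suffix_limit": "250",
    "gremlin.vertex_degree_limit": "100",
    "gremlin.edges_total_limit": "500",
    "gremlin.batch_query_ids": "100",
}

def load_properties(text, defaults=DEFAULTS):
    """Overlay key=value lines on top of the defaults, skipping comments."""
    settings = dict(defaults)
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings

# Hypothetical overrides, written the way they might appear in the file.
example = """
# listen on another port and allow more edges per query
hubble.port=8090
gremlin.edges_total_limit=1000
"""

settings = load_properties(example)
print(settings["hubble.port"])                # 8090
print(settings["gremlin.edges_total_limit"])  # 1000
print(settings["hubble.host"])                # 0.0.0.0
```

Keys that are not overridden keep their defaults, which is why hubble.host remains 0.0.0.0 in this example.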

3.2.2 - HugeGraph-Loader Quick Start

1 HugeGraph-Loader Overview

HugeGraph-Loader is the data import component of HugeGraph, which can convert data from various data sources into graph vertices and edges and import them into the graph database in batches.

Currently supported data sources include:

- Local disk file or directory: supports TEXT, CSV, and JSON format files, as well as compressed files

- HDFS file or directory: supports compressed files

- Mainstream relational databases, such as MySQL, PostgreSQL, Oracle, SQL Server

Local disk files and HDFS files support resumable uploads.

Each of these is explained in detail below.

Note: HugeGraph-Loader requires a running HugeGraph Server service. Please refer to HugeGraph-Server Quick Start to download and start the Server.

Testing Guide: For running HugeGraph-Loader tests locally, please refer to HugeGraph Toolchain Local Testing Guide

2 Get HugeGraph-Loader

There are three ways to get HugeGraph-Loader:

- Use Docker image (Convenient for Test/Dev)

- Download the compiled tarball

- Clone source code then compile and install

2.1 Use Docker image (Convenient for Test/Dev)

The loader service can be deployed with docker run -itd --name loader hugegraph/loader:1.5.0. The data to be loaded can be made available inside the loader container either by mounting it with -v /path/to/data/file:/loader/file or by copying it in with docker cp.

Alternatively, start the loader with docker-compose by running docker-compose up -d. An example docker-compose.yml is as follows:

version: '3'
services:
  server:
    image: hugegraph/hugegraph:1.3.0
    container_name: server
    ports:
      - 8080:8080

  loader:
    image: hugegraph/loader:1.3.0
    container_name: loader
    # mount your own data here
    # volumes:
      # - /path/to/data/file:/loader/file

For the specific data loading process, see 4.5 Use Docker to load data.

Note:

The Docker image of hugegraph-loader is a convenience release for starting hugegraph-loader quickly; it is not an official distribution artifact. More details are available in the ASF Release Distribution Policy.

Using a release tag (such as 1.5.0) is recommended for a stable version. Use the latest tag to try the newest features in development.

2.2 Download the compiled archive

Download the latest version of the HugeGraph-Toolchain release package:

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-toolchain-incubating-{version}.tar.gz

tar zxf *hugegraph*.tar.gz
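The {version} placeholder appears twice in the download URL and must be replaced by the same release number in both places. A small Python sketch of that substitution follows. The version 1.5.0 is used only because it is the release tag mentioned earlier in this guide; check the download page for the releases that actually exist.

```python
# URL template copied from the wget command above; {version} is a placeholder.
TEMPLATE = (
    "https://downloads.apache.org/hugegraph/{version}/"
    "apache-hugegraph-toolchain-incubating-{version}.tar.gz"
)

def release_url(version):
    """Fill in both {version} placeholders with the same release number."""
    return TEMPLATE.format(version=version)

print(release_url("1.5.0"))
```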

2.3 Clone source code to compile and install

Clone the latest version of HugeGraph-Loader source package:

# 1. get from github

git clone https://github.com/apache/hugegraph-toolchain.git

# 2. or download the source package directly (please choose the latest version)

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-toolchain-incubating-{version}-src.tar.gz

How to install ojdbc

Due to the license restrictions of Oracle OJDBC, you need to manually install ojdbc into the local Maven repository.

Visit the Oracle JDBC downloads page and select the Oracle Database 12c Release 2 (12.2.0.1) drivers, as shown in the following figure.

After opening the link, select “ojdbc8.jar”.

To install ojdbc8 into the local Maven repository, change to the directory that contains ojdbc8.jar and run the following command.

mvn install:install-file -Dfile=./ojdbc8.jar -DgroupId=com.oracle -DartifactId=ojdbc8 -Dversion=12.2.0.1 -Dpackaging=jar

Compile and generate tar package:

cd hugegraph-loader

mvn clean package -DskipTests

3 How to use

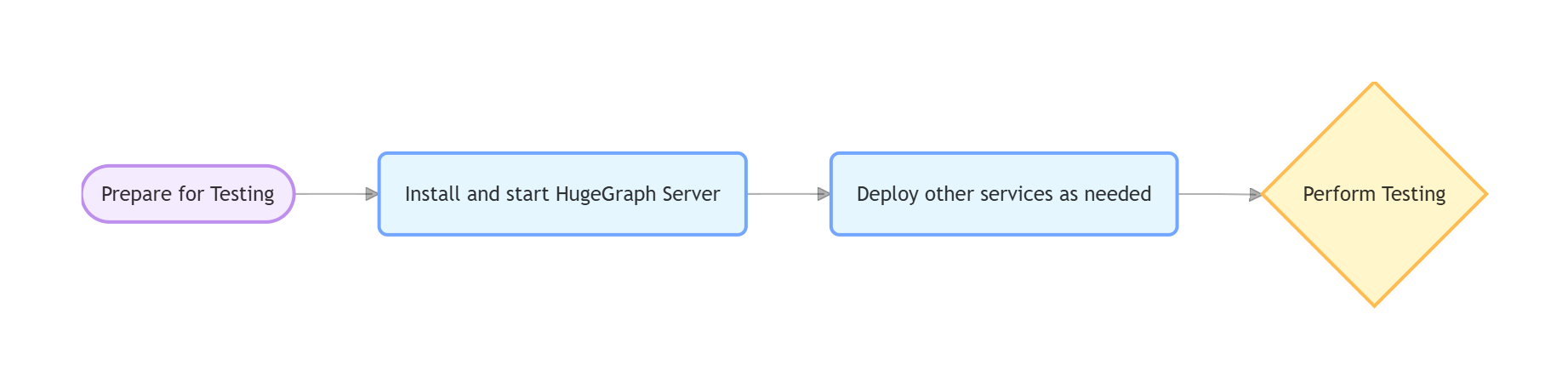

The basic process of using HugeGraph-Loader is divided into the following steps:

- Write graph schema

- Prepare data files

- Write input source mapping files

- Run the import command

3.1 Construct graph schema

This step is the modeling process. Users need to have a clear idea of their existing data and the graph model they want to create, and then write the schema to build the graph model.

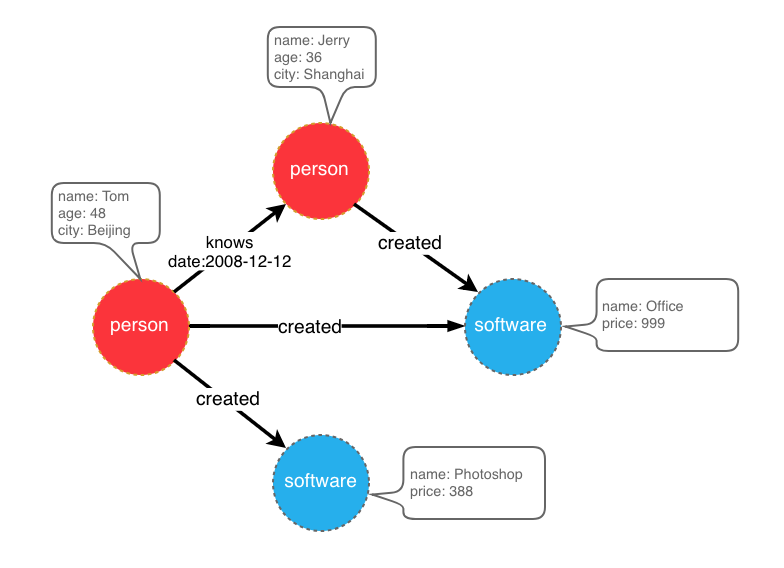

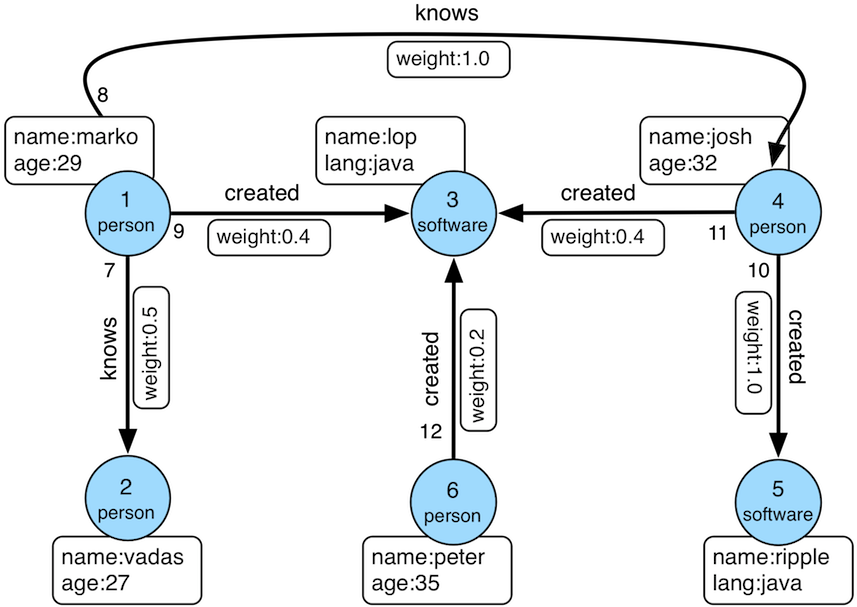

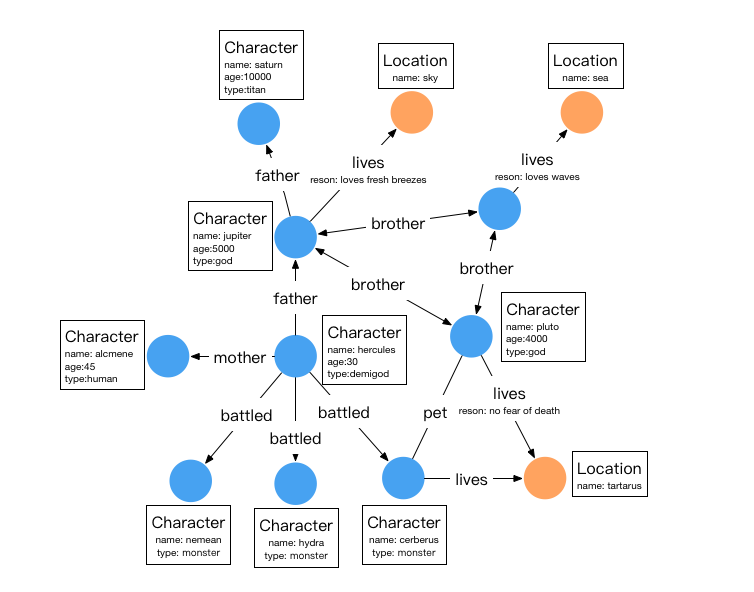

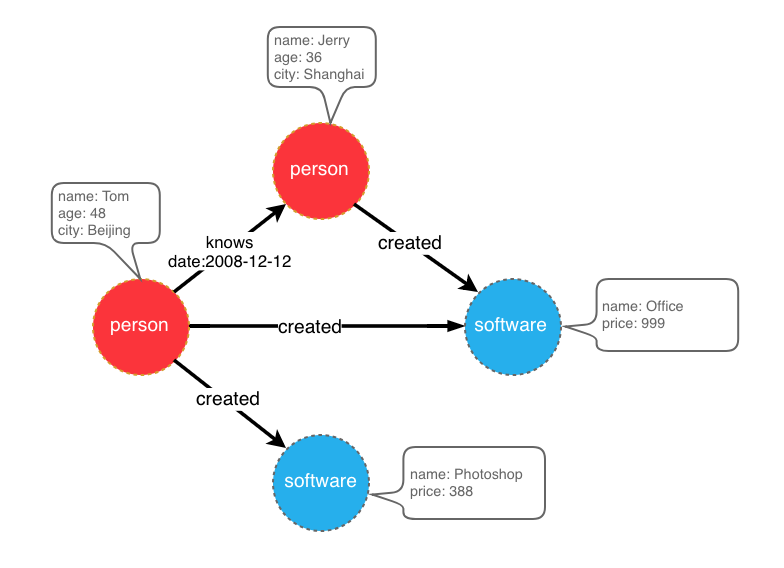

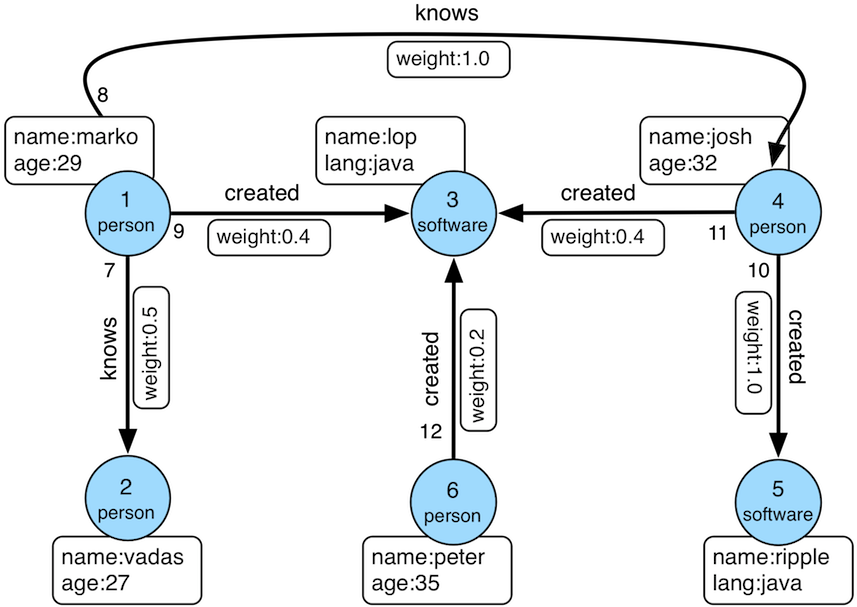

For example, suppose you want to create a graph with two types of vertices and two types of edges. The vertices are “person” and “software”, and the edges are “person knows person” and “person created software”. These vertices and edges have properties: the vertex “person” has “name”, “age”, and other properties; the vertex “software” has “name”, “price”, and other properties; the edge “knows” has a “date” property, and so on.

graph model example

After designing the graph model, we can use groovy to write the definition of schema and save it to a file, here named schema.groovy.

// Create some properties

schema.propertyKey("name").asText().ifNotExist().create();

schema.propertyKey("age").asInt().ifNotExist().create();

schema.propertyKey("city").asText().ifNotExist().create();

schema.propertyKey("date").asText().ifNotExist().create();

schema.propertyKey("price").asDouble().ifNotExist().create();

// Create the person vertex type, which has three attributes: name, age, city, and the primary key is name

schema.vertexLabel("person").properties("name", "age", "city").primaryKeys("name").ifNotExist().create();

// Create a software vertex type, which has two properties: name, price, the primary key is name

schema.vertexLabel("software").properties("name", "price").primaryKeys("name").ifNotExist().create();

// Create the knows edge type, which goes from person to person

schema.edgeLabel("knows").sourceLabel("person").targetLabel("person").ifNotExist().create();