
GUIDES

1 - HugeGraph Architecture Overview

1 Overview

As a general-purpose graph database product, HugeGraph needs to possess basic graph database functionality. HugeGraph supports two types of graph computation: OLTP and OLAP. For OLTP, it implements the Apache TinkerPop3 framework and supports the Gremlin and Cypher query languages. It comes with a complete application toolchain and provides a plugin-based backend storage driver framework.

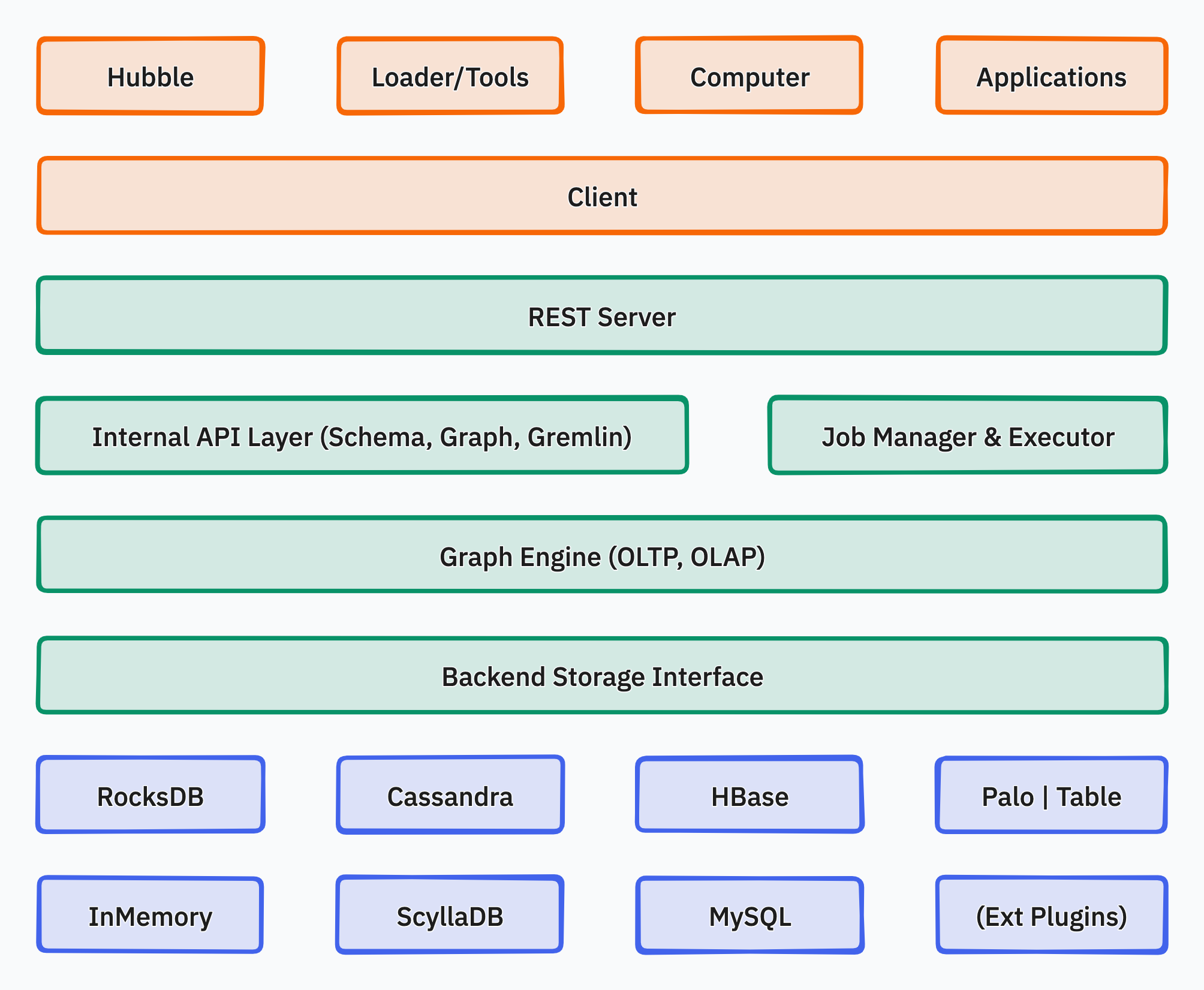

Below is the overall architecture diagram of HugeGraph:

HugeGraph consists of three layers of functionality: the application layer, the graph engine layer, and the storage layer.

- Application Layer:

- Hubble: An all-in-one visual analytics platform that covers the entire process of data modeling, rapid data import, online and offline analysis of data, and unified management of graphs. It provides a guided workflow for operating graph applications.

- Loader: A data import component that can transform data from various sources into vertices and edges and bulk import them into the graph database.

- Tools: Command-line tools for deploying, managing, and backing up/restoring data in HugeGraph.

- Computer: A distributed graph processing system (OLAP) that implements Pregel. It can run on Kubernetes.

- Client: HugeGraph client written in Java. Users can use the client to operate HugeGraph using Java code. Support for other languages such as Python, Go, and C++ may be provided in the future.

- Graph Engine Layer:

- REST Server: Provides a RESTful API for querying graph/schema information, supports the Gremlin and Cypher query languages, and offers APIs for service monitoring and operations.

- Graph Engine: Supports both OLTP and OLAP graph computation types, with OLTP implementing the Apache TinkerPop3 framework.

- Backend Interface: Implements the storage of graph data to the backend.

- Storage Layer:

- Storage Backend: Supports multiple built-in storage backends (RocksDB/MySQL/HBase/…) and allows users to extend custom backends without modifying the existing source code.

2 - HugeGraph Design Concepts

1. Property Graph

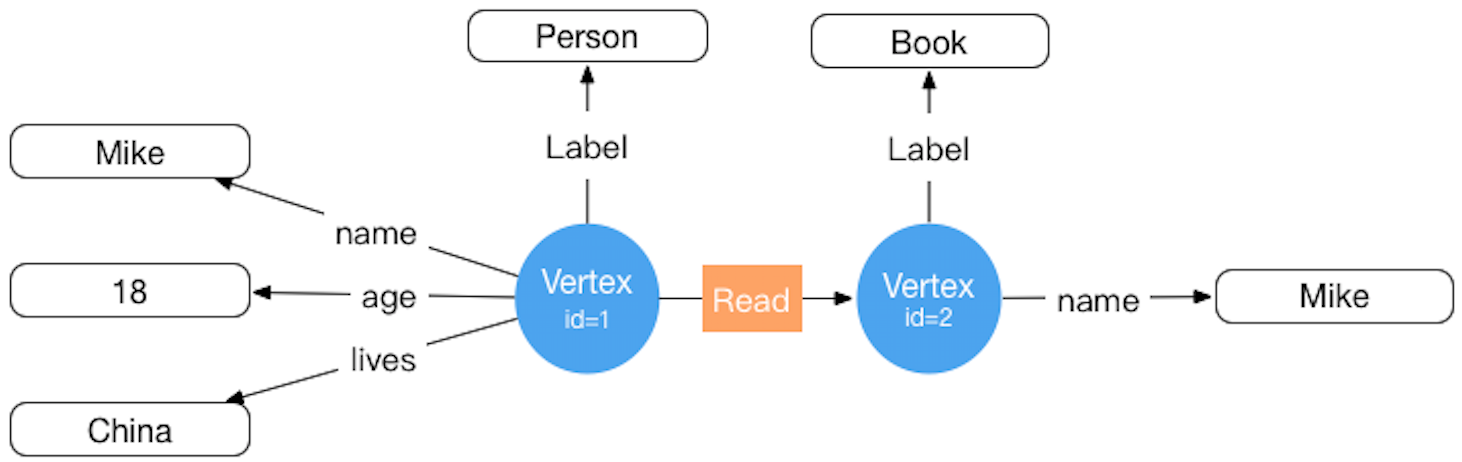

There are two common graph data representation models, namely the RDF (Resource Description Framework) model and the Property Graph (Property Graph) model. Both RDF and Property Graph are the most basic and well-known graph representation modes, and both can represent entity-relationship modeling of various graphs. RDF is a W3C standard, while Property Graph is an industry standard and is widely supported by graph database vendors. HugeGraph currently uses Property Graph.

The storage concept model corresponding to HugeGraph is also designed with reference to Property Graph. For specific examples, see the figure below: ( This figure is outdated for the old version design, please ignore it and update it later )

Inside HugeGraph, each vertex/edge is identified by a unique VertexId/EdgeId, and the attributes are stored inside the corresponding vertex/edge. The relationship/mapping between vertices is stored through edges.

When vertex attribute values are stored by edge pointer, updating a specific vertex attribute is a direct overwrite; the disadvantage is that the VertexId is stored redundantly. Updating an attribute of a relationship requires the read-and-modify method: read all attributes first, modify some of them, and then write them back to the storage system, so update efficiency is low. In practice, vertex attributes are modified more often than edge attributes. For example, computations such as PageRank and graph clustering frequently modify vertex attribute values.

2. Graph Partition Scheme

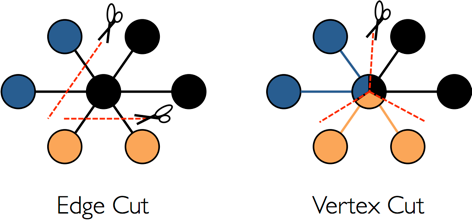

For distributed graph databases, there are two partition storage methods for graphs: Edge Cut and Vertex Cut, as shown in the following figure. When using the Edge Cut method to store graphs, any vertex will only appear on one machine, while edges may be distributed on different machines. This storage method may lead to multiple storage of edges. When using the Vertex Cut method to store graphs, any edge will only appear on one machine, and each same point may be distributed to different machines. This storage method may result in multiple storage of vertices.

The Edge Cut scheme supports high-performance insert and update operations, while the Vertex Cut scheme is better suited to static graph query and analysis. Edge Cut is therefore suitable for OLTP graph queries, and Vertex Cut for OLAP graph queries. HugeGraph currently adopts the Edge Cut partition scheme.
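As a rough illustration of the Edge Cut idea, the following toy sketch places every vertex on exactly one partition and stores an edge next to each of its endpoints. The hash-modulo placement rule and all names are assumptions made for the example; this is not HugeGraph's storage code.

```java
import java.util.ArrayList;
import java.util.List;

// Toy Edge Cut partitioner. The placement rule (vertex id modulo the number
// of partitions) and the class name are hypothetical, for illustration only.
final class EdgeCutSketch {

    // Every vertex lives on exactly one partition.
    static int partitionOf(long vertexId, int partitions) {
        return (int) Math.floorMod(vertexId, (long) partitions);
    }

    // An edge is kept with its source (as an out-edge) and with its target
    // (as an in-edge), so an edge that crosses partitions is stored twice.
    static List<Integer> partitionsOfEdge(long src, long tgt, int partitions) {
        List<Integer> result = new ArrayList<>();
        int srcPartition = partitionOf(src, partitions);
        int tgtPartition = partitionOf(tgt, partitions);
        result.add(srcPartition);
        if (tgtPartition != srcPartition) {
            result.add(tgtPartition);
        }
        return result;
    }
}
```

With three partitions, an edge between vertices 1 and 4 stays on one partition, while an edge between vertices 1 and 2 is stored on two. This is the "multiple storage of edges" mentioned above.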

3. VertexId Strategy

HugeGraph vertices support three Id strategies, and different VertexLabels in the same graph database can use different Id strategies. The Id strategies currently supported by HugeGraph are:

- Automatic generation (AUTOMATIC): Use the Snowflake algorithm to automatically generate a globally unique Id, Long type;

- Primary Key (PRIMARY_KEY): Generate Id through VertexLabel+PrimaryKeyValues, String type;

- Custom (CUSTOMIZE_STRING|CUSTOMIZE_NUMBER): User-defined Id, which is divided into two types: String and Long, and you need to ensure the uniqueness of the Id yourself;

The default Id strategy is AUTOMATIC. If the user calls the primaryKeys() method and sets valid PrimaryKeys, the PRIMARY_KEY strategy is enabled automatically. With the PRIMARY_KEY strategy enabled, HugeGraph can deduplicate data based on PrimaryKeys.

- AUTOMATIC ID Policy

schema.vertexLabel("person")

.useAutomaticId()

.properties("name", "age", "city")

.create();

graph.addVertex(T.label, "person","name", "marko", "age", 18, "city", "Beijing");

- PRIMARY_KEY ID policy

schema.vertexLabel("person")

.usePrimaryKeyId()

.properties("name", "age", "city")

.primaryKeys("name", "age")

.create();

graph.addVertex(T.label, "person","name", "marko", "age", 18, "city", "Beijing");

- CUSTOMIZE_STRING ID Policy

schema.vertexLabel("person")

.useCustomizeStringId()

.properties("name", "age", "city")

.create();

graph.addVertex(T.label, "person", T.id, "123456", "name", "marko","age", 18, "city", "Beijing");

- CUSTOMIZE_NUMBER ID Policy

schema.vertexLabel("person")

.useCustomizeNumberId()

.properties("name", "age", "city")

.create();

graph.addVertex(T.label, "person", T.id, 123456, "name", "marko","age", 18, "city", "Beijing");

If users need vertex deduplication, there are three options:

- Use the PRIMARY_KEY strategy: duplicates are overwritten automatically. Suitable for batch insertion of large amounts of data; users cannot tell whether an overwrite has occurred

- Use the AUTOMATIC strategy with read-and-modify. Suitable for inserting small amounts of data; users can tell exactly whether an overwrite occurs

- Use the CUSTOMIZE_STRING or CUSTOMIZE_NUMBER strategy: the user guarantees uniqueness
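The overwrite behavior of the PRIMARY_KEY option can be pictured with a small stand-alone model: when the Id is derived from the label and the primary key values, inserting the same vertex twice hits the same key and replaces the earlier entry. The `label:value!value` Id layout and the class below are hypothetical and chosen only for readability; they do not describe HugeGraph's actual Id encoding.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Toy model of deduplication by primary key. The Id format is a made-up
// layout for illustration, not HugeGraph's real encoding.
final class PrimaryKeyDedupSketch {

    private final Map<String, Map<String, Object>> vertices = new LinkedHashMap<>();

    // Derive an Id from the vertex label plus the primary key values.
    static String primaryKeyId(String label, Object... primaryKeyValues) {
        StringBuilder id = new StringBuilder(label).append(':');
        for (int i = 0; i < primaryKeyValues.length; i++) {
            if (i > 0) {
                id.append('!');
            }
            id.append(primaryKeyValues[i]);
        }
        return id.toString();
    }

    // Stores the vertex; returns true if an existing vertex was overwritten.
    boolean addVertex(String id, Map<String, Object> properties) {
        return vertices.put(id, properties) != null;
    }

    int size() {
        return vertices.size();
    }
}
```

In this model the caller can see the return value of addVertex, whereas with a real batch insert the overwrite happens silently, which is the trade-off described in the first option above.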

4. EdgeId policy

The EdgeId of HugeGraph is composed of srcVertexId + edgeLabel + sortKey + tgtVertexId. Among these, sortKey is an important concept in HugeGraph.

There are two reasons for including the edge sortKey in the unique Id of an edge:

- If there are multiple edges of the same label between two vertices, they can be distinguished by sortKey

- For supernodes, edges can be sorted and truncated by sortKey

Since the EdgeId is composed of srcVertexId + edgeLabel + sortKey + tgtVertexId, HugeGraph automatically overwrites an edge when the same edge is inserted multiple times, which achieves deduplication. Note that the properties of the edge are also overwritten in batch insert mode.

In addition, because HugeGraph’s EdgeId adopts an automatic deduplication strategy, HugeGraph considers a self-loop (a vertex with an edge pointing to itself) to be a single edge. By contrast, some graph databases that generate edge Ids automatically consider such a graph to have two edges.

HugeGraph supports only directed edges; an undirected edge can be modeled by creating two edges, one Out and one In.
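The effect of composing the EdgeId from four parts can be sketched with a plain map. In the toy model below, the `>` separator, the string-typed properties, and the class name are assumptions for illustration; only the order of the four parts comes from the text above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Toy edge store keyed by a composed edge Id. The separator and the
// representation of properties are hypothetical.
final class EdgeIdSketch {

    private final Map<String, String> edges = new LinkedHashMap<>();

    // srcVertexId + edgeLabel + sortKey + tgtVertexId, joined with ">".
    static String edgeId(String src, String label, String sortKey, String tgt) {
        return String.join(">", src, label, sortKey, tgt);
    }

    // Inserting an edge with an existing Id overwrites its properties.
    void addEdge(String src, String label, String sortKey, String tgt, String properties) {
        edges.put(edgeId(src, label, sortKey, tgt), properties);
    }

    int edgeCount() {
        return edges.size();
    }

    String propertiesOf(String edgeId) {
        return edges.get(edgeId);
    }
}
```

Adding the same edge twice leaves one entry holding the later properties, while changing only the sortKey (for example, a date) produces a second, distinct edge between the same two vertices.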

5. HugeGraph transaction overview

TinkerPop transaction overview

A TinkerPop transaction refers to a unit of work that performs operations on the database. The operations within a transaction either all succeed or all fail. For a detailed introduction, please refer to the official TinkerPop documentation: http://tinkerpop.apache.org/docs/current/reference/#transactions

TinkerPop transaction operations

- open: open a transaction

- commit: commit a transaction

- rollback: roll back a transaction

- close: close a transaction

TinkerPop transaction specification

- A transaction must be explicitly committed before it takes effect (uncommitted modifications are visible only to queries within the same transaction)

- A transaction must be opened before it can be committed or rolled back

- If the transaction is set to open automatically (the default), there is no need to open it explicitly; if it is set to open manually, it must be opened explicitly

- Three behaviors can be set for closing a transaction: automatic commit, automatic rollback (the default), and manual (explicit close is prohibited)

- The transaction must be closed after committing or rolling back

- The transaction must be open after a query

- Transactions (non-threaded tx) must be thread-isolated: operations performed by multiple threads on the same transaction do not affect each other

For more transaction specification use cases, see: Transaction Test

HugeGraph transaction implementation

- All operations in a transaction either all succeed or all fail

- A transaction can only read what other transactions have committed (Read Committed)

- All uncommitted operations are visible to queries within the same transaction, including:

- An added vertex can be queried

- A deleted vertex is filtered out

- The edges related to a deleted vertex are filtered out

- An added edge can be queried

- A deleted edge is filtered out

- Added or modified (vertex, edge) attributes take effect at query time

- Deleted (vertex, edge) attributes take effect at query time

- All uncommitted operations become invalid after the transaction is rolled back, including:

- Adding and deleting vertices and edges

- Adding, modifying, and deleting attributes

Example: One transaction cannot read another transaction’s uncommitted content

static void testUncommittedTx(final HugeGraph graph) throws InterruptedException {

final CountDownLatch latchUncommit = new CountDownLatch(1);

final CountDownLatch latchRollback = new CountDownLatch(1);

Thread thread = new Thread(() -> {

// this is a new transaction in the new thread

graph.tx().open();

System.out.println("current transaction operations");

Vertex james = graph.addVertex(T.label, "author",

"id", 1, "name", "James Gosling",

"age", 62, "lived", "Canadian");

Vertex java = graph.addVertex(T.label, "language", "name", "java",

"versions", Arrays.asList(6, 7, 8));

james.addEdge("created", java);

// we can query the uncommitted records in the current transaction

System.out.println("current transaction assert");

assert graph.vertices().hasNext() == true;

assert graph.edges().hasNext() == true;

latchUncommit.countDown();

try {

latchRollback.await();

} catch (InterruptedException e) {

throw new RuntimeException(e);

}

System.out.println("current transaction rollback");

graph.tx().rollback();

});

thread.start();

// query none result in other transaction when not commit()

latchUncommit.await();

System.out.println("other transaction assert for uncommitted");

assert !graph.vertices().hasNext();

assert !graph.edges().hasNext();

latchRollback.countDown();

thread.join();

// query none result in other transaction after rollback()

System.out.println("other transaction assert for rollback");

assert !graph.vertices().hasNext();

assert !graph.edges().hasNext();

}

Transaction implementation principles

- The server implements isolation internally by binding transactions to threads (ThreadLocal)

- Within a transaction, uncommitted content overrides older data in chronological order, so that queries in the transaction see the latest version of the data

- The underlying layer relies on the backend database to guarantee transaction atomicity (for example, the batch interface of Cassandra/RocksDB guarantees atomicity)
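The first two points can be illustrated with a minimal stand-alone sketch: pending writes are held per thread and layered over the committed data when reading. This is a toy key-value model with hypothetical names, not HugeGraph's transaction code; deletions are not modeled, and the commit here is not atomic, since a real backend would provide atomicity through a batch write.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Toy store with thread-bound transactions (illustrative only).
final class ThreadLocalTxSketch {

    private final Map<String, String> committed = new ConcurrentHashMap<>();

    // Each thread gets its own map of uncommitted writes.
    private final ThreadLocal<Map<String, String>> pending =
            ThreadLocal.withInitial(HashMap::new);

    void put(String key, String value) {
        pending.get().put(key, value);
    }

    // Reads see this thread's uncommitted writes first, then committed data.
    String get(String key) {
        String own = pending.get().get(key);
        return own != null ? own : committed.get(key);
    }

    // Publishes this thread's writes to all threads.
    void commit() {
        committed.putAll(pending.get());
        pending.get().clear();
    }

    // Discards this thread's uncommitted writes.
    void rollback() {
        pending.get().clear();
    }
}
```

A write made on one thread is visible to get() on that thread right away, but another thread sees it only after commit(), which matches the Read Committed behavior described earlier.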

Notice

The RESTful API does not expose the transaction interface for the time being

The TinkerPop API allows transactions to be opened; they are closed automatically when the request completes (Gremlin Server forces the close)

3 - HugeGraph Plugin mechanism and plug-in extension process

Background

- HugeGraph is open source and easy to use. General users can add plug-in extensions without changing the source code.

- HugeGraph supports a variety of built-in storage backends and also allows users to add custom backends without changing the existing source code.

- HugeGraph supports full-text search, which involves word segmentation in various languages. There are currently 8 built-in Chinese tokenizers, and users can also add custom tokenizers without changing the existing source code.

Extensible dimensions

Currently, plug-ins can extend HugeGraph in the following dimensions:

- Backend storage

- Serializer

- Custom configuration items

- Tokenizer

Plug-in implementation mechanism

- HugeGraph provides a plug-in interface, HugeGraphPlugin, and supports plug-ins through the Java SPI mechanism

- HugeGraph provides four extension registration functions: registerOptions(), registerBackend(), registerSerializer(), and registerAnalyzer()

- The plug-in implementer implements the corresponding Options, Backend, Serializer, or Analyzer interface

- The plug-in implementer implements the register() method of the HugeGraphPlugin interface, registers the implementation classes from the previous point in this method, and packages the result as a jar

- The plug-in user puts the jar in the plugins directory under the HugeGraph Server installation directory, sets the relevant configuration items to the values defined by the plug-in, and restarts the server for the change to take effect

Plug-in implementation process example

1. Create a new Maven project

1.1 Name the project: hugegraph-plugin-demo

1.2 Add the hugegraph-core Jar dependency

The details of the Maven pom.xml are as follows:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.apache.hugegraph</groupId>

<artifactId>hugegraph-plugin-demo</artifactId>

<version>1.0.0</version>

<packaging>jar</packaging>

<name>hugegraph-plugin-demo</name>

<dependencies>

<dependency>

<groupId>org.apache.hugegraph</groupId>

<artifactId>hugegraph-core</artifactId>

<version>${project.version}</version>

</dependency>

</dependencies>

</project>

2. Implement the extensions

2.1 Extending a custom backend

2.1.1 Implement the interface BackendStoreProvider

- Implement the interface: org.apache.hugegraph.backend.store.BackendStoreProvider

- Or inherit the abstract class: org.apache.hugegraph.backend.store.AbstractBackendStoreProvider

Take the RocksDB backend RocksDBStoreProvider as an example:

public class RocksDBStoreProvider extends AbstractBackendStoreProvider {

protected String database() {

return this.graph().toLowerCase();

}

@Override

protected BackendStore newSchemaStore(String store) {

return new RocksDBSchemaStore(this, this.database(), store);

}

@Override

protected BackendStore newGraphStore(String store) {

return new RocksDBGraphStore(this, this.database(), store);

}

@Override

public String type() {

return "rocksdb";

}

@Override

public String version() {

return "1.0";

}

}

2.1.2 Implement interface BackendStore

The BackendStore interface is defined as follows:

public interface BackendStore {

// Store name

public String store();

// Database name

public String database();

// Get the parent provider

public BackendStoreProvider provider();

// Open/close database

public void open(HugeConfig config);

public void close();

// Initialize/clear database

public void init();

public void clear();

// Add/delete data

public void mutate(BackendMutation mutation);

// Query data

public Iterator<BackendEntry> query(Query query);

// Transaction

public void beginTx();

public void commitTx();

public void rollbackTx();

// Get metadata by key

public <R> R metadata(HugeType type, String meta, Object[] args);

// Backend features

public BackendFeatures features();

// Generate an id for a specific type

public Id nextId(HugeType type);

}

2.1.3 Extending custom serializers

The serializer must inherit the abstract class org.apache.hugegraph.backend.serializer.AbstractSerializer (which implements GraphSerializer and SchemaSerializer). The main interfaces are defined as follows:

public interface GraphSerializer {

public BackendEntry writeVertex(HugeVertex vertex);

public BackendEntry writeVertexProperty(HugeVertexProperty<?> prop);

public HugeVertex readVertex(HugeGraph graph, BackendEntry entry);

public BackendEntry writeEdge(HugeEdge edge);

public BackendEntry writeEdgeProperty(HugeEdgeProperty<?> prop);

public HugeEdge readEdge(HugeGraph graph, BackendEntry entry);

public BackendEntry writeIndex(HugeIndex index);

public HugeIndex readIndex(HugeGraph graph, ConditionQuery query, BackendEntry entry);

public BackendEntry writeId(HugeType type, Id id);

public Query writeQuery(Query query);

}

public interface SchemaSerializer {

public BackendEntry writeVertexLabel(VertexLabel vertexLabel);

public VertexLabel readVertexLabel(HugeGraph graph, BackendEntry entry);

public BackendEntry writeEdgeLabel(EdgeLabel edgeLabel);

public EdgeLabel readEdgeLabel(HugeGraph graph, BackendEntry entry);

public BackendEntry writePropertyKey(PropertyKey propertyKey);

public PropertyKey readPropertyKey(HugeGraph graph, BackendEntry entry);

public BackendEntry writeIndexLabel(IndexLabel indexLabel);

public IndexLabel readIndexLabel(HugeGraph graph, BackendEntry entry);

}

2.1.4 Extend custom configuration items

When adding a custom backend, it may be necessary to add new configuration items. The implementation process mainly includes:

- Add a configuration item container class that extends org.apache.hugegraph.config.OptionHolder

- Provide a singleton method public static OptionHolder instance(), and call OptionHolder.registerOptions() when the object is initialized

- Add configuration item declarations: the type of a single-value configuration item is ConfigOption, and the type of a multi-value configuration item is ConfigListOption

Take the RocksDB configuration item definition as an example:

public class RocksDBOptions extends OptionHolder {

private RocksDBOptions() {

super();

}

private static volatile RocksDBOptions instance;

public static synchronized RocksDBOptions instance() {

if (instance == null) {

instance = new RocksDBOptions();

instance.registerOptions();

}

return instance;

}

public static final ConfigOption<String> DATA_PATH =

new ConfigOption<>(

"rocksdb.data_path",

"The path for storing data of RocksDB.",

disallowEmpty(),

"rocksdb-data"

);

public static final ConfigOption<String> WAL_PATH =

new ConfigOption<>(

"rocksdb.wal_path",

"The path for storing WAL of RocksDB.",

disallowEmpty(),

"rocksdb-data"

);

public static final ConfigListOption<String> DATA_DISKS =

new ConfigListOption<>(

"rocksdb.data_disks",

false,

"The optimized disks for storing data of RocksDB. " +

"The format of each element: `STORE/TABLE: /path/to/disk`." +

"Allowed keys are [graph/vertex, graph/edge_out, graph/edge_in, " +

"graph/secondary_index, graph/range_index]",

null,

String.class,

ImmutableList.of()

);

}

2.2 Extend custom tokenizer

The tokenizer needs to implement the interface org.apache.hugegraph.analyzer.Analyzer. Take a space tokenizer, SpaceAnalyzer, as an example:

package org.apache.hugegraph.plugin;

import java.util.Arrays;

import java.util.HashSet;

import java.util.Set;

import org.apache.hugegraph.analyzer.Analyzer;

public class SpaceAnalyzer implements Analyzer {

@Override

public Set<String> segment(String text) {

return new HashSet<>(Arrays.asList(text.split(" ")));

}

}
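One detail of splitting on a single space is worth checking: String.split(" ") keeps an empty string between two consecutive spaces, so the resulting set can contain "". The stand-alone sketch below contrasts that with splitting on runs of whitespace. The class and method names are hypothetical, and the class deliberately does not implement the HugeGraph Analyzer interface so that it runs without any dependency.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Stand-alone comparison of two ways to split text into tokens.
final class WhitespaceSegmenter {

    // Same splitting rule as the example above: one space as the delimiter.
    static Set<String> segmentNaive(String text) {
        return new HashSet<>(Arrays.asList(text.split(" ")));
    }

    // Splits on runs of whitespace and never yields an empty token.
    static Set<String> segment(String text) {
        String trimmed = text.trim();
        if (trimmed.isEmpty()) {
            return new HashSet<>();
        }
        return new HashSet<>(Arrays.asList(trimmed.split("\\s+")));
    }
}
```

Whether empty tokens matter depends on how the tokens are indexed; if they do, the second variant could be adapted into the segment() method of a custom Analyzer.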

3. Implement the plug-in interface and register it

The plug-in registration entry point is HugeGraphPlugin.register(). A custom plug-in must implement this interface method and register the extension items defined above inside it. The interface org.apache.hugegraph.plugin.HugeGraphPlugin is defined as follows:

public interface HugeGraphPlugin {

public String name();

public void register();

public String supportsMinVersion();

public String supportsMaxVersion();

}

HugeGraphPlugin also provides 4 static methods for registering extensions:

- registerOptions(String name, String classPath): register configuration items

- registerBackend(String name, String classPath): register backend (BackendStoreProvider)

- registerSerializer(String name, String classPath): register serializer

- registerAnalyzer(String name, String classPath): register tokenizer
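To make the (name, classPath) signature more concrete, here is one way such a registry could work in principle: keep a map from the registered name to a class name and instantiate the class by reflection when it is needed. This is a hypothetical sketch, not the HugeGraph implementation; in particular, rejecting duplicate names is an assumption made for the example.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical name-to-class registry, illustrating the general idea only.
final class PluginRegistrySketch {

    private final Map<String, String> analyzers = new ConcurrentHashMap<>();

    // Remember which class implements the analyzer with the given name.
    void registerAnalyzer(String name, String classPath) {
        String previous = analyzers.putIfAbsent(name, classPath);
        if (previous != null) {
            throw new IllegalArgumentException("Analyzer already registered: " + name);
        }
    }

    // Load the registered class and create an instance via its no-arg constructor.
    Object newAnalyzer(String name) {
        String classPath = analyzers.get(name);
        if (classPath == null) {
            throw new IllegalArgumentException("Unknown analyzer: " + name);
        }
        try {
            return Class.forName(classPath).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + classPath, e);
        }
    }
}
```

Registering by class name rather than by instance means the class only needs to be on the classpath when it is first used, which fits a model where plug-in jars are dropped into a directory.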

The following is an example of registering the SpaceAnalyzer tokenizer:

package org.apache.hugegraph.plugin;

public class DemoPlugin implements HugeGraphPlugin {

@Override

public String name() {

return "demo";

}

@Override

public void register() {

HugeGraphPlugin.registerAnalyzer("demo", SpaceAnalyzer.class.getName());

}

}

4. Configure SPI entry

- Make sure the services directory exists: hugegraph-plugin-demo/resources/META-INF/services

- Create a text file in the services directory: org.apache.hugegraph.plugin.HugeGraphPlugin

- The content of the file is as follows: org.apache.hugegraph.plugin.DemoPlugin

5. Build the Jar package

Package the project with Maven: execute the command mvn package in the project directory, and a Jar package file will be generated in the target directory. To use the plug-in, copy the Jar package to the plugins directory and restart the service for it to take effect.

4 - Backup and Restore

Description

Backup and Restore are the functions for backing up and restoring a graph. The data backed up and restored includes metadata (schema) and graph data (vertices and edges).

Backup

Export the metadata and graph data of a graph in the HugeGraph system in JSON format.

Restore

Re-import the data in JSON format exported by Backup to a graph in the HugeGraph system.

Restore has two modes:

- In Restoring mode, the metadata and graph data exported by Backup are restored to the HugeGraph system unchanged. This mode can be used for graph backup and recovery, and the target graph is generally a new graph (without metadata or graph data). For example:

- System upgrade: first back up the graph, then upgrade the system, and finally restore the graph to the new system

- Graph migration: use the Backup function to export the graph from one HugeGraph system, and then use the Restore function to import the graph into another HugeGraph system

- In Merging mode, the metadata and graph data exported by Backup are imported into another graph that already has metadata or graph data. During the process, the IDs of the metadata may change, and the IDs of vertices and edges change accordingly.

- Can be used to merge graphs

Instructions

You can use hugegraph-tools to backup and restore graphs.

Backup

bin/hugegraph backup -t all -d data

This command backs up all the metadata and graph data of the hugegraph graph at http://127.0.0.1 to the data directory.

Backup works in all three graph modes.

Restore

Restore has two modes: RESTORING and MERGING. Before restoring, you must first set the graph mode according to your needs.

Step 1: View and set graph mode

bin/hugegraph graph-mode-get

This command is used to view the current graph mode, which is one of NONE, RESTORING, or MERGING.

bin/hugegraph graph-mode-set -m RESTORING

This command is used to set the graph mode. Before Restore, it can be set to RESTORING or MERGING mode. In the example, it is set to RESTORING.

Step 2: Restore data

bin/hugegraph restore -t all -d data

This command re-imports all metadata and graph data in the data directory to the hugegraph graph at http://127.0.0.1.

Step 3: Reset graph mode

bin/hugegraph graph-mode-set -m NONE

This command resets the graph mode to NONE.

This completes a full graph backup and restore process.

Help

For detailed usage of backup and restore commands, please refer to the hugegraph-tools documentation.

API description for Backup/Restore usage and implementation

Backup

Backup exports metadata and graph data through the corresponding list (GET) APIs; no new API is added.

Restore

Restore imports metadata and graph data through the corresponding create (POST) APIs; no new API is added.

There are two different modes for Restore: Restoring and Merging. In addition, there is the regular mode NONE (the default). The differences are as follows:

- In None mode, metadata and graph data are written normally; please refer to the function description. In particular:

- Specifying an ID is not allowed when creating metadata (schema)

- Specifying an ID is not allowed for graph data (vertex) when the id strategy is Automatic

- In Restoring mode, data is restored to a new graph. In particular:

- Specifying an ID is allowed when creating metadata (schema)

- Specifying an ID is allowed for graph data (vertex) when the id strategy is Automatic

- In Merging mode, data is merged into a graph with existing metadata and graph data. In particular:

- Specifying an ID is not allowed when creating metadata (schema)

- Specifying an ID is allowed for graph data (vertex) when the id strategy is Automatic

Normally, the graph mode is None. When you need to restore a graph, temporarily change the graph mode to Restoring or Merging as needed, and reset the graph mode to None once the Restore is complete.
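The rules for the three modes can be summarized as a small lookup. The enum below is only a restatement of the rules listed above in code form; its name and accessor names are hypothetical and do not correspond to a HugeGraph class.

```java
// Restates the three graph modes and the two ID rules described above.
// Names are illustrative, not taken from the HugeGraph source.
enum GraphModeSketch {
    NONE(false, false),
    RESTORING(true, true),
    MERGING(false, true);

    private final boolean schemaIdAllowed;
    private final boolean automaticVertexIdAllowed;

    GraphModeSketch(boolean schemaIdAllowed, boolean automaticVertexIdAllowed) {
        this.schemaIdAllowed = schemaIdAllowed;
        this.automaticVertexIdAllowed = automaticVertexIdAllowed;
    }

    // May an ID be specified when creating metadata (schema)?
    boolean schemaIdAllowed() {
        return schemaIdAllowed;
    }

    // May an ID be specified for a vertex whose id strategy is Automatic?
    boolean automaticVertexIdAllowed() {
        return automaticVertexIdAllowed;
    }
}
```

Read this way, Merging differs from Restoring only in that schema IDs cannot be specified, which is consistent with the note that metadata IDs may change during a merge.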

The implemented RESTful API for setting graph mode is as follows:

View the mode of a graph. This operation requires administrator privileges

Method & Url

GET http://localhost:8080/graphs/{graph}/mode

Response Status

200

Response Body

{

"mode": "NONE"

}

Legal graph modes include: NONE, RESTORING, MERGING

Set the mode of a graph. This operation requires administrator privileges

Method & Url

PUT http://localhost:8080/graphs/{graph}/mode

Request Body

"RESTORING"

Legal graph modes include: NONE, RESTORING, MERGING

Response Status

200

Response Body

{

"mode": "RESTORING"

}
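As a sketch of how a client might call the two graph mode endpoints, the following builds the requests with the JDK HTTP client (Java 11 or later). The helper class and method names are hypothetical, the Content-Type header is an assumption about what the server expects, and authentication is left out even though these operations require administrator privileges.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Builds (but does not send) requests for the graph mode endpoints.
// Class and method names are illustrative only.
final class GraphModeRequests {

    // GET {baseUrl}/graphs/{graph}/mode
    static HttpRequest getMode(String baseUrl, String graph) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/graphs/" + graph + "/mode"))
                .GET()
                .build();
    }

    // PUT {baseUrl}/graphs/{graph}/mode with a JSON string body such as "RESTORING"
    static HttpRequest setMode(String baseUrl, String graph, String mode) {
        String jsonBody = "\"" + mode + "\"";
        return HttpRequest.newBuilder(URI.create(baseUrl + "/graphs/" + graph + "/mode"))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }
}
```

A request built this way could then be sent with HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()), for example setMode("http://localhost:8080", "hugegraph", "RESTORING") before a restore and setMode(..., "NONE") afterwards.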

5 - FAQ

How to choose the backend storage? RocksDB, Cassandra, HBase, or MySQL?

It depends on your specific needs. Generally, for a stand-alone deployment or a data volume under 10 billion, RocksDB is recommended; otherwise, a backend cluster that uses distributed storage is recommended.

Prompt when starting the service: xxx (core dumped) xxx

Please check whether the JDK version is Java 11; at least Java 8 is required.

The service starts successfully, but a prompt similar to “Unable to connect to the backend or the connection is not open” appears when operating the graph

Before starting the service for the first time, you need to initialize the backend with init-store. Subsequent versions will give a clearer and more direct prompt.

Do all backends need init-store to be executed before use, and can the serialization options be filled in at will?

All backends except memory require it, such as cassandra, hbase, and rocksdb. The serializer must correspond one-to-one with the backend and cannot be filled in at will.

Executing init-store reports an error: Exception in thread "main" java.lang.UnsatisfiedLinkError: /tmp/librocksdbjni3226083071221514754.so: /usr/lib64/libstdc++.so.6: version `GLIBCXX_3.4.10' not found (required by /tmp/librocksdbjni3226083071221514754.so)

RocksDB requires gcc 4.3.0 (GLIBCXX_3.4.10) and above.

The error NoHostAvailableException occurred while executing init-store.sh.

NoHostAvailableException means that the Cassandra service cannot be connected to. If you are sure that you want to use the Cassandra backend, please install and start this service first. The message itself may not be clear enough, and we will update the documentation to provide further explanation.

The bin directory contains start-hugegraph.sh, start-restserver.sh and start-gremlinserver.sh. These scripts seem to be related to startup. Which one should be used?

Since version 0.3.3, GremlinServer and RestServer have been merged into HugeGraphServer. To start, use start-hugegraph.sh. The latter two will be removed in future versions.

Two graphs are configured, named hugegraph and hugegraph1, and the command to start the service is start-hugegraph.sh. Is only the hugegraph graph opened?

start-hugegraph.sh opens all graphs listed under graphs in gremlin-server.yaml. The script name and the graph name have no direct relationship.

After the service starts successfully, garbled characters are returned when using curl to query all vertices

The batch vertices/edges returned by the server are compressed (gzip). The output can be piped to gunzip for decompression (curl http://example | gunzip), or the request can be sent with the postman plug-in of Firefox or the restlet plug-in of Chrome, in which case the response data is decompressed automatically.

When using the vertex Id to query a vertex through the RESTful API, it returns empty, but the vertex does exist

Check the type of the vertex Id. If it is a string type, the “id” part of the API URL needs to be enclosed in double quotes; for numeric types, the Id does not need to be quoted.

Vertex Id has been double quoted as required, but querying the vertex via the RESTful API still returns empty

Check whether the vertex id contains any of the URL reserved characters +, space, /, ?, %, &, and =. If it does, they need to be encoded. The following table gives the encoded values:

special character | encoded value
------------------| -------------
+                 | %2B
space             | %20
/                 | %2F
?                 | %3F
%                 | %25
#                 | %23
&                 | %26
=                 | %3D

Timeout when querying vertices or edges of a certain category (query by label)

Since the amount of data belonging to a certain label may be relatively large, please add a limit.
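For the vertex Id encoding question above, the encoded values in the table can be produced programmatically. The sketch below uses the JDK's URLEncoder (the Charset overload needs Java 10 or later); because URLEncoder follows form-encoding rules and writes a space as +, the result is post-processed to use %20. The class and method names are hypothetical.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Percent-encodes a vertex Id for use inside a request URL.
final class VertexIdUrlSketch {

    static String encodeId(String id) {
        // A literal '+' in the Id is already encoded as %2B at this point,
        // so any '+' left in the output stands for a space.
        return URLEncoder.encode(id, StandardCharsets.UTF_8).replace("+", "%20");
    }

    // String Ids are wrapped in double quotes, which become %22 once encoded.
    static String quotedStringId(String id) {
        return encodeId("\"" + id + "\"");
    }
}
```

For example, encodeId applied to the Id a+b c/d yields a%2Bb%20c%2Fd, matching the table entries for +, space, and /.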

It is possible to operate the graph through the RESTful API, but when sending Gremlin statements, an error is reported: Request Failed(500)

The configuration of GremlinServer may be wrong. Check whether the host and port in gremlin-server.yaml match the gremlinserver.url in rest-server.properties. If they do not match, modify them and then restart the service.

When using Loader to import data, a Socket Timeout exception occurs, and then Loader is interrupted

Continuously importing data puts too much pressure on the Server, which causes some requests to time out. The pressure on the Server can be relieved by adjusting the parameters of Loader (such as the number of retries, the retry interval, and the error tolerance), which reduces the frequency of this problem.

How to delete all vertices and edges? There is no such interface in the RESTful API, and calling g.V().drop() of gremlin reports the error Vertices in transaction have reached capacity xxx

At present, there is no good way to delete all the data. Users who deploy the Server and the backend themselves can clear the database directly and restart the Server. Alternatively, use the paging API or scan API to get all the data first, and then delete the items one by one.

The database has been cleared and init-store has been executed, but when trying to add a schema, the prompt “xxx has existed” appeared.

There is a cache in the HugeGraphServer, so the Server must be restarted when the database is cleared; otherwise, the residual cache will be inconsistent with the database.

An error is reported when inserting vertices or edges: Id max length is 128, but got xxx {yyy} or Big id max length is 32768, but got xxx

To ensure query performance, the current backend storage limits the length of the id column. The vertex id cannot exceed 128 bytes, the edge id cannot exceed 32768 bytes, and the index id cannot exceed 128 bytes.

Is there support for nested attributes, and if not, are there any alternatives?

Nested attributes are currently not supported. Alternative: Nested attributes can be taken out as individual vertices and connected with edges.

Can an EdgeLabel connect multiple pairs of VertexLabels, such as an “investment” relationship, which can be an “individual” investing in an “enterprise”, or an “enterprise” investing in an “enterprise”?

An EdgeLabel does not support connecting multiple pairs of VertexLabels. Users need to split the EdgeLabel into finer-grained labels, such as “personal investment” and “enterprise investment”.

Prompt HTTP 415 Unsupported Media Type when sending a request through RestAPI

Content-Type: application/json needs to be specified in the request header.

Other issues can be searched in the issue area of the corresponding project, such as Server-Issues / Loader Issues

6 - Security Report

Reporting New Security Problems with Apache HugeGraph

The HugeGraph community adheres to ASF specifications and takes a proactive, open attitude toward resolving security issues in the project.

We strongly recommend that users first report such issues to our dedicated security email list, with detailed procedures specified in the ASF SEC code of conduct.

Please note that the security email group is reserved for reporting undisclosed security vulnerabilities and following up on the vulnerability resolution process.

Regular software Bug/Error reports should be directed to Github Issue/Discussion or the HugeGraph-Dev email group. Emails sent to the security list that are unrelated to security issues will be ignored.

The independent security email (group) address is: security@hugegraph.apache.org

The general process for handling security vulnerabilities is as follows:

- The reporter privately reports the vulnerability to the Apache HugeGraph SEC email group (including as much information as possible, such as reproducible versions, relevant descriptions, reproduction methods, and the scope of impact)

- The HugeGraph project security team collaborates privately with the reporter to discuss the vulnerability resolution (after preliminary confirmation, a CVE number can be requested for registration)

- The project creates a new version of the software package affected by the vulnerability to provide a fix

- At an appropriate time, a general description of the vulnerability and how to apply the fix will be publicly disclosed (in compliance with ASF standards, the announcement should not disclose sensitive information such as reproduction details)

- Official CVE release and related procedures follow the ASF-SEC page

Known Security Vulnerabilities (CVEs)

HugeGraph main project (Server/PD/Store)

- CVE-2024-27348: HugeGraph-Server - Command execution in gremlin

- CVE-2024-27349: HugeGraph-Server - Bypass whitelist in Auth mode

HugeGraph-Toolchain project (Hubble/Loader/Client/Tools/..)

- CVE-2024-27347: HugeGraph-Hubble - SSRF in Hubble connection page